The “AI is failing” headline has become predictable. Executives cite disappointing pilots. Boards question budgets. Teams quietly shelve tools that never made it to production. Yet beneath these stories lies a simpler problem: most organisations never defined what success looks like before they started.

The “AI is Failing” Story is Mostly a Measurement Problem

AI failure headlines often blur the line between technology and business integration. While language models perform well in benchmarks, they may hallucinate, show bias, or struggle with proprietary workflows—especially in regulated fields like pharmacovigilance. The real issue is whether organisations have done the work required to capture value.

When we say an AI project “failed,” we usually mean one of three things: the pilot never reached production; the tool reached production but nobody adopted it; or adoption occurred but nobody measured the outcome. None of these is a technology failure. They are planning failures.

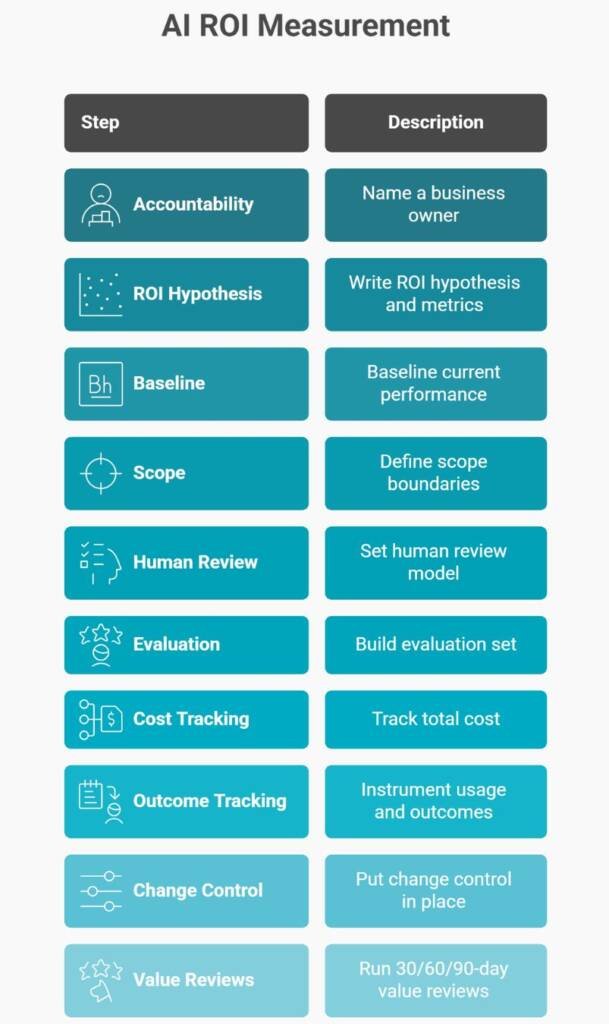

The path forward requires treating ROI as a design requirement—not a retrospective hope. Organisations that define metrics, set baselines, and name accountable owners before deployment move from pilot theatre to production value.

What the “95%” Claim Actually Says—and What It Doesn’t

The State of AI in Business 2025 report from Project NANDA at the MIT Media Lab presented a headline statistic: 95% of task-specific generative AI implementations fail to achieve marked and sustained measurable P&L impact. The figure is based on 52 structured interviews and 153 survey responses, a sample size and methodology whose statistical validity and generalisability independent researchers have questioned.

This finding describes a ‘GenAI Divide’ separating successful implementers from stalled experiments. However, the research relies on self-reported interview data rather than independently verified company records. Multiple analysts have called for full methodological disclosure and have noted that enterprise IT projects historically experience comparable or higher failure rates.

What this means: by the report’s measure, the vast majority of pilots produce no measurable financial return, and model capability was not the biggest problem. The research team identified a ‘learning gap’ as one contributing factor. The full set of failure causes, however, also includes data quality issues, poor process definition, inadequate change management, cost underestimation, and adoption resistance, which suggests the gap reflects broader organisational capability limits rather than a knowledge deficit alone.

Additionally, in regulated environments such as the pharmaceutical industry, organisations must navigate compliance requirements that generic enterprise AI frameworks do not address, creating a learning gap specific to GxP-regulated AI implementation.

What this does not mean: AI never works, or the technology is fundamentally flawed. GenAI doesn’t fail in the lab. It fails in the enterprise when it collides with vague goals, poor data, and organisational inertia. It is also worth noting that enterprise IT projects in general are reported to experience failure rates of 80-90%, so AI pilot performance may not be uniquely problematic when set against historical digital transformation outcomes.

Pilot Success vs Production Success: A demo proves capability. Production proves value. The gap requires integration, adoption, and measurement—none of which happen automatically.

The Real Misconception: ROI Isn’t a Feeling—It’s a Design Requirement

Organisations struggle to find AI ROI because they never designed for it. Common traps include defining ROI after the pilot concludes, claiming benefits as “potential” rather than measured, operating without a baseline, and watching value disappear through non-adoption.

Productivity benefits can lag while organisations retool processes and retrain staff. This makes early ROI difficult to capture—unless measurement was built into the project from the start.

ROI requires three things before deployment: a hypothesis stating the expected outcome, a baseline capturing current performance, and decision rules defining what happens at review.
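As a minimal sketch of how those three elements might be recorded before deployment, the Python below stores a hypothesis, a baseline, and a decision rule in one structure. The class name, field names, thresholds, and the example workflow are all hypothetical and chosen only for illustration; the scale, iterate, or stop outcomes follow the wording used later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoiHypothesis:
    """Hypothetical record written before deployment (illustrative only)."""
    workflow: str               # the workflow being changed
    metric: str                 # what is measured
    baseline: float             # current performance, captured before the pilot
    target_improvement: float   # expected relative improvement, e.g. 0.20 = 20%
    stop_below: float           # relative improvement below which the pilot stops
    lower_is_better: bool = True


def relative_improvement(h: RoiHypothesis, measured: float) -> float:
    """Improvement relative to the baseline; positive means better."""
    change = (h.baseline - measured) if h.lower_is_better else (measured - h.baseline)
    return change / h.baseline


def decide(h: RoiHypothesis, measured: float) -> str:
    """Apply the decision rule that was agreed before deployment."""
    gain = relative_improvement(h, measured)
    if gain >= h.target_improvement:
        return "scale"
    if gain >= h.stop_below:
        return "iterate"
    return "stop"


# Assumed example values: a 40-minute baseline and a 20% improvement target.
hypothesis = RoiHypothesis(
    workflow="case intake triage",
    metric="minutes per case",
    baseline=40.0,
    target_improvement=0.20,
    stop_below=0.05,
)
print(decide(hypothesis, measured=30.0))  # 25% faster than baseline -> "scale"
```

Because the thresholds are fixed before any results exist, the review meeting applies a rule rather than negotiating one after the fact.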

Where Do You Start? A Low-Risk Pathway to Prove ROI

Step 1: Pick a Workflow, Not a Technology

Step 2: Write an ROI Hypothesis

Step 3: Create the Baseline

Step 4: Set Decision Rules

How AI is Used in Business Workflows—and What to Control

| Use case | AI application examples | Governance requirements |
|---|---|---|
| Adverse Event Processing | Narrative ingestion, coding, signal detection | ALCOA+ data integrity, audit trail immutability, full traceability of all AI actions, validated source capture, human review checkpoints |
| Regulatory Submission Support | CTD generation, synopsis drafting, IMPD support | Change control on templates, quality review approval, validation of references, role-based access controls, documented sign-off workflow |
| Safety Monitoring & Signal Detection | Real-time adverse event pattern recognition, trend monitoring | Quantified performance metrics, false positive monitoring, bias testing, HITL escalation rules, ongoing model monitoring with periodic re-validation |
| Quality Investigation Reports | Root cause analysis drafting, trending analysis | Human investigator sign-off, editable AI-suggested content, controlled prompts, source traceability, audit trail of edits and approvals |
| Drafting | Emails, policies, proposals | Templates, approval rules, prompt versioning |
| Compliance Checks | Automated document compliance reviews, deviation detection | Defined rulesets, human compliance review for exceptions, validated checklists, version-controlled requirements library, audit-ready evidence capture |
| Training & Enablement | Onboarding material creation, SOP summarisation | Content review and approval, controlled knowledge sources, avoidance of hallucinations via grounded retrieval, periodic refresh and re-approval |
| Analytics & Reporting | KPI dashboards, trend summaries, executive reporting | Validated calculations, consistent definitions, human review of insights, governance of data sources, controlled access and audit trails |
| Knowledge Management | Searchable SOP/QMS retrieval, FAQ copilots | Controlled document sources, permissioning, citation requirements, content lifecycle management, periodic validation of knowledge accuracy |

WHY THIS MATTERS IN REGULATED ENVIRONMENTS

In pharmaceutical contexts, a checklist like the one above is not optional. It is foundational. I believe regulatory inspectors will increasingly scrutinise AI implementations.

The difference between inspection success and enforcement action hinges on whether your organisation can demonstrate:

✓ Pre-deployment governance frameworks (not retrospective risk assessment)

✓ Baseline and monitoring data proving consistent performance

✓ Documented human oversight for safety-critical decisions

✓ Change control procedures for model updates, training data, or prompt changes

✓ Clear accountability ownership (named individuals, not committees)

Generic AI pilots that lack these controls create two problems:

1. You cannot prove value (measurement failure)

2. You cannot defend decisions (compliance failure)

An AI implementation in pharmacovigilance that fails both tests is worse than no implementation at all: it creates regulatory exposure and patient-safety risks.

The discipline outlined in this article prevents both outcomes.

Why AI Projects Don’t Deliver ROI: The Repeatable Root Causes

- “Use Case” is Actually a Vague Wish – Many pilots begin with statements like “use AI to improve customer service” rather than defined decisions and metrics. Without specificity, success cannot be measured.

- Pilot Theatre – The proof-of-concept works in a demo. Nobody planned for deployment, integration, or adoption. Flashy pilots that never escape the lab are the kind of project the 95% figure describes.

- Data and Process Reality Are Ignored – If inputs are messy and process steps are inconsistent, outputs will not scale. AI systems amplify the characteristics of the process they sit in, good and bad. Successful implementations, however, often pair the AI with workflow redesign and organisational change management, which suggests that AI combined with process re-engineering can transform a broken workflow rather than merely amplify it.

- Costs Aren’t Counted Honestly – Budgets capture licensing and development but miss engineering time, security reviews, change management, and evaluation effort. The true cost is often 2 to 3 times the visible budget.

- No Evaluation Harness – Without a test set, error taxonomy, and acceptance thresholds, value cannot be proven.

- Adoption is Assumed – People do not trust the tool, do not know when to use it, or find that it does not fit their workflow.

- Risk Controls Arrive Late – Privacy, security, and compliance reviews must occur at project initiation, not as final gates. In healthcare and pharmaceutical contexts, this requirement is not optional.
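The “No Evaluation Harness” point in the list above names three parts: a test set, an error taxonomy, and acceptance thresholds. The sketch below shows one way these could fit together in Python. The error categories, the threshold values, and the shape of the reviewed results are assumptions made for illustration, not a prescribed or validated design.

```python
from collections import Counter

# Hypothetical acceptance thresholds, agreed before the pilot starts.
THRESHOLDS = {"min_accuracy": 0.90, "max_critical_errors": 0}

# Hypothetical error taxonomy: category -> whether the error is critical.
ERROR_TAXONOMY = {"wrong_code": True, "missing_field": False, "formatting": False}


def evaluate(results):
    """Score a reviewed test set.

    results: list of (case_id, error_category or None), where None means
    the human reviewer judged the output correct.
    """
    if not results:
        raise ValueError("test set is empty")
    errors = Counter(cat for _, cat in results if cat is not None)
    unknown = set(errors) - set(ERROR_TAXONOMY)
    if unknown:
        raise ValueError(f"errors outside the taxonomy: {sorted(unknown)}")
    correct = len(results) - sum(errors.values())
    accuracy = correct / len(results)
    critical = sum(n for cat, n in errors.items() if ERROR_TAXONOMY[cat])
    passed = (accuracy >= THRESHOLDS["min_accuracy"]
              and critical <= THRESHOLDS["max_critical_errors"])
    return {"accuracy": accuracy, "errors": dict(errors),
            "critical": critical, "passed": passed}


# Assumed example: 20 reviewed cases, two with non-critical errors.
reviewed = [(i, None) for i in range(18)] + [(18, "formatting"), (19, "missing_field")]
report = evaluate(reviewed)
print(report)
```

Rejecting error labels that fall outside the taxonomy is a deliberate choice in this sketch: it forces reviewers to extend the taxonomy explicitly rather than let uncategorised failures pass unnoticed.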

Practical Controls Checklist: Turn AI Effort into Measurable ROI

Ethics, Privacy, and Practical Caveats

Efficiency gains do not justify over-automation. In pharmaceutical and pharmacovigilance contexts, all decisions affecting product quality, adverse event assessment, regulatory compliance, or patient safety require human expert judgment with documented oversight—not merely ‘high-impact’ decisions.

Partial automation of individual process steps may be appropriate. End-to-end workflow automation without human validation points, however, creates patient safety risks and regulatory non-compliance. The definition of ‘high-impact’ should default to a conservative interpretation: when in doubt, require human review.

Protect confidential data. Define accountability before deployment. Build review points into workflows where judgment matters.

AI Isn’t Failing—Your ROI Method Is

- The 95% failure rate reflects a crisis of fundamentals, not technology. AI pilots stall because organisations skip the discipline that makes measurement possible: defined hypotheses, documented baselines, named owners, and clear decision rules.

- The fix is straightforward. Define ROI early. Measure properly. Build adoption and controls into the deployment plan. Decide fast—scale, iterate, or stop—based on evidence rather than hope.

- Organisations that treat AI implementation as an engineering discipline rather than an experiment will capture the value that continues to elude the majority.

Common Questions and Answers

What is required to validate AI in GxP environments?

Risk-based validation, ALCOA+ data integrity, performance metrics with confidence intervals, test data independence, audit trails, and documented human oversight procedures.
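To illustrate what “performance metrics with confidence intervals” could look like in practice, the sketch below computes a Wilson score interval for an observed accuracy. The Wilson interval is one common choice for proportions and is an assumption here, not a method this article or any regulator prescribes; the sample counts are invented for the example.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion; z=1.96 is roughly 95% confidence."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# Assumed example: 188 correct outputs in an independent test set of 200 cases.
low, high = wilson_interval(successes=188, n=200)
print(f"observed accuracy {188 / 200:.2f}, approx. 95% interval {low:.3f} to {high:.3f}")
```

Reporting the interval alongside the point estimate makes it visible when a test set is too small to support the claimed performance.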

What is ISO 42001 and why does it matter for pharmaceutical AI?

ISO/IEC 42001:2023 is the voluntary international standard for AI management systems, requiring organizations to document governance, risk management, and ethical oversight of AI.

When should privacy and compliance reviews occur in AI projects?

Privacy, security, and compliance reviews must occur at project initiation, not as final gates; retrofitting controls after deployment creates regulatory exposure in pharmaceutical contexts.

Do you need separate validation for AI vs. traditional software?

Yes—AI systems require additional validation on bias, drift detection, explainability, and retraining protocols; existing medical device regulations are “largely appropriate” but require targeted refinements.

Why do 95% of AI pilot projects fail in enterprises?

Lack of baseline measurement, undefined success metrics, poor adoption planning, and missing human oversight—not technology inadequacy—cause 95% of generative AI pilots to stall.

What is “pilot theatre” in AI projects?

Flashy proof-of-concept demonstrations that never transition to production because deployment, integration, and adoption planning were skipped. Such pilots are among those counted in the 95% figure.

What is an ROI hypothesis in AI implementation?

A clear hypothesis stating: “If we apply X to Y, we expect Z% improvement in metric M within N weeks”—written before deployment, with baseline and guardrail metrics defined.

Why are enterprises losing confidence in LLMs?

Hallucinations, unpredictable drift, lack of determinism, and high failure rates in regulated environments have shifted enterprise focus from generative AI to deterministic automation.

What is the difference between generative AI and deterministic AI?

Generative AI produces probabilistic outputs that can vary from run to run; deterministic AI follows fixed rules and guardrails and produces reproducible results, which is essential for regulated environments.

What is the “AI confidence crisis” in enterprises?

Despite $37B annual spending, 80% of companies report AI implementation failures; governance gaps, measurement problems, and adoption resistance—not technology—drive the crisis.

IMPORTANT DISCLAIMER

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

This framework provides general business guidance for AI implementation.

Before implementing any AI system in organisations subject to TGA regulation, ICH guidelines, ISO 42001, or other GxP compliance requirements, organisations must engage qualified regulatory affairs, quality, and GxP compliance professionals to conduct a comprehensive risk assessment and design appropriate controls.

GxP Vigilance and the authors accept no liability for regulatory non-compliance, patient safety incidents, or inspection findings arising from the framework application without proper expert oversight.