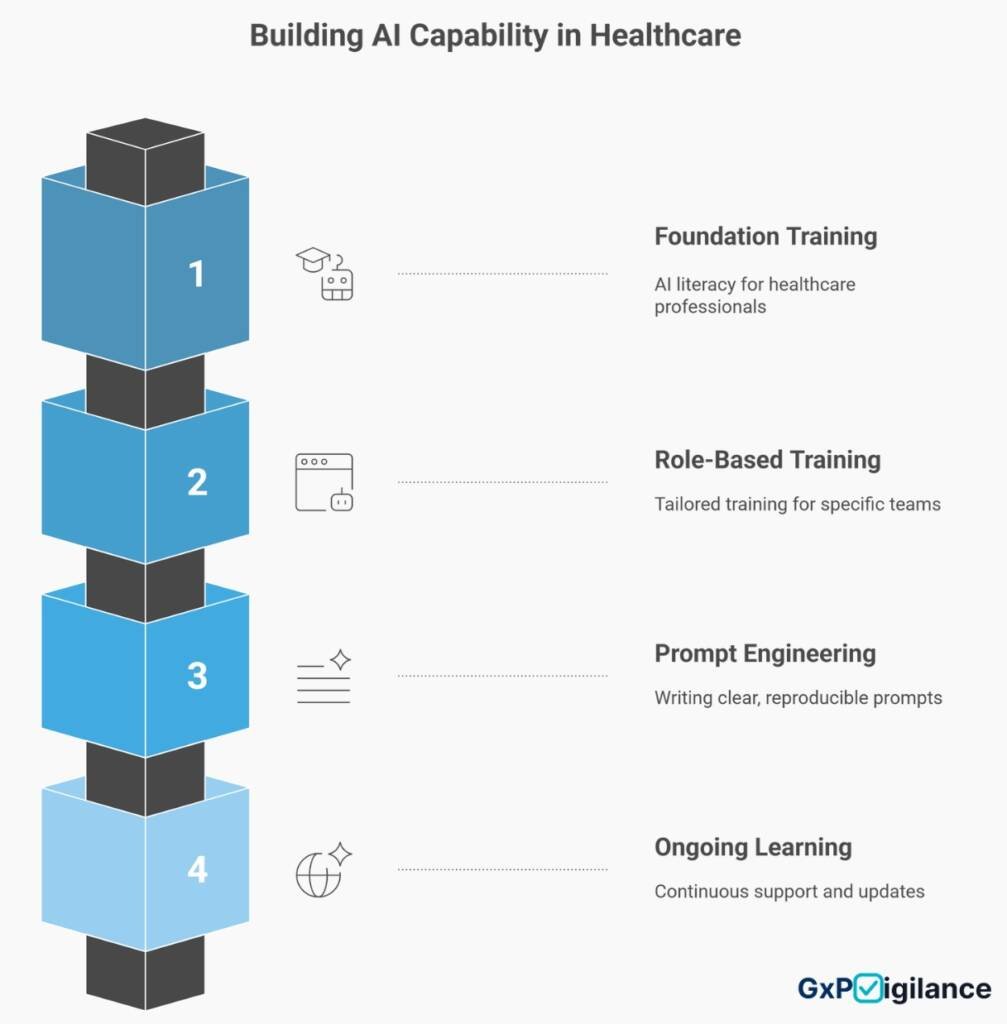

AI Enablement & Capability Building

When Your Team Wants AI But Doesn’t Know Where to Start

Your quality manager asks if they can use ChatGPT to draft CAPA reports. Your regulatory team wonders whether AI could speed up submission writing. Your clinical operations staff see colleagues at other companies using AI tools and worry you’re falling behind.

But nobody knows what’s actually allowed. Nobody understands the risks. Nobody can explain how to use these tools without breaking your data integrity controls or creating audit problems.

The questions keep coming: Can we use this vendor’s AI feature? How do we document AI-assisted work? What happens if the AI makes a mistake? Who’s responsible when things go wrong?

You need your team confident and competent using AI safely within your regulatory constraints—not guessing, not improvising, not creating compliance problems you’ll discover during your next inspection.

I’m Carl Bufe—The AI-Native GxP Practitioner. I don’t deliver generic AI workshops and disappear. I build practical AI capability within your team through role-specific training, clear documentation standards, and ongoing support that keeps everyone aligned to your quality systems and regulatory obligations.

What AI Enablement Actually Means

AI enablement means your people understand:

- Which AI tools fit which tasks — and which applications create unacceptable risk

- How to write effective prompts that produce useful, verifiable outputs

- What documentation standards apply when AI assists with regulated work

- Where human oversight sits and who takes final accountability

- How to spot problems — bias, hallucinations, data leakage, performance drift

- What your SOPs and governance framework require before anyone uses an AI system

This isn’t theoretical knowledge. Your team learns by doing—with your actual data, your real workflows, and your quality management system controls.

What Training Delivers

Immediate Capability

Teams work confidently within approved boundaries. They know what’s allowed, what requires approval, and what’s prohibited. They ask the right questions before adopting new tools.

Risk Awareness

People recognise when AI outputs need verification, when to escalate concerns, and how to document their decisions. They understand the difference between helpful assistance and dangerous shortcuts.

Documentation Competency

Every AI-assisted task includes proper audit trails. Source citations, version control, approval evidence, and change history become automatic habits—not afterthoughts discovered during inspection preparation.

Sustainable Adoption

Your team maintains and improves AI workflows without constant consultant support. They assess new use cases, update documentation as regulations evolve, and train new staff on established practices.

Phase 5: Building Internal AI Champions

For Leaders Ready to Architect Solutions

Some organisations need more than AI users; they need internal champions who can design, build, and validate AI systems within GxP constraints. This advanced track develops that capability.

Agent Building and Workflow Automation

Leaders learn to construct AI agents that execute multi-step workflows: literature surveillance systems that screen, classify, and route articles; CAPA intelligence agents that analyse deviation patterns and suggest root causes; regulatory intelligence systems that monitor authority websites and summarise updates. We cover agent architecture, tool integration, error handling, and validation approaches.

System Design Under GAMP 5

Training includes risk-based categorisation of AI systems, validation lifecycle planning, change control integration, and performance monitoring frameworks. Your champions understand how to build systems that auditors can assess and approve.

Strategic Implementation Planning

Leaders develop the capability to evaluate vendor AI offerings, make build-versus-buy decisions, prioritise use cases by risk and value, and create phased rollout plans that balance innovation with regulatory safety.

Establishing Internal Governance

Champions learn to run approval committees, conduct risk assessments for new AI applications, review validation evidence, and maintain the governance framework as your AI portfolio grows.

This track produces internal experts who lead AI adoption strategically, not opportunistically, while maintaining the quality standards your operations demand.

Who This Training Helps

- Pharmaceutical Companies introducing AI into drug safety, quality, or regulatory operations

- Clinical Research Organisations exploring AI for monitoring, patient selection, or protocol development

- Pharmacovigilance Vendors building team capability to deliver AI-enhanced services competently

- Hospital & Research Teams adopting clinical decision support or research automation tools

- Pharmacy Operations implementing AI assistance for medication review or compounding safety

- Quality & Compliance Teams preparing for AI-related inspection questions

What Makes This Different

- Practitioner-Led, Not Vendor-Driven

I’m a working QPPV and pharmacist, not an AI company selling platforms. Training focuses on safe use within your regulatory reality, not product features.

- Australian TGA Context

Every example, every risk discussion, every documentation standard references local regulatory expectations. Your team learns what Australian inspectors will ask.

- Built On Your Systems

Training uses your actual tools, data, and workflows. Generic corporate AI courses don’t translate to pharmaceutical compliance. This does.

- Capability Transfer, Not Consultant Dependency

You own the knowledge. Your team runs the systems. We provide support and updates, but you control your AI capability independently.

- Measured Outcomes

We track competency through assessments, monitor adoption through usage data, and measure impact through time savings and quality metrics. Training effectiveness is visible, not assumed.