In July 2025, the Pharmaceutical Inspection Co-operation Scheme (PIC/S) and European Medicines Agency (EMA) published Draft Annex 22 for consultation: the first dedicated GMP framework governing how AI and machine learning systems must be validated in pharmaceutical manufacturing. The three-month consultation period closed on October 7, 2025, and the final version is now being developed based on stakeholder feedback.

If you manufacture medicines under GMP and use AI anywhere that affects batch disposition, quality control, or release decisions, PIC/S Annex 22 now defines what “validated” means for your systems.

Quick Reference: PIC/S Annex 22 at a Glance

| Aspect | Summary |
|---|---|
| Primary Standard | PIC/S GMP Annex 22: Artificial Intelligence (July 2025, consultation draft) |
| Regulatory Scope | AI/ML systems used in active substance and medicinal product manufacturing that can affect product quality, patient safety, or data integrity |
| Implementation Timeline | Expected mandatory enforcement 2026–2027 (consultation period through late 2025) |
| Complexity Level | High: requires cross-functional expertise in GMP, data science, quality systems, and validation |
| Common Applications | Automated visual inspection; real-time release testing; predictive maintenance of critical systems; in-process control adjustments |
| Entities Covered | PIC/S; EMA; TGA (as PIC/S member); FDA (aligned principles); ISO 42001; GAMP 5; ICH E6(R3); EU GMP Chapter 4; Annex 11 |

Why Annex 11 Was Never Enough for AI

Short Answer: Annex 11 (Computerised Systems) governed pharmaceutical software using principles designed for deterministic systems—identical inputs producing identical outputs every time. AI doesn’t work that way. Machine learning models find patterns in data and make predictions based on probabilities. Some systems learn continuously from new data, adapting behaviour during operation. This unpredictability created a regulatory gap that Annex 22 now closes by addressing the probabilistic, data-driven nature of AI.

Traditional software validation assumed reproducibility. Machine learning offers no such guarantee by default: the same input can be classified differently depending on the data a model was trained on, its hyperparameters, or whether it is still learning in operation. When your automated system classifies batches or flags deviations, regulators need absolute confidence that decisions are reproducible, explainable, and traceable. Annex 11 lacked the vocabulary to govern “black box” algorithms. PIC/S Annex 22 resolves this by drawing clear lines: some AI architectures are acceptable in critical applications if properly controlled; others are prohibited entirely.

What PIC/S Annex 22 Actually Regulates (and What It Doesn’t)

Annex 22 applies to all AI/ML models in medicinal product manufacturing, but the strictest requirements govern critical applications: systems with direct impact on product quality, patient safety, or data integrity. Critical applications include batch disposition, automated quality control, deviation classification, and release testing. Non-critical applications, such as SOP drafting, can leverage broader AI capabilities with qualified Human-in-the-Loop (HITL) oversight.

The central pivot is “Criticality.” Critical applications require strict controls: Automated Visual Inspection for sterility, Real-time Release Testing, predictive maintenance for sterile barriers, In-Process Control to adjust Critical Process Parameters, automated quality decisions, and deviation classification. Non-critical applications—such as SOP drafting, training materials, project planning, and historical analysis—allow broader AI use but require HITL review.

The decisive test: if AI directly affects whether medicine reaches patients, the strictest controls apply. If human experts must review all outputs before GMP action, lighter controls may suffice.
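One way to make this test operational during an AI inventory is a small classification helper. The sketch below is illustrative only: the `AIUseCase` fields and the three outcome labels are assumptions made for this example, not terms defined by Annex 22.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    name: str
    # True if the output directly affects product quality, patient safety,
    # or data integrity (for example batch disposition or release testing).
    direct_gmp_impact: bool
    # True if a qualified human reviews every output before any GMP action.
    human_reviews_all_outputs: bool


def classify(use_case: AIUseCase) -> str:
    """Apply the decisive test: direct impact means critical."""
    if use_case.direct_gmp_impact:
        return "critical"
    if use_case.human_reviews_all_outputs:
        return "non-critical"
    # Non-critical uses still need HITL review before outputs are relied on.
    return "non-critical, HITL review missing"


# Hypothetical inventory entries for illustration.
inventory = [
    AIUseCase("Automated visual inspection", True, False),
    AIUseCase("SOP drafting assistant", False, True),
    AIUseCase("Historical trend summary", False, False),
]

for item in inventory:
    print(f"{item.name}: {classify(item)}")
```

The third entry is the one to watch in a real inventory: a use case can be non-critical and still fall short because nobody reviews its outputs.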

The Non-Negotiable Rule: Static, Deterministic Models Only

Short Answer: PIC/S Annex 22 prohibits dynamic models that continue learning during operation, probabilistic systems producing variable outputs, and generative AI in critical decision-making. Only static AI models with deterministic outputs—parameters frozen after training, identical inputs producing identical results—are acceptable for critical GMP use.

In scope (acceptable concept):

- Static (“frozen”) models — no learning/adaptation during operation

- Deterministic outputs — identical inputs → identical outputs

- Operated under change/configuration control with defined re-test triggers

Not to be used in critical GMP (per Annex 22 scope statements):

- Dynamic models that continuously/automatically learn in use

- Probabilistic/non-deterministic outputs (same input may not yield the same output)

- Generative AI / LLMs in critical GMP applications

Why this matters: it prevents “moving target” validation risk. Deployed behaviour must remain stable unless formally changed and re-assessed.

If you’ve deployed adaptive AI evolving with production data, that architecture is non-compliant. Fundamental redesign is required before mandatory enforcement.
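Both properties can be checked mechanically. The following is a minimal sketch, assuming a toy threshold classifier whose parameters live in a dictionary; the parameter values, the function names, and the use of a SHA-256 checksum recorded at validation are all assumptions for illustration, not a method prescribed by Annex 22.

```python
import hashlib
import json


def parameter_checksum(params: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the model parameters."""
    canonical = json.dumps(params, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def predict(params: dict, features: list[float]) -> str:
    """Toy static classifier: weighted sum compared with a fixed threshold."""
    score = sum(w * x for w, x in zip(params["weights"], features)) + params["bias"]
    return "reject" if score >= params["threshold"] else "accept"


def is_deterministic(params: dict, features: list[float], repeats: int = 100) -> bool:
    """Run the same input repeatedly and confirm a single distinct output."""
    outputs = {predict(params, features) for _ in range(repeats)}
    return len(outputs) == 1


# Hypothetical parameters, frozen after training.
VALIDATED_PARAMS = {"weights": [0.8, -0.3], "bias": 0.1, "threshold": 0.5}
# Checksum recorded in the validation report at approval time.
VALIDATED_CHECKSUM = parameter_checksum(VALIDATED_PARAMS)


def verify_frozen(deployed_params: dict) -> bool:
    """True only if the deployed parameters match the validated checksum."""
    return parameter_checksum(deployed_params) == VALIDATED_CHECKSUM


print(verify_frozen(VALIDATED_PARAMS))                      # unchanged model
print(verify_frozen({**VALIDATED_PARAMS, "bias": 0.2}))     # modified model
print(is_deterministic(VALIDATED_PARAMS, [1.0, 0.5]))
```

A repeated-inference check like this only samples determinism on the inputs you try, so it supports the validation evidence rather than replacing it.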

What Counts as a “Critical AI Application” Under Annex 22

Short Answer: If AI can stop or release a batch, it’s critical. Applications directly affecting product quality, patient safety, or data integrity require full Annex 22 compliance. Examples include batch disposition decisions, QC result interpretation, automated deviation assessment, stability predictions, and contamination detection. These demand static models, complete validation evidence, and continuous performance monitoring.

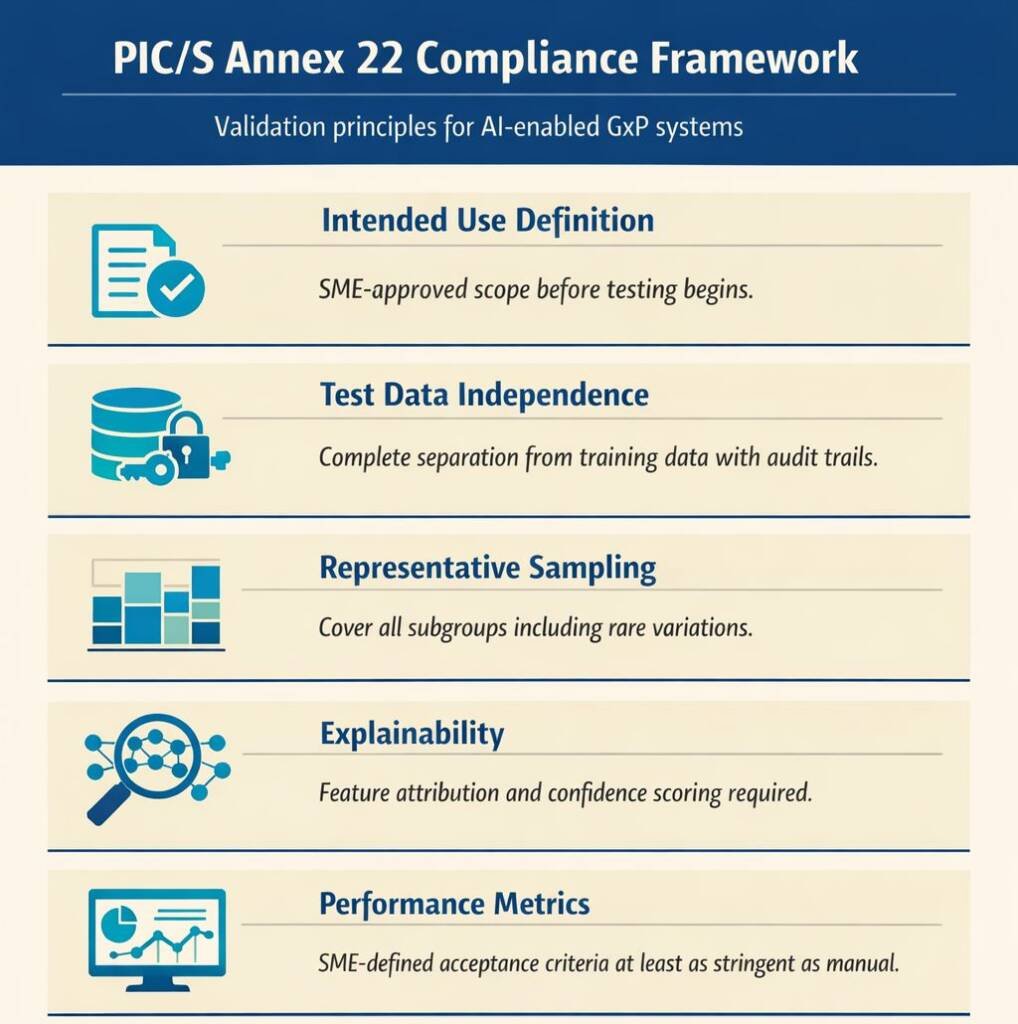

Core Validation Requirements Under Annex 22

Short Answer: Compliance rests on five mandatory pillars:

- SME-approved Intended Use Definition

- Test Data Independence with technical controls

- Representative Sampling covering edge cases

- Explainability and Confidence Scoring

- Performance Metrics at least as stringent as the manual processes being replaced

Together, these create traceable, reproducible AI validation.

Pillar 1: Intended Use Definition

Every model requires comprehensive documentation approved by process SMEs before testing. This specifies: what the model predicts, expected input data and variations, limitations and biases, defined subgroups, and operator responsibilities. This foundation determines validation scope and acceptance criteria—no SME approval means no testing.

Pillar 2: Test Data Independence

Most validation fails here. Test data must be completely independent from training/validation datasets. Technical and procedural controls required: access restrictions with audit trails, four-eyes principle, version control, and independence documentation. If developers see test data, they can optimise to pass tests rather than demonstrate real-world performance.
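A simple technical control is an automated overlap check that runs before validation starts. The sketch below assumes records are flat dictionaries of measured values; the field names and the `find_leakage` helper are hypothetical, and a content hash is only one of several ways to detect shared records.

```python
import hashlib


def fingerprint(record: dict) -> str:
    """Stable hash of a record's content, independent of key order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_leakage(training: list[dict], test: list[dict]) -> list[dict]:
    """Return test records whose content also appears in the training set."""
    seen = {fingerprint(record) for record in training}
    return [record for record in test if fingerprint(record) in seen]


# Hypothetical inspection records for illustration.
training_set = [
    {"batch": "B001", "fill_volume_ml": 10.1},
    {"batch": "B002", "fill_volume_ml": 9.9},
]
test_set = [
    {"batch": "B003", "fill_volume_ml": 10.0},
    {"fill_volume_ml": 9.9, "batch": "B002"},  # same content as a training record
]

leaked = find_leakage(training_set, test_set)
print(f"{len(leaked)} test record(s) also present in training data")
```

A check like this catches accidental reuse of records. It does not replace access restrictions and audit trails, which are what stop developers from seeing the test set in the first place.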

Pillar 3: Representative Sampling and Verified Labelling

Test data must cover the complete input space, not just common scenarios. Use stratified sampling that covers all subgroups, rare variations, boundary conditions, and edge cases. Labels require independent expert verification, validated measurements, laboratory results, and SME cross-validation. A model cannot be validated against incorrectly labelled data.
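Coverage of subgroups can be checked before any performance testing begins. This is a rough sketch, assuming each test sample has been tagged with the subgroup it belongs to; the subgroup names and minimum counts are invented for illustration and would in practice come from the SME-approved intended use.

```python
from collections import Counter


def coverage_gaps(sample_subgroups: list[str], required: dict[str, int]) -> dict[str, int]:
    """Return each under-represented subgroup with the number of samples still needed."""
    counts = Counter(sample_subgroups)
    return {
        subgroup: minimum - counts[subgroup]
        for subgroup, minimum in required.items()
        if counts[subgroup] < minimum
    }


# Hypothetical minimum sample counts per subgroup.
required_minimums = {"clear_vial": 3, "amber_vial": 3, "hairline_crack": 2}

# Subgroup tag of every sample currently in the test set.
test_set_subgroups = [
    "clear_vial", "clear_vial", "clear_vial", "clear_vial",
    "amber_vial", "amber_vial",
]

gaps = coverage_gaps(test_set_subgroups, required_minimums)
print(gaps)
```

Here the common case is over-sampled while the rare defect type has no samples at all, which is the pattern this pillar is meant to catch.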

Pillar 4: Explainability and Confidence Scoring

Critical applications must explain decisions. Feature attribution (SHAP, LIME, heat maps) shows which inputs drove classifications—auditors verify appropriate feature use, not spurious correlations. Confidence scoring flags “undecided” outputs when below thresholds, escalating to human review for situations outside validated experience.
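The confidence-scoring half of this pillar can be sketched in a few lines. The example assumes the model exposes a score per class and that the SME has set a 0.95 threshold; both the threshold value and the `route_decision` name are hypothetical.

```python
def route_decision(class_scores: dict[str, float], threshold: float = 0.95) -> str:
    """Return the top class, or "undecided" when its score is below the threshold."""
    label, confidence = max(class_scores.items(), key=lambda item: item[1])
    return label if confidence >= threshold else "undecided"


# A clear-cut case is decided automatically.
print(route_decision({"accept": 0.99, "reject": 0.01}))

# An ambiguous case is escalated to human review.
print(route_decision({"accept": 0.60, "reject": 0.40}))
```

Anything returned as "undecided" would then follow the documented escalation path to a qualified reviewer rather than triggering a GMP action.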

Pillar 5: Performance Metrics and Acceptance Criteria

SMEs define metrics at least as stringent as replaced processes before testing: confusion matrix, sensitivity, specificity, accuracy, precision, F1 score, and task-specific metrics. If you can’t quantify current manual performance, you can’t validate AI replacement.
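The listed metrics all derive from the four cells of a binary confusion matrix. The sketch below computes them and compares the results with pre-defined acceptance criteria; the counts and the criteria values are made up for illustration, and the code assumes each class has at least one sample so that no denominator is zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int  # defective units correctly rejected
    fp: int  # good units wrongly rejected
    tn: int  # good units correctly accepted
    fn: int  # defective units wrongly accepted


def metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Standard binary classification metrics from confusion matrix counts."""
    sensitivity = cm.tp / (cm.tp + cm.fn)
    specificity = cm.tn / (cm.tn + cm.fp)
    precision = cm.tp / (cm.tp + cm.fp)
    accuracy = (cm.tp + cm.tn) / (cm.tp + cm.fp + cm.tn + cm.fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


def failed_criteria(results: dict[str, float], criteria: dict[str, float]) -> list[str]:
    """Names of metrics that fall below their SME-defined minimum."""
    return [name for name, minimum in criteria.items() if results[name] < minimum]


# Hypothetical test results and acceptance criteria set before testing.
results = metrics(ConfusionMatrix(tp=95, fp=10, tn=890, fn=5))
acceptance = {"sensitivity": 0.97, "specificity": 0.95}

print({name: round(value, 4) for name, value in results.items()})
print("Failed:", failed_criteria(results, acceptance))
```

In this example accuracy is 98.5% but sensitivity misses its criterion, which shows why a single headline metric is not enough when missed defects are the main patient risk.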

Validation Does Not End at Go-Live: Ongoing Operational Controls

Short Answer: Annex 22 mandates continuous controls: change control for modifications, configuration management to detect unauthorised changes, performance monitoring for drift, and input space monitoring to ensure data stays within validated boundaries. These maintain the “validated state” throughout operational life.

- Change Control: Any model, system, or process change must be evaluated to determine whether revalidation is needed. Model retraining, new data sources, process parameter changes, and infrastructure updates all trigger assessment. Decisions not to retest require documented technical justification; “vendor approved” isn’t acceptable.

- Configuration Management: Version control for models, training scripts, and validation artefacts. Access restrictions, audit trails, and checksums ensure production matches the validated configuration.

- Performance Monitoring: Continuous tracking against validated metrics with automated alerts for degradation. Statistical process control detects gradual deterioration. When drift is detected, escalate to QA for investigation and potential revalidation.

- Input Space Monitoring: Verify operational data stays within validated ranges. Statistical measures detect distribution shifts, alerts flag out-of-range inputs, and novel feature combinations are escalated. This prevents “silent failures” when the model encounters situations it was not trained on.
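Input space monitoring in particular lends itself to a short example. The sketch below combines a per-sample range check with a basic mean-shift statistic; the feature names, validated ranges, reference statistics, and the alert limit of three standard errors are all assumptions, and a production system would likely use more robust drift measures.

```python
import math
import statistics


def out_of_range(sample: dict[str, float], validated: dict[str, tuple[float, float]]) -> list[str]:
    """Names of features that are missing or outside their validated range."""
    flagged = []
    for name, (low, high) in validated.items():
        value = sample.get(name)
        if value is None or not (low <= value <= high):
            flagged.append(name)
    return flagged


def mean_shift_z(reference_mean: float, reference_std: float, recent: list[float]) -> float:
    """Shift of the recent mean from the reference mean, in standard errors."""
    standard_error = reference_std / math.sqrt(len(recent))
    return (statistics.fmean(recent) - reference_mean) / standard_error


# Hypothetical ranges observed in the validation data set.
validated_ranges = {"fill_volume_ml": (9.5, 10.5), "line_speed_upm": (100.0, 300.0)}

print(out_of_range({"fill_volume_ml": 10.2, "line_speed_upm": 320.0}, validated_ranges))

# Each value is individually in range, but the distribution has moved.
z = mean_shift_z(reference_mean=10.0, reference_std=0.2, recent=[10.3, 10.4, 10.2, 10.35])
print(f"mean shift = {z:.2f} standard errors, alert = {abs(z) > 3.0}")
```

The second check matters because every recent value can sit inside the validated range while the population as a whole drifts away from what the model was tested on.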

Australian Context: Why TGA Inspectors Will Expect Annex 22 Alignment

Short Answer: While TGA hasn’t formally adopted Annex 22, Australia’s PIC/S membership means inspectors know these expectations and will align practices with PIC/S guidance. Proactive implementation demonstrates leadership, reduces future disruption, and builds inspection-defensible systems.

Australia joined PIC/S in 2013, committing to align with its guidance. TGA inspectors receive PIC/S training and reference standards during inspections. Manufacturers waiting for formal adoption face compressed timelines and the risk of non-conformances.

| Phase | Strategic Implementation Roadmap |
|---|---|
| Months 1–2 (Foundation) | Inventory AI systems across GMP. Classify critical vs. non-critical. Assess gaps against Core Validation Requirements Under Annex 22. Prioritise by patient safety risk. |
| Months 3–4 (Governance) | Establish a cross-functional committee (QA, SMEs, IT, data science, regulatory). Develop AI governance policies. Create validation templates. Define change control triggers. |
| Months 5–6 (Pilot) | Deploy independence controls and explainability tools. Build drift detection. Select low-risk pilot. Work through complete Annex 22 process, document lessons. |
| Months 7–12 (Scale) | Apply lessons to additional systems. Develop role-specific training. Establish monitoring cadences. Prepare inspection evidence. |

Annex 22 aligns with ISO 42001:2023 (AI Management Systems). Pursuing both creates cohesive governance satisfying global expectations.

Common Missteps We’re Already Seeing

- “The vendor validated it”: You cannot delegate validation responsibility. Obtain complete documentation: intended use, test independence confirmation, explainability evidence, performance metrics, and change procedures.

- Test data leakage: Splitting test data from a shared pool without access controls.

- No SME-approved intended use: Starting testing before SMEs approve the scope and limitations.

- Black-box models with no explainability: Deploying complex models without feature attribution or confidence scoring. Inspectors will ask, “Why did it decide this?”—and you must answer.

The Bottom Line—Annex 22 Is an Enabler, Not a Barrier

PIC/S Annex 22 isn’t blocking pharmaceutical AI adoption; it’s creating a framework for sustainable innovation. With clear boundaries and rigorous controls in place, manufacturers can deploy AI confidently while protecting patients and product quality.

The guidance reflects regulatory maturity. Innovation is welcome within controlled parameters.

Organisations starting implementation now will be inspection-ready when Annex 22 becomes mandatory. More importantly, they’ll build reliable, explainable AI systems aligned with pharmaceutical quality principles.

The era of regulated AI in pharmaceutical manufacturing has arrived. What matters now is how quickly you build the governance, processes, and culture that make compliance automatic.

If you’re using or planning AI in GMP environments, the question isn’t whether Annex 22 applies—it’s whether your current controls would stand up in front of an inspector.

Frequently Asked Questions

1. Can I continue using my existing automated visual inspection system after Annex 22 becomes mandatory?

It depends on the system’s architecture and your validation evidence. If your system uses static models (parameters frozen after training), produces deterministic outputs, and you have documented validation evidence meeting the five pillars, it may comply with minimal updates. If the system uses dynamic learning, continuously adapts to new data, or you lack independent test data and explainability evidence, you’ll need substantial modification or replacement. Conduct a gap assessment now: retrofitting compliance is significantly harder than building it in from the start.

2. What counts as “independent” test data if we’re a small manufacturer with limited production history?

Test data independence requires technical and procedural separation from training/validation datasets. For small manufacturers, this might mean: (1) selecting test samples from different time periods than training data, (2) using different equipment or facilities if available, (3) deliberately collecting edge cases and rare variations, or (4) using shared industry datasets if relevant to your process. The critical requirement is preventing anyone with access to training data from seeing test data before validation completes. Document your independence strategy and the rationale for why it represents unbiased model assessment.

3. Does Annex 22 prohibit using ChatGPT or other generative AI anywhere in pharmaceutical manufacturing?

Not entirely. Annex 22 prohibits generative AI in critical applications: systems directly affecting product quality, patient safety, or data integrity (batch release, quality decisions, deviation classification). Generative AI is acceptable in non-critical applications like drafting SOPs, generating training summaries, or project planning, but only with mandatory Human-in-the-Loop review and approval. The human reviewer must have sufficient expertise to identify errors, verify accuracy, and take responsibility for the final output. Never allow generative AI to make or directly influence critical GMP decisions.

4. How do I demonstrate “explainability” for a complex neural network model used in quality control?

Annex 22 doesn’t require full mathematical interpretability of every parameter. It requires: (1) feature attribution showing which inputs most influenced each decision (using tools like SHAP values or attention mechanisms), (2) confidence scoring indicating when the model is uncertain, and (3) SME ability to verify the model uses appropriate features for decision-making. For example, if your model classifies defects, explainability evidence might show the model focuses on relevant visual features (shape, texture, size) rather than spurious correlations (timestamp, operator ID). Document which explainability techniques you’re using and how SMEs verify appropriate feature usage.

5. What happens if my AI model’s performance gradually degrades during routine operation?

This is called “model drift” and Annex 22 requires continuous monitoring to detect it. When performance metrics fall outside validated acceptance criteria: (1) automated alerts must trigger immediate investigation, (2) the system should escalate to quality assurance with documented performance data, (3) quality must evaluate whether continued operation is acceptable or if use must be suspended, (4) root cause investigation determines if retraining, revalidation, or retirement is needed. The validated state cannot drift silently: monitoring and escalation must be documented and functioning. Plan for drift as an expected lifecycle event, not an exception.
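The alerting step can be illustrated with a rolling-window monitor. This is a simplified sketch, assuming you periodically challenge the system with known-defective reference units and record whether each was detected; the class name, window size, acceptance minimum, and status strings are hypothetical.

```python
from collections import deque


class SensitivityMonitor:
    """Tracks rolling sensitivity and flags when it drops below the validated minimum."""

    def __init__(self, acceptance_minimum: float, window: int) -> None:
        self.acceptance_minimum = acceptance_minimum
        self.window = window
        # True means a known-defective unit was correctly detected.
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record(self, detected: bool) -> str:
        """Add one challenge result and return the current monitoring status."""
        self.outcomes.append(detected)
        if len(self.outcomes) < self.window:
            return "collecting"
        sensitivity = sum(self.outcomes) / self.window
        if sensitivity < self.acceptance_minimum:
            return "escalate_to_qa"
        return "ok"


monitor = SensitivityMonitor(acceptance_minimum=0.8, window=5)
challenge_results = [True, True, True, True, True, False, False]
statuses = [monitor.record(result) for result in challenge_results]
print(statuses)
```

The monitor only raises the flag. Whether operation continues, and whether retraining, revalidation, or retirement follows, remains a documented quality decision.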

6. Who counts as a “Subject Matter Expert” qualified to approve the Intended Use Definition?

SMEs must have both process knowledge and sufficient AI literacy. For a quality control AI application, this typically means: (1) deep understanding of the manual process being automated, (2) knowledge of failure modes and edge cases, (3) understanding of how AI models make predictions (not coding skills, but conceptual knowledge), and (4) authority to approve validation documentation. Often this requires a team: a quality expert who understands the process, a data scientist who understands the model, and a validation specialist who ensures GMP compliance. Document each person’s qualifications and specific contribution to the Intended Use approval.

7. Can I use vendor-supplied AI systems, or must I develop models in-house to meet Annex 22?

You can use vendor systems, but you cannot delegate validation responsibility. Under Annex 22, you must obtain from vendors: (1) complete intended use documentation, (2) access to validation evidence including test data independence confirmation, (3) explainability documentation showing how the model makes decisions, (4) performance metrics with acceptance criteria justification, and (5) change control procedures for model updates. Update vendor qualification requirements to include Annex 22 evidence. Many existing vendor contracts won’t provide this level of documentation, so renegotiation or vendor changes may be necessary.

References:

- EU GMP Annex 22 – Artificial Intelligence (Official Draft)

- PIC/S Guide to Good Manufacturing Practice for Medicinal Products (PE 009 Series)

- ICH Q9(R1) – Quality Risk Management

- EudraLex Volume 4 – EU Guidelines on Good Manufacturing Practice

- FDA – Q9(R1) Quality Risk Management

Disclaimer

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

Regulatory requirements, inspection expectations, and system obligations may vary based on jurisdiction, study design, technology, and organisational context. As such, the information presented here should not be relied upon as a substitute for project-specific assessment, validation, or regulatory decision-making.

For guidance tailored to your organisation, systems, or clinical programme, we recommend speaking directly with us or engaging another suitably qualified subject matter expert (SME) to assess your specific needs and risk profile.