The Growing Importance of Pharmacovigilance Literature Monitoring AI

Pharmacovigilance literature monitoring AI has transitioned from experimental pilot to operational necessity. PubMed alone indexes over 1.5 million new citations annually, rendering purely manual screening impractical for most marketing authorisation holders. Meanwhile, regulators expect comprehensive surveillance regardless of publication volume.

This creates a clear operational reality: teams must process more literature with the same resources while maintaining sensitivity to identify every reportable Individual Case Safety Report (ICSR). AI-enabled screening addresses this challenge directly, with validated implementations achieving 97% sensitivity and reducing manual workload by 40-50%.

However, efficiency gains mean nothing if they compromise compliance. The critical question facing pharmacovigilance teams in 2025 is not whether to implement AI for pharmacovigilance literature monitoring, but how to do so defensibly—with validation evidence, human oversight, and audit trails that satisfy TGA expectations.

Regulatory Requirements: Australian TGA Perspective

The Therapeutic Goods Administration expects sponsors to maintain systematic literature surveillance as part of their pharmacovigilance obligations. This aligns with EMA Good Pharmacovigilance Practices (GVP) Module VI, which explicitly permits automation provided accuracy and traceability are maintained.

The regulatory expectation centres on outcomes, not methods. Sponsors must demonstrate continuous screening of relevant literature, identification of publications containing reportable adverse events, timely ICSR submission, and complete audit trails documenting screening decisions.

Critically, even when AI accelerates screening, the Marketing Authorisation Holder remains accountable for case identification, data quality, and reporting compliance. Regulators do not prohibit AI—they prohibit inadequate documentation, missed cases, and unvalidated processes.

| Compliance Element | Regulatory Expectation | AI Implementation Requirement |
|---|---|---|
| Search coverage | Comprehensive sources | Documented database selection |
| Screening frequency | Risk-proportionate scheduling | Automated scheduling with logs |
| ICSR identification | All valid cases captured | Sensitivity validation ≥95% |
| Documentation | Complete audit trail | Logged decisions, model versions |

Why Literature Monitoring Challenges Demand AI Solutions

Manual literature screening is notoriously inefficient. Only a small fraction of search results, often less than 5%, yield valid ICSRs. Pharmacovigilance scientists spend most of their screening time reviewing irrelevant publications rather than assessing genuine safety cases.

Teams face interconnected problems: overwhelming volume from thousands of daily publications, low yield despite extensive effort, inconsistent decisions between reviewers, and duplicate records across databases. Under this pressure, overworked teams may narrow their search strategies, which increases the risk of missed cases.

AI addresses these challenges by handling high-volume, repetitive filtering—the task humans perform least consistently—while preserving human expertise for medical judgement where it matters most.

Best Practices for Effective Literature Monitoring

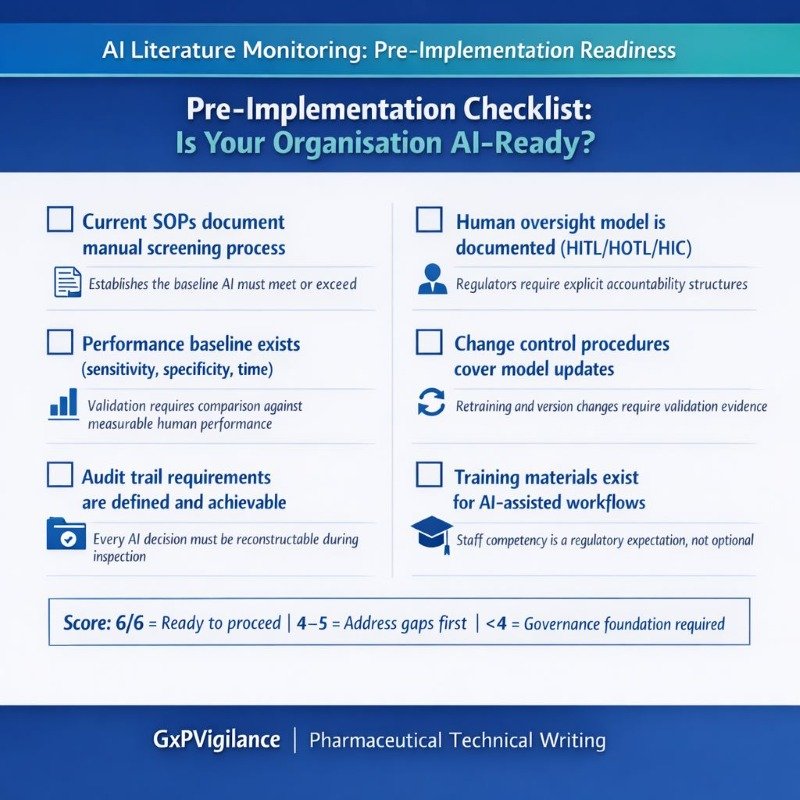

Before implementing AI for pharmacovigilance literature monitoring, organisations must establish robust governance foundations. Technology amplifies existing processes; it cannot compensate for inadequate SOPs.

Essential Governance Elements:

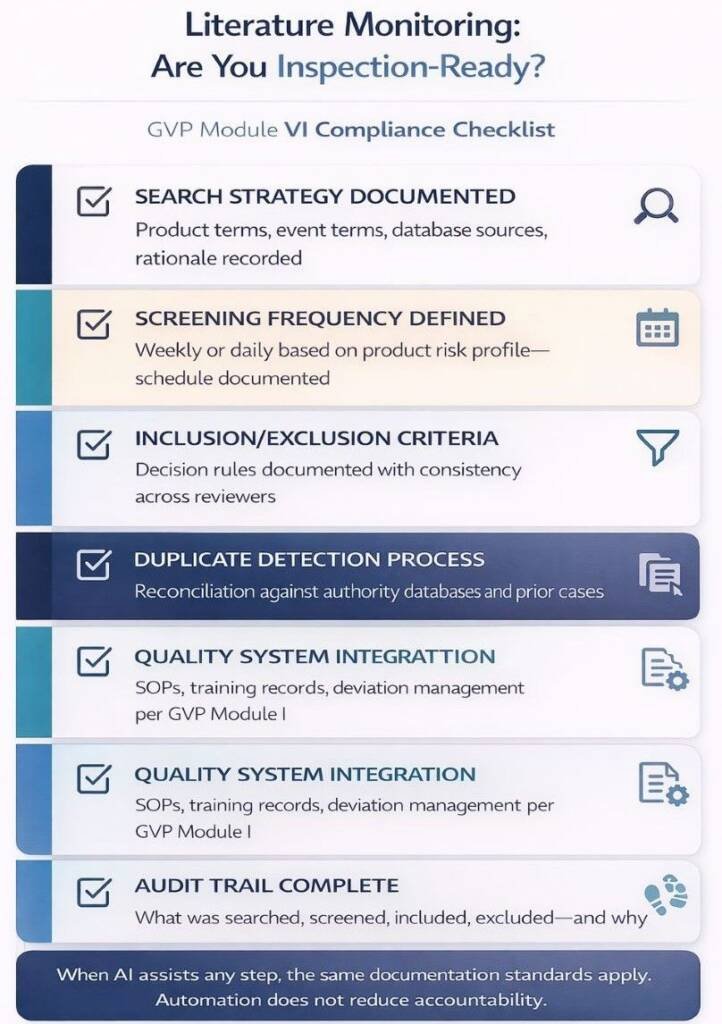

Effective literature monitoring requires documented processes that cover search-strategy definition, frequency scheduling, inclusion/exclusion criteria, duplicate management, and quality system integration. These elements align with GVP Module I quality system expectations.

- Current SOPs document the manual screening process.

- A performance baseline exists (sensitivity, specificity, time).

- Audit trail requirements are defined.

- The human oversight model is documented.

- Change control procedures cover model updates.

- Training materials exist for AI-assisted workflows.

The Current Role of Pharmacovigilance Literature Monitoring AI

AI applications in literature monitoring have matured significantly. Current implementations span several complementary functions requiring distinct validation approaches.

- Automated Relevance Classification: AI models categorise abstracts as case-relevant or irrelevant. A 2024 evaluation of large language models achieved 97% sensitivity with 93% reproducibility. The 67% specificity means approximately one-third of irrelevant articles are still flagged for human review, an acceptable trade-off compared to manually reviewing everything.

- Named Entity Recognition: Sophisticated systems identify specific ICSR elements: identifiable patient, reporter, suspect product, and adverse event, extracting structured data from unstructured narrative.

- MedDRA Coding Support: AI suggests appropriate adverse event codes. In the related task of drug-name coding, the WHODrug Koda system achieved 89% automation with 97% accuracy on 4.8 million entries.

- Hybrid Architectures: Modern implementations combine rules, retrieval-augmented generation (RAG), and LLM ensembles—improving controllability and reducing hallucination risk.

| Implementation | Sensitivity | Specificity | Workload Impact |
|---|---|---|---|
| LLM screening (2024) | 97% | 67% | 70% reduction |
| biologit MLM-AI | 95% | N/A | 40–49% savings |
| Industry signal detection | 85% | 75% | 6-month earlier detection |
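To see how sensitivity and specificity translate into review workload, the arithmetic can be sketched as below. This is a minimal illustration, not a result from any cited study: the inputs (10,000 articles, a 5% yield of relevant articles) are assumptions borrowed from the illustrative figures used elsewhere in this article, and `screening_workload` is a hypothetical helper name.

```python
def screening_workload(total, prevalence, sensitivity, specificity):
    """Estimate article counts after AI triage (illustrative assumptions only)."""
    relevant = round(total * prevalence)
    irrelevant = total - relevant
    true_pos = round(relevant * sensitivity)    # relevant articles correctly flagged
    missed = relevant - true_pos                # relevant articles filtered out (false negatives)
    true_neg = round(irrelevant * specificity)  # irrelevant articles correctly filtered out
    false_pos = irrelevant - true_neg           # irrelevant articles still flagged
    flagged = true_pos + false_pos
    return {
        "flagged_for_review": flagged,
        "filtered_out": true_neg + missed,
        "missed_relevant": missed,
        "workload_reduction": 1 - flagged / total,
    }


# Assumed inputs: 10,000 articles, 5% relevant, 97% sensitivity, 67% specificity
result = screening_workload(10_000, 0.05, 0.97, 0.67)
print(result)
```

Under these assumed inputs, 3,620 of 10,000 articles go to human review (a reduction of about 64%) and 15 relevant articles are filtered out. The second number is why sensitivity, rather than workload saved, is the figure that deserves the most scrutiny during validation.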

Benefits of AI in Pharmacovigilance Literature Monitoring

Validated AI implementations deliver measurable improvements across efficiency, consistency, and capability dimensions. These benefits translate directly into better resource allocation and improved safety outcomes.

Efficiency Gains:

AI-enabled screening compresses triage workload, enabling pharmacovigilance scientists to focus on medical assessment rather than repetitive filtering. Organisations report 50% reductions in literature review cycle times, resulting in faster ICSR identification and more timely regulatory reporting. For an organisation screening 10,000 publications a year, filtering out 70% means 7,000 fewer articles requiring full human review.

Consistency Improvements:

Human reviewers naturally vary in relevance determinations—inter-reviewer agreement is never perfect. AI applies identical criteria to every publication, reducing intra- and inter-operator variability for structured filtering decisions. This consistency proves particularly valuable during staff transitions or when scaling operations.

Scalability During Surges:

Product launches and safety crises generate volume spikes that overwhelm manual capacity. COVID-19 vaccine surveillance demonstrated this clearly—organisations with AI-enabled workflows maintained compliance while manual processes faced significant backlogs. AI systems scale instantly to handle volume increases without proportional resource expansion.

Earlier Signal Detection:

Challenges and Risks of AI Adoption

Regulatory and Audit Concerns:

Technical Challenges:

Technical risks include false positives increasing downstream workload, hallucination risk (where LLMs generate plausible but incorrect information), interpretability limitations with complex models, and concept drift (where performance degrades as publication patterns evolve over time). Each requires specific mitigation strategies documented in your validation approach.

Data Quality Dependencies:

AI systems inherit biases from training data. If training datasets underrepresent certain populations, languages, or publication types, models may miss relevant cases from those categories. This bias risk requires explicit testing across demographic subgroups and therapeutic areas during validation.

Why This Matters:

CIOMS Working Group XIV warns that efficiency-focused automation can compromise the integrity of pharmacovigilance without adequate governance. The risk is not AI itself—it is poorly governed AI deployed without validation evidence or human oversight structures.

Human-in-the-Loop: Why PV Expertise Remains Essential

Regulators universally reject fully autonomous decision-making on safety. AI augments human expertise; it does not replace accountability.

CIOMS Human Oversight Models:

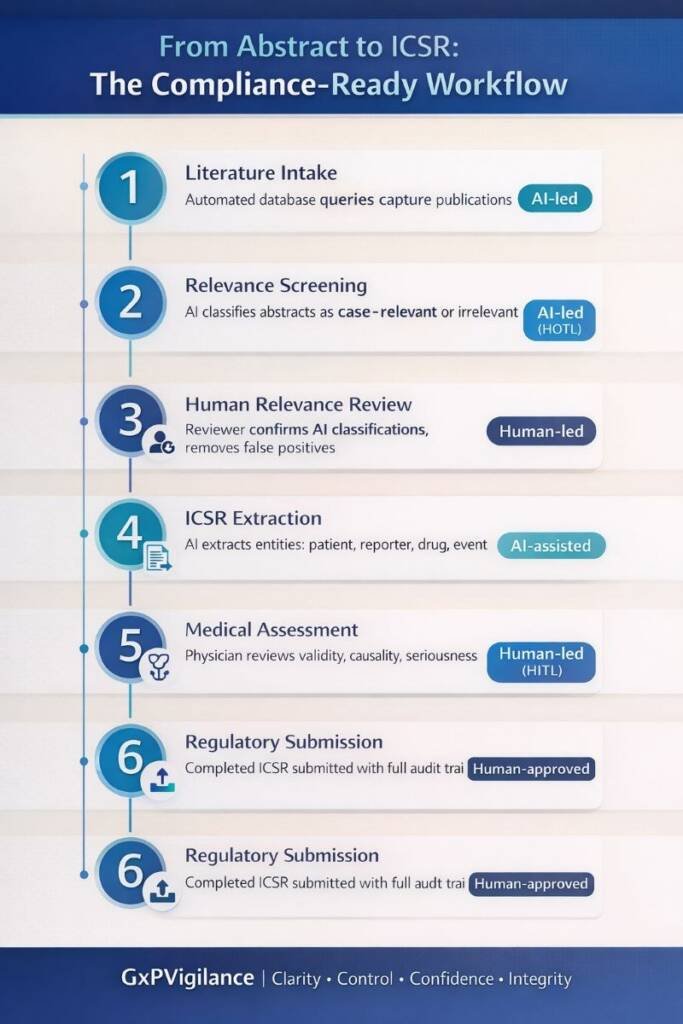

- Human-in-the-Loop (HITL): Humans make final decisions with AI providing recommendations. Mandatory for causality assessment, seriousness determination, and reportability decisions.

- Human-on-the-Loop (HOTL): AI executes decisions autonomously while humans monitor aggregate performance. Appropriate for relevance screening and initial filtering.

- Human-in-Command (HIC): Humans set parameters and monitor system performance. Reserved for low-risk applications like duplicate flagging.

For literature monitoring, a typical implementation combines HOTL for initial AI screening with HITL for ICSR confirmation and medical assessment—preserving efficiency while maintaining human accountability.
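The combination described above can be expressed as a simple task-to-oversight mapping. The sketch below is an assumption about how a team might encode it; the task names and the `requires_human_decision` helper are hypothetical and are not taken from CIOMS or any regulator.

```python
from enum import Enum


class Oversight(Enum):
    HITL = "human-in-the-loop"
    HOTL = "human-on-the-loop"
    HIC = "human-in-command"


# Hypothetical mapping of workflow tasks to oversight models, following the text above
TASK_OVERSIGHT = {
    "relevance_screening": Oversight.HOTL,
    "initial_filtering": Oversight.HOTL,
    "duplicate_flagging": Oversight.HIC,
    "icsr_confirmation": Oversight.HITL,
    "causality_assessment": Oversight.HITL,
    "seriousness_determination": Oversight.HITL,
    "reportability_decision": Oversight.HITL,
}


def requires_human_decision(task: str) -> bool:
    """Return True when a human must make the final decision for this task."""
    # Tasks not in the mapping default to the strictest model (HITL)
    return TASK_OVERSIGHT.get(task, Oversight.HITL) is Oversight.HITL


print(requires_human_decision("causality_assessment"))  # True
print(requires_human_decision("relevance_screening"))   # False
```

Defaulting unmapped tasks to HITL is a deliberate design choice in this sketch: a new or misnamed task should fail towards more human involvement, not less.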

Future Trends: The Evolution of Literature Surveillance

Pharmacovigilance literature monitoring AI continues evolving toward broader data integration. AI-enabled surveillance is expanding beyond journals into real-world evidence sources: electronic health records, registries, and social media patient reports.

The EMA/HMA workplan (2023-2028) commits to developing AI guidance specific to pharmacovigilance. Under the EU AI Act, pharmacovigilance applications that fall into a high-risk category are subject to mandatory transparency and human oversight requirements.

Expect RAG-constrained PV copilots validated against internal SOPs rather than open-ended generative systems. Continuous drift monitoring will become a standard procedure. Bias testing will become an explicit validation requirement.

Conclusion: Balancing Innovation and Regulatory Responsibility

Pharmacovigilance literature monitoring AI delivers genuine efficiency improvements when implemented with appropriate governance. The evidence base (97% sensitivity, 40-50% workload reduction, earlier signal detection) indicates that validated systems can perform well-defined screening tasks at a level comparable to human reviewers.

However, regulatory convergence across the FDA, EMA, CIOMS, and the EU AI Act establishes non-negotiable requirements: risk-based validation, mandatory human oversight, transparent model development, continuous monitoring, and comprehensive documentation.

Key Takeaways:

- AI-assisted literature monitoring is acceptable to regulators when properly validated.

- Human oversight remains mandatory: AI augments rather than replaces judgement.

- Validation should demonstrate sensitivity against a justified target (commonly ≥95%), reported with confidence intervals.

- Audit trails must reconstruct every screening decision.

- Continuous drift monitoring is now expected, not optional.

Organisations that treat pharmacovigilance literature monitoring AI as a productivity aid for expert professionals, rather than a replacement for them, are best placed to pass validation while capturing the efficiency benefits.

Common Questions and Answers

Does the TGA permit AI-assisted literature monitoring?

Yes, provided AI is used within a validated, well-controlled pharmacovigilance system that meets TGA requirements for comprehensive, timely, and auditable literature monitoring. The TGA does not prescribe or prohibit specific tools; leveraging EMA GVP Module VI as a reference, sponsors may use automation if they can demonstrate that AI-assisted processes are at least equivalent to manual methods in completeness, quality, and traceability.

What sensitivity should AI literature screening aim for?

Regulators do not mandate a numeric sensitivity threshold, but many pharmacovigilance teams target sensitivity of at least 95% during validation to minimise false negatives. These targets should be justified with statistically robust validation against gold-standard samples, including confidence intervals and ongoing performance monitoring in routine use.
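A confidence interval for sensitivity can be computed with the Wilson score method, one common choice for binomial proportions. The sketch below is illustrative: the sample (194 of 200 gold-standard relevant articles detected) is an invented example, and the method itself is an assumption, since no regulator cited here prescribes a particular interval.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Invented example: the model detects 194 of 200 known relevant articles (97%)
lower, upper = wilson_interval(194, 200)
print(f"sensitivity 95% CI: {lower:.3f} to {upper:.3f}")
```

In this invented example the point estimate is 97%, yet the lower bound falls below 0.95. A validation sample of 200 relevant articles would therefore not be enough to show that sensitivity exceeds a 95% target with this level of confidence.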

How should human oversight integrate with AI screening workflows?

AI tools typically perform first-line screening, deduplication, and prioritisation, while trained safety staff remain responsible for final relevance decisions, case validation, and medical judgment. Effective designs use Human-on-the-Loop oversight to supervise automated screening and Human-in-the-Loop review for ICSRs and signals, ensuring accountability remains with qualified personnel.

What documentation must AI literature monitoring systems maintain?

Sponsors should maintain detailed records of search strategies, databases queried, search dates, hit lists, screening decisions, and links to any ICSRs or aggregate outputs generated. For AI components, documentation should also include model versions, training or configuration datasets, classification outputs with confidence scores, human overrides, and change-control records so inspectors can reconstruct how each decision was made.
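As a rough sketch of what one logged screening decision might contain, the record below captures the fields listed above. The field names, the `ScreeningDecision` class, and the use of a SHA-256 digest for tamper evidence are all assumptions made for illustration, not a schema required by any regulator.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ScreeningDecision:
    """One auditable screening decision (hypothetical schema)."""
    article_id: str
    search_date: str              # ISO 8601 date of the search run
    database: str
    model_version: str
    ai_label: str                 # e.g. "relevant" or "irrelevant"
    ai_confidence: float
    reviewer_id: Optional[str] = None
    human_label: Optional[str] = None

    @property
    def overridden(self) -> bool:
        # True when a human reviewer disagreed with the AI label
        return self.human_label is not None and self.human_label != self.ai_label

    def to_log_line(self) -> str:
        # Serialise deterministically and attach a digest of the record content
        payload = json.dumps(asdict(self), sort_keys=True)
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        return json.dumps({"record": asdict(self), "sha256": digest}, sort_keys=True)


decision = ScreeningDecision(
    article_id="example-0001",    # placeholder identifier
    search_date="2025-01-06",
    database="PubMed",
    model_version="model-1.0",    # placeholder version string
    ai_label="irrelevant",
    ai_confidence=0.91,
    reviewer_id="reviewer-01",
    human_label="relevant",
)
print(decision.overridden)
print(decision.to_log_line())
```

Keeping the model version and the human override in the same record is what lets an inspector reconstruct a single decision without cross-referencing several systems.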

How does the EU AI Act affect pharmacovigilance AI systems?

The EU AI Act, in force since August 2024, introduces mandatory requirements for high-risk AI systems, including risk management, data governance, transparency, human oversight, and logging. Pharmacovigilance teams must assess whether each AI system falls into a high-risk category (for example, when embedded in a regulated device or directly influencing patient-safety decisions) and, if so, implement the Act’s conformity assessment and lifecycle controls alongside existing GVP requirements.

What happens when AI model performance degrades over time?

AI models are susceptible to concept drift, where changing data patterns reduce accuracy if systems are not actively monitored and maintained. Sponsors should implement periodic performance checks against curated reference datasets, dashboards tracking sensitivity and specificity, and predefined thresholds that trigger investigation, retraining, rollback, or model replacement.
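The idea of predefined thresholds that trigger action can be sketched as a small decision function. The threshold values, the warning margin, and the action names below are hypothetical; each organisation would set and justify its own in its validation documentation.

```python
def drift_action(
    sensitivity: float,
    specificity: float,
    min_sensitivity: float = 0.95,   # assumed acceptance threshold
    min_specificity: float = 0.60,   # assumed acceptance threshold
    warn_margin: float = 0.01,       # assumed early-warning band above the sensitivity floor
) -> str:
    """Map periodic reference-set metrics to a predefined response."""
    if sensitivity < min_sensitivity:
        # Missed cases are the highest-risk failure, so this is checked first
        return "investigate_and_consider_rollback"
    if specificity < min_specificity:
        return "investigate"
    if sensitivity < min_sensitivity + warn_margin:
        return "warn"
    return "ok"


print(drift_action(0.97, 0.67))   # ok
print(drift_action(0.955, 0.67))  # warn
print(drift_action(0.93, 0.67))   # investigate_and_consider_rollback
```

Checking sensitivity before specificity reflects the priority in the text: a drop in specificity costs reviewer time, while a drop in sensitivity risks missed ICSRs.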

Can AI fully automate ICSR extraction from literature?

No. Current practice is to use AI to pre-screen articles, identify potential cases, and populate draft data fields, with human experts validating all ICSRs and conducting medical assessment. Regulatory frameworks still require that qualified personnel make final decisions on case validity, seriousness, causality, expectedness, and reporting, so full end-to-end automation without meaningful human involvement is not acceptable.

How should AI-assisted literature monitoring be validated before routine use?

Validation should compare AI outputs against a gold-standard set of manually reviewed articles, quantifying sensitivity, specificity, and inter-reviewer agreement. The protocol, datasets, acceptance criteria, and results should be documented within the pharmacovigilance quality system, with periodic revalidation scheduled after major model changes or when performance monitoring indicates drift.
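Comparing AI outputs against a gold-standard set reduces to counting agreements and disagreements. The following is a minimal sketch with invented labels; `sensitivity_specificity` is a hypothetical helper, and a real validation would use a much larger, documented sample.

```python
def sensitivity_specificity(gold, predicted):
    """Compute sensitivity and specificity from paired boolean labels.

    gold[i] is True when human reviewers judged article i relevant;
    predicted[i] is True when the AI flagged article i as relevant.
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must be the same length")
    tp = sum(1 for g, p in zip(gold, predicted) if g and p)
    fn = sum(1 for g, p in zip(gold, predicted) if g and not p)
    tn = sum(1 for g, p in zip(gold, predicted) if not g and not p)
    fp = sum(1 for g, p in zip(gold, predicted) if not g and p)
    # Return None for a metric when its denominator is zero
    sens = tp / (tp + fn) if (tp + fn) else None
    spec = tn / (tn + fp) if (tn + fp) else None
    return sens, spec


# Invented labels: 3 relevant and 5 irrelevant articles
gold = [True, True, True, False, False, False, False, False]
pred = [True, True, False, True, False, False, False, False]
sens, spec = sensitivity_specificity(gold, pred)
print(sens, spec)
```

Here the AI misses one of three relevant articles and wrongly flags one of five irrelevant ones, giving a sensitivity of 2/3 and a specificity of 0.8.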

What governance is needed to manage AI risks in pharmacovigilance?

Organisations should integrate AI into their pharmacovigilance system master file and quality management system, defining roles, responsibilities, and decision rights for AI deployment. A cross-functional governance structure (PV, quality, IT, data protection, and, where applicable, EU AI Act compliance) should oversee risk assessment, model change control, incident management, and inspector-readiness for all AI-supported workflows.

How often should AI-assisted literature searches be run and reviewed?

EMA GVP Module VI requires at least weekly monitoring of medical literature for safety information, and TGA guidance similarly expects timely, systematic coverage. AI tools may enable more frequent or near-real-time screening, but sponsors must ensure that human review of relevant outputs occurs within timelines that support prompt ICSR reporting and signal detection obligations.
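A simple check on the search log can confirm that no interval between runs exceeded the weekly expectation. This sketch assumes search runs are recorded as dates; `find_schedule_gaps` and the 7-day default are illustrative choices that reflect the weekly frequency described above.

```python
from datetime import date, timedelta


def find_schedule_gaps(run_dates, max_gap_days=7):
    """Return consecutive run-date pairs separated by more than max_gap_days."""
    ordered = sorted(run_dates)
    limit = timedelta(days=max_gap_days)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]


# Invented search log: the run expected on 20 January is missing
runs = [date(2025, 1, 6), date(2025, 1, 13), date(2025, 1, 27)]
gaps = find_schedule_gaps(runs)
print(gaps)
```

For this invented log, the function reports one gap, between 13 and 27 January, which would need to be explained or remediated in the audit trail.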

References

- European Medicines Agency (EMA) – Guideline on Good Pharmacovigilance Practices (GVP) Module VI – Management and reporting of adverse reactions to medicinal products (Rev 2)

- European Medicines Agency (EMA) – Good pharmacovigilance practices (GVP) – Overview page

- Therapeutic Goods Administration (TGA) – Pharmacovigilance responsibilities of medicine sponsors

- European Union – Regulation (EU) 2024/1689 – EU Artificial Intelligence Act: Article 6 – Classification rules for high‑risk AI systems

- European Union – Regulation (EU) 2024/1689 – EU Artificial Intelligence Act: Annex III – High‑risk AI systems referred to in Article 6(2)

- Therapeutic Goods Administration (TGA) – Artificial intelligence (AI) and medical device software regulation

Disclaimer

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

Regulatory requirements, inspection expectations, and system obligations may vary based on jurisdiction, study design, technology, and organisational context. As such, the information presented here should not be relied upon as a substitute for project-specific assessment, validation, or regulatory decision-making.

For guidance tailored to your organisation, systems, or clinical programme, we recommend speaking directly with us or engaging another suitably qualified subject matter expert (SME) to assess your specific needs and risk profile.
