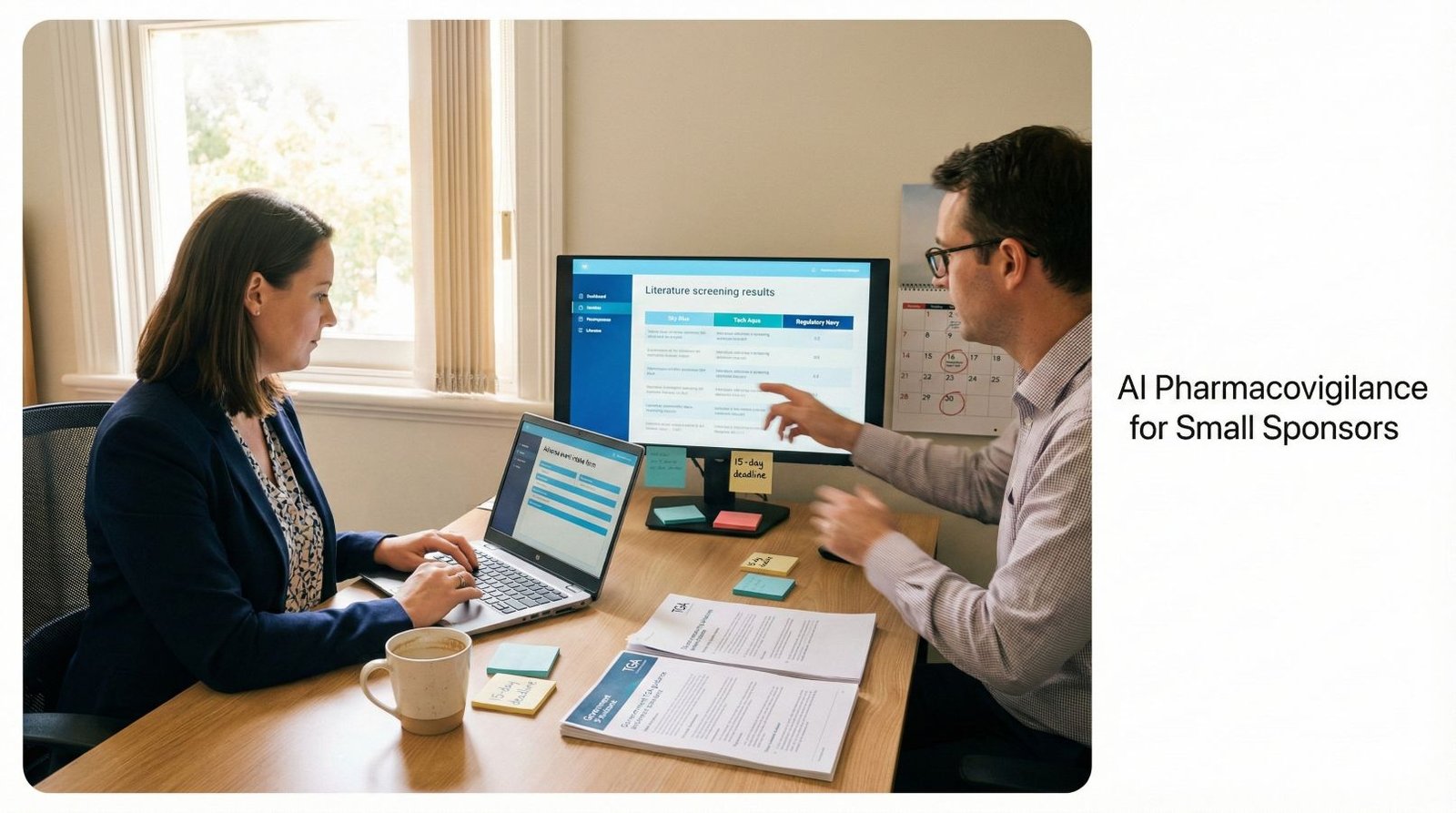

Small pharmaceutical sponsors face an unchanging regulatory reality: TGA pharmacovigilance requirements apply equally to multinationals processing 10,000 cases annually and biotech startups managing 50. The 15-day serious adverse reaction timeline doesn’t account for budget constraints. The 72-hour significant safety issue window doesn’t consider team size.

While large sponsors deploy validated enterprise platforms costing AUD $150,000-500,000 annually, SMEs operate with fractional QPPV support, shared email inboxes, and manual Excel tracking. The result is compliance risk: administrative bottlenecks delay case handling and can cause timeline breaches.

AI pharmacovigilance for small sponsors isn’t about enterprise platforms. It’s about building lean workflows in which AI reduces the manual burden by 20-40% while humans retain full decision-making authority.

Your goal:

- Faster administration,

- Unchanged accountability,

- Sustained inspection readiness.

Regulators assess outcomes and oversight, not platform sophistication. TGA inspectors want evidence that you identified, assessed, and reported safety information on time with documented human review and audit trails. They don’t require expensive technology if your lean system produces compliant results.

Why Small Sponsors Face Disproportionate Pharmacovigilance Burden

Three specific pressures distinguish SME pharmacovigilance from enterprise operations:

- Workload does not scale down with company size. A startup with three ARTG products still monitors global literature, responds to spontaneous reports, prepares periodic updates, and maintains inspection documentation, regardless of revenue. The work scales with regulatory obligation, not resources.

- Manual processes create timeline jeopardy. The 15-day reporting clock starts when anyone in your organisation receives the minimum reportable information: an identifiable patient, an identifiable reporter, a suspect medicine, and an adverse event. If a report sits in a shared inbox for three days before reaching the QPPV, 20% of your compliance window is already gone.

- Unit economics favour large sponsors. At a platform cost of $150,000, processing 100 cases works out to $1,500 per case. A large sponsor processing 5,000 cases at the same platform cost pays $30 per case. This 50x cost disadvantage forces different architectural choices.
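The per-case and time-window figures in the list above take only a few lines of arithmetic to check. This minimal sketch uses only the numbers quoted in the list. The helper names `cost_per_case` and `window_consumed` are illustrative and do not belong to any tool.

```python
def cost_per_case(annual_platform_cost: float, cases_per_year: int) -> float:
    """Spread an annual platform cost evenly across the year's case volume."""
    return annual_platform_cost / cases_per_year


def window_consumed(days_elapsed: float, window_days: float = 15) -> float:
    """Fraction of a reporting window used up before assessment even starts."""
    return days_elapsed / window_days


small = cost_per_case(150_000, 100)    # small sponsor: 100 cases a year
large = cost_per_case(150_000, 5_000)  # large sponsor: 5,000 cases, same platform cost

print(f"Small sponsor: ${small:,.0f} per case")  # Small sponsor: $1,500 per case
print(f"Large sponsor: ${large:,.0f} per case")  # Large sponsor: $30 per case
print(f"Cost ratio: {small / large:.0f}x")       # Cost ratio: 50x
print(f"3 inbox days use {window_consumed(3):.0%} of the 15-day window")  # 3 inbox days use 20% of the 15-day window
```

To compare options for your own organisation, substitute a vendor quote and your actual annual case volume.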

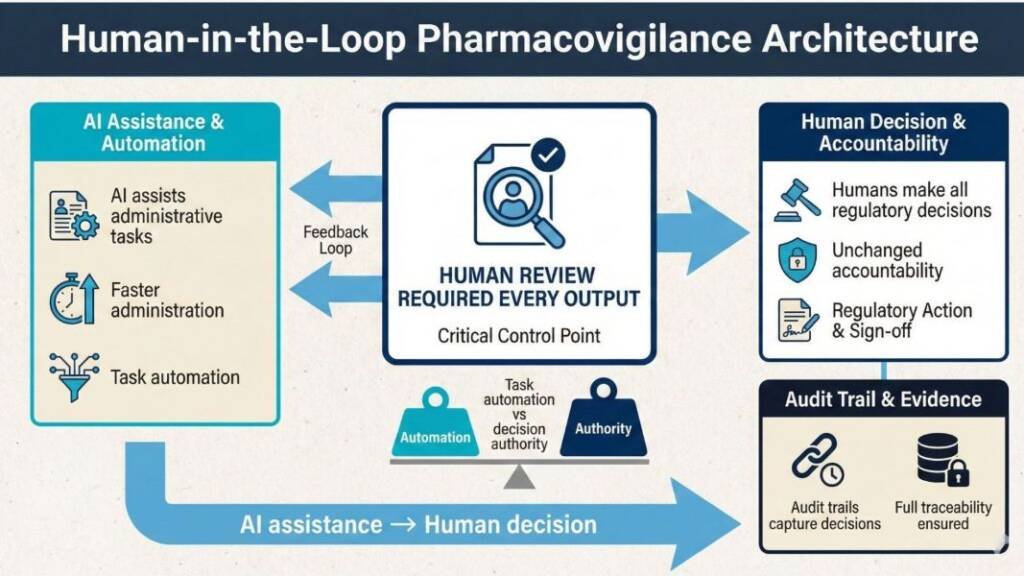

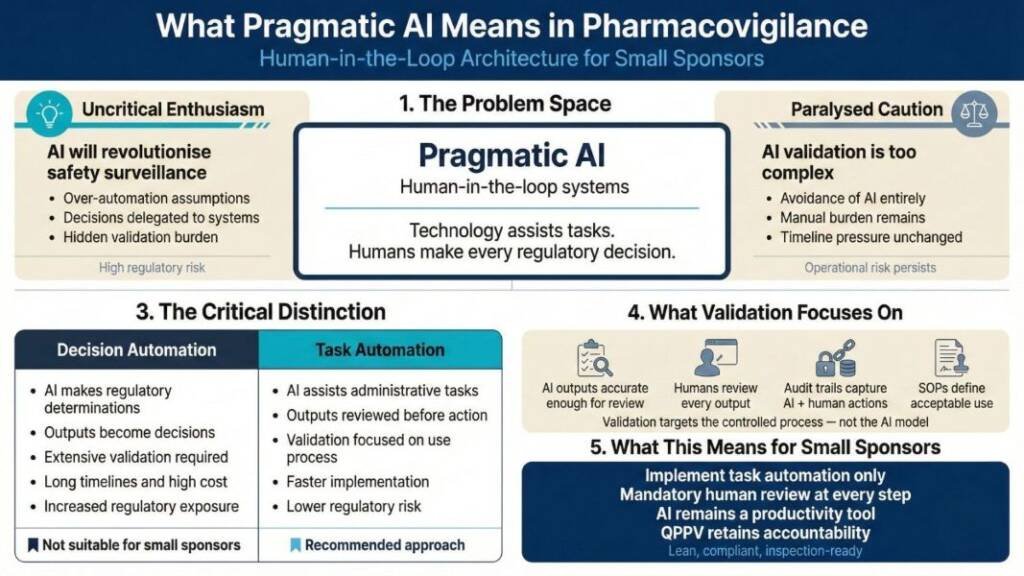

What Pragmatic AI Means: Human-in-the-Loop Architecture

Pharmaceutical AI discussions oscillate between uncritical enthusiasm (“AI will revolutionise safety surveillance”) and paralysed caution (“AI validation is too complex, time-consuming and expensive”). Neither serves small sponsors navigating resource constraints.

Pragmatic AI means human-in-the-loop systems in which technology assists with tasks while humans make every regulatory decision. This architectural choice fundamentally changes validation scope, implementation speed, and regulatory risk.

The critical distinction: Are you automating decisions or tasks?

Decision automation requires extensive validation because system outputs directly become regulatory determinations. An AI that independently classifies event seriousness and triggers TGA reports needs validation proving correct classification across diverse clinical scenarios. That is expensive, lengthy work requiring substantial documentation and testing.

Task automation reduces the validation burden because humans review every output before action. An AI that structures email text into standardised intake fields still needs validation of its extraction accuracy, but human review catches errors before they reach regulatory submissions. The validation focuses on your controlled use process, not the AI’s internal algorithms.

Small sponsors should implement only task automation with mandatory human review. This keeps AI in the role of a productivity tool rather than a medical device that may require ARTG registration. It also maintains clear professional accountability: the QPPV remains responsible for regulatory decisions and uses AI to reduce administrative burden, not to delegate judgment.
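One way to make mandatory human review enforceable rather than aspirational is to treat every AI extraction as a draft. A draft cannot be released until a named reviewer signs off. The sketch below is hypothetical: `IntakeDraft`, `approve`, and `final_fields` are illustrative names, and "Product X" is a placeholder. In practice this control would sit inside your validated safety database or workflow tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class IntakeDraft:
    """Fields extracted by an AI tool: a draft, never a regulatory record."""
    case_id: str
    fields: dict
    reviewed_by: Optional[str] = None
    reviewed_at: Optional[datetime] = None
    corrections: dict = field(default_factory=dict)

    @property
    def approved(self) -> bool:
        return self.reviewed_by is not None


def approve(draft: IntakeDraft, reviewer: str, corrections: Optional[dict] = None) -> None:
    """Record a named human reviewer and any fields they corrected."""
    if not reviewer.strip():
        raise ValueError("a named reviewer is required")
    draft.corrections = dict(corrections or {})
    draft.reviewed_by = reviewer
    draft.reviewed_at = datetime.now(timezone.utc)


def final_fields(draft: IntakeDraft) -> dict:
    """Release fields for data entry only after human review; reviewer corrections win."""
    if not draft.approved:
        raise PermissionError(f"{draft.case_id}: human review required before use")
    return {**draft.fields, **draft.corrections}


draft = IntakeDraft("AU-0001", {"suspect_medicine": "Product X", "event": "rash"})
try:
    final_fields(draft)
except PermissionError as err:
    print(err)  # AU-0001: human review required before use

approve(draft, reviewer="J. Smith", corrections={"event": "urticaria"})
print(final_fields(draft))  # {'suspect_medicine': 'Product X', 'event': 'urticaria'}
```

The AI's original values are preserved alongside the reviewer's corrections, so the record shows both what the tool suggested and what the human decided.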

High-Value, Low-Risk AI Use Cases for SMEs

Creating High-Quality Training Material

Small teams struggle to maintain current, comprehensive training materials for pharmacovigilance staff. Writing SOPs, creating competency assessments, and developing role-specific guidance consume QPPV time that could be better spent on medical review.

Generative AI drafts training material from approved documents, organising and formatting the content for expert review.

Human control: Subject matter experts review all content for accuracy, add organisational context, verify regulatory references, and approve material before use.

Result: 40-60% faster training creation. Teams keep materials up to date, enabling structured onboarding.

Understanding Complex Regulatory Guidance Through Structured Prompting

Regulatory guidance is lengthy and requires product-specific analysis. Small teams struggle to review every update for relevance.

Using structured prompts, AI extracts the relevant parts of a guidance document. Experts pose specific compliance questions; AI summarises the answers and highlights potential gaps. Humans validate the findings and update processes.

Human control: The QPPV or regulatory affairs lead reviews the AI analysis against source documents, validates the accuracy of its interpretation, determines applicability to the organisation’s specific context, makes final implementation decisions, and documents the regulatory assessment.

Result: 30-50% faster regulatory analysis. Teams identify changes efficiently and can plan implementations sooner.
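A structured prompt can be as simple as a fixed template. The template confines the model to the pasted guidance text and makes it cite a source for each answer, which speeds up the QPPV's check against the original document. The template wording and the `build_guidance_prompt` helper below are assumptions for illustration, not a validated prompt.

```python
def build_guidance_prompt(guidance_title: str, excerpt: str, questions: list[str]) -> str:
    """Assemble a structured prompt that confines the model to the pasted text."""
    numbered = [f"{i}. {q}" for i, q in enumerate(questions, start=1)]
    lines = [
        "You are assisting a pharmacovigilance professional.",
        "Answer ONLY from the guidance text between <<< and >>>.",
        "",
        f"Guidance: {guidance_title}",
        "<<<",
        excerpt.strip(),
        ">>>",
        "",
        "For each question, reply with:",
        "- Answer: one or two sentences",
        "- Source: the section heading or a direct quotation",
        '- Status: "stated in text" or "not addressed in text"',
        "Do not infer requirements the text does not state.",
        "",
        "Questions:",
        *numbered,
    ]
    return "\n".join(lines)


prompt = build_guidance_prompt(
    "TGA pharmacovigilance guidance (relevant section)",
    "<paste the section being assessed here>",
    [
        "Does this section change how we record Day 0 for spontaneous reports?",
        "Does it set a minimum frequency for literature searches?",
    ],
)
print(prompt)
```

After the prompt is run in an approved AI tool, the reviewer checks every "Source" line against the original guidance. Interpretation stays with the human.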

Literature Monitoring and Screening

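The TGA requires sponsors to monitor the scientific literature for safety information about their products. In practice, this can mean screening 50-200 abstracts weekly across multiple databases.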

AI screens abstracts for safety terms and product names, summarises the reported events, and flags relevant articles for review.

Human control: After AI flags articles, a qualified reviewer examines them, retrieves full texts, makes reportability assessments, and documents decisions.

Result: 40-60% faster screening and improved coverage through more frequent automated scans.
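The flag-then-review pattern can be shown without any AI at all. This sketch substitutes a plain keyword prefilter: an abstract is flagged only when a product name and a safety term appear together. The product names and the short safety-term list are hypothetical; a real search strategy would be defined in your SOP. Every abstract gets a result, flagged or not, so the screening record stays complete.

```python
import re
from dataclasses import dataclass

# Hypothetical search terms; a real strategy is defined and approved in your SOP.
PRODUCT_TERMS = ["examplomab", "product x"]
SAFETY_TERMS = ["adverse", "toxicity", "overdose", "death", "pregnancy", "hypersensitivity"]


def find_terms(terms: list[str], text: str) -> list[str]:
    """Return the terms that appear in text as whole words, ignoring case."""
    return [t for t in terms if re.search(rf"\b{re.escape(t)}\b", text, re.IGNORECASE)]


@dataclass
class ScreeningResult:
    abstract_id: str
    products: list[str]
    safety_terms: list[str]

    @property
    def flagged(self) -> bool:
        # Flag for human review only when a product and a safety term co-occur.
        return bool(self.products) and bool(self.safety_terms)


def screen(abstracts: dict[str, str]) -> list[ScreeningResult]:
    """Screen every abstract and keep a result for each, flagged or not."""
    return [
        ScreeningResult(aid, find_terms(PRODUCT_TERMS, text), find_terms(SAFETY_TERMS, text))
        for aid, text in abstracts.items()
    ]


results = screen({
    "ABS-1": "Hypersensitivity reactions after Examplomab infusion in two patients.",
    "ABS-2": "Pharmacokinetics of Examplomab in healthy volunteers.",
})
for r in results:
    print(r.abstract_id, "flagged for reviewer" if r.flagged else "logged, not flagged")
```

Keyword matching misses synonyms and misspellings. That is one reason AI-assisted screening and qualified human review are still needed. The prefilter only shows where the human decision point sits.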

Case Narrative Drafting and Medical Terminology Coding

Narrative writing takes 45-90 minutes per case; coding requires MedDRA expertise.

AI drafts case narratives and suggests MedDRA terms based on the reported event text; experts review all outputs.

Human control: After the AI generates initial output, the medical reviewer edits for accuracy, adds clinical interpretation, and verifies against source documents before approval. The qualified coder then reviews AI suggestions and applies judgment for ambiguous cases.

Result: 20-40% faster narrative completion; 15-30% faster coding, especially for new staff.
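To show the shape of a first-draft narrative, this sketch uses a fixed template instead of a generative model. It fills in structured intake fields and marks every missing value so the medical reviewer can see each gap. The field names (`age`, `sex`, `suspect_medicine`, and so on) and the `draft_narrative` helper are hypothetical; real field names would follow your own intake form.

```python
def draft_narrative(case: dict) -> str:
    """Fill a fixed narrative template from structured intake fields.

    Any missing or empty field appears as [TO CONFIRM] so the reviewer sees every gap.
    """
    def value(key: str) -> str:
        v = case.get(key)
        return "[TO CONFIRM]" if v is None or v == "" else str(v)

    return (
        "DRAFT - REQUIRES MEDICAL REVIEW\n"
        f"A {value('age')}-year-old {value('sex')} patient received "
        f"{value('suspect_medicine')} for {value('indication')}. "
        f"On {value('onset_date')}, the patient experienced {value('event_verbatim')}. "
        f"Outcome: {value('outcome')}. Reporter: {value('reporter_type')}."
    )


print(draft_narrative({
    "age": 54,
    "sex": "female",
    "suspect_medicine": "Product X",
    "indication": "hypertension",
    "event_verbatim": "a widespread itchy rash",
    "outcome": "recovering",
    "reporter_type": "pharmacist",
}))
```

In this example the onset date is missing, so the draft reads "On [TO CONFIRM], ...". The reviewer must resolve that gap from source documents before approval.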

Lean System Architectures SMEs Can Implement

- Lean SaaS + AI Upstream: Affordable cloud PV databases (AUD $15,000-40,000 annually) serve as a validated system of record, maintaining regulatory audit trails. Apply AI before data entry for intake triage, narrative drafting, and literature screening. The database handles compliance; AI reduces manual work by feeding it. This separates your validated system (database) from productivity tools (AI), simplifying validation.

- Microsoft 365 Backbone: Configure existing Microsoft tools for pharmacovigilance. SharePoint provides document control. Power Automate handles routing and notifications. Lists track case status and deadlines. Copilot, configured under your organisation’s controls, assists with drafting inside your secure tenant. Most of these tools come with common Microsoft 365 business subscriptions, although Microsoft 365 Copilot typically requires an add-on licence.

- Minimal System + Fractional: Build a minimal tracking system in structured Excel with version control and access logging. Outsource QPPV oversight. Use general-purpose AI (e.g., ChatGPT or Claude, ideally on business plans covered by a data processing agreement) with clear SOPs that define permitted use and require mandatory review. This suits very small sponsors (under 50 cases annually) that need maximum flexibility.

Critical principle: Architecture follows process clarity, not technology trends. Document workflows first—who does what, when, with what evidence. Then identify where AI reduces effort without removing accountability.

Risk-Based Validation for SME Reality

You validate intended use, not the entire AI model.

For AI task assistance with mandatory human review, validation demonstrates:

- Tool outputs are accurate enough that human review can reliably catch the remaining errors.

- Humans review every output before action.

- Audit trails capture AI contribution and human decisions.

- SOPs define acceptable use and verification requirements.

Manageable validation for small teams:

- SOP updates documenting where AI is used, what it does, and who reviews

- User instructions showing correct use and verification

- Quarterly sampling where QPPV reviews random AI outputs against source documents

- Change control for tool switches or workflow modifications

You’re validating your controlled use process, not AI algorithms. Auditors expect control and evidence proportionate to risk.
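The quarterly sampling step listed above is easy to make reproducible. Draw the random sample with a recorded seed so an auditor can regenerate exactly the same selection. Then report the share of sampled outputs where the QPPV found a discrepancy against source. The sample size, seed, ID format, and helper names below are hypothetical. Acceptable discrepancy thresholds belong in your SOP.

```python
import random


def draw_quarterly_sample(output_ids: list[str], sample_size: int, seed: int) -> list[str]:
    """Randomly select AI outputs for QPPV re-review against source documents.

    Recording the seed lets an auditor regenerate exactly the same selection.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(output_ids, min(sample_size, len(output_ids))))


def discrepancy_rate(review_results: dict[str, bool]) -> float:
    """Share of sampled outputs the QPPV did NOT confirm as matching the source."""
    if not review_results:
        return 0.0
    mismatches = sum(1 for matched in review_results.values() if not matched)
    return mismatches / len(review_results)


# Hypothetical quarter: 240 AI-assisted outputs, 20 sampled, seed logged in the record.
quarter_ids = [f"AI-{n:04d}" for n in range(1, 241)]
sample = draw_quarterly_sample(quarter_ids, sample_size=20, seed=20250401)

# The QPPV records True where the AI output matched the source; one mismatch here.
results = {output_id: True for output_id in sample}
results[sample[0]] = False
print(f"Discrepancy rate: {discrepancy_rate(results):.0%}")  # Discrepancy rate: 5%
```

Storing the seed, the sampled IDs, and each review outcome in the quarterly record gives inspectors evidence of ongoing oversight without any model-level validation.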

Common Mistakes to Avoid

| Risk Area | Why This Creates Regulatory Risk |
|---|---|
| Shadow AI use | Staff who use tools like ChatGPT without SOPs, oversight, or documentation create uncontrolled processes. Every AI tool must have a defined scope, procedures, and audit trails. |
| Treating AI outputs as decisions | Submitting reports to the TGA based solely on AI flags (e.g., “serious”) automates regulatory decision-making without validation. |
| Missing audit trails | Auditors will ask how reportability was determined. Without records showing the AI output, the human review, and the final decision, sponsors cannot demonstrate control or compliance. |
| Automating required judgment | The TGA expects qualified professionals to assess causality, seriousness, and reportability. These medical and regulatory judgments cannot be delegated to AI systems. |
| Shared inboxes without logging | The 15-day reporting clock starts when minimum reportable information is received. Without logged receipt timestamps, sponsors cannot evidence Day 0 during inspection. |
| Replacing oversight instead of supporting it | The most common failure pattern is technology that displaces expert oversight instead of supporting it. Lean AI means better tools in expert hands, not the replacement of expertise. |
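An audit trail entry that answers an inspector's questions needs only a handful of fields: the logged Day 0, what the AI suggested, who reviewed it, and what was decided and why. The sketch below is hypothetical (`AuditEntry` and the tool name are illustrative). It assumes the 15-day window is counted in calendar days with the receipt date as Day 0, so confirm the counting convention in your SOP. A frozen dataclass prevents accidental edits in memory, but it is not a tamper-evident store. Your validated system of record still has to provide that.

```python
import json
from dataclasses import dataclass, asdict
from datetime import date, timedelta

# Assumption: serious reports are due 15 calendar days after Day 0 (receipt date = Day 0).
REPORTING_WINDOW_DAYS = 15


@dataclass(frozen=True)
class AuditEntry:
    case_id: str
    day0: date            # logged date minimum reportable information was received
    ai_tool: str          # tool name and version that produced the suggestion
    ai_output: str        # the AI suggestion, stored verbatim
    reviewer: str         # qualified person who made the decision
    human_decision: str   # the decision actually taken
    rationale: str        # reasoning, including any disagreement with the AI output

    @property
    def report_due(self) -> date:
        return self.day0 + timedelta(days=REPORTING_WINDOW_DAYS)

    def to_json(self) -> str:
        record = asdict(self)
        record["day0"] = self.day0.isoformat()  # dates are not JSON-native
        record["report_due"] = self.report_due.isoformat()
        return json.dumps(record)


entry = AuditEntry(
    case_id="AU-0001",
    day0=date(2025, 3, 3),
    ai_tool="intake-assistant v1.2 (hypothetical)",
    ai_output="Suggested seriousness: non-serious",
    reviewer="Dr A. Reviewer",
    human_decision="Serious (hospitalisation)",
    rationale="Source email confirms overnight admission; AI suggestion overridden.",
)
print(entry.report_due)  # 2025-03-18
print(entry.to_json())
```

Recording the AI output next to the human decision is what lets a sponsor show that the reviewer, not the tool, determined reportability. This holds even when the reviewer overrides the suggestion, as in this example.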

Your Practical Next Steps

- Document current workflows. Map how adverse events arrive, who reviews them, what decisions get made, and what gets recorded. Identify manual tasks consuming disproportionate time.

- Select one pilot workflow. Literature screening or regulatory guidance analysis offers clear boundaries, low risk, and measurable savings.

- Implement with mandatory review. Configure your tool, document procedures in SOPs, require qualified reviewers to verify outputs, and maintain logs showing AI contribution and human decisions.

- Monitor and measure. Track time savings, error rates, and reviewer feedback. After 3-6 months, assess whether the approach works.

- Scale deliberately. Add AI to new workflows only after proving the approach, maintaining oversight, and keeping validation proportionate to risk.

Small sponsors can implement AI pharmacovigilance safely. Focus on human-in-the-loop task automation, risk-based validation, and lean architectures. You need clear processes, appropriate oversight, and documentation that proves you control the technology.

Pharmacovigilance maturity isn’t measured by platform sophistication. It’s measured by promptly identifying safety information, assessing it competently, reporting it accurately, and maintaining evidence that you did all three. Lean AI helps small sponsors achieve that standard without drowning in manual work.

Common Questions and Answers

What is lean AI pharmacovigilance for small pharmaceutical sponsors?

Lean AI pharmacovigilance for small sponsors means using human-in-the-loop automation to streamline drug safety tasks without building expensive, fully custom AI platforms. It focuses on simple, modular tools that reduce workload while staying compliant with TGA expectations and EU GVP guidance.

Do small sponsors in Australia need a fully validated AI system to use AI in pharmacovigilance?

No. Small sponsors using AI only for task support with mandatory human review validate the controlled workflow, not the underlying AI model. Full, extensive validation is needed only when AI makes autonomous regulatory decisions, which lean approaches deliberately avoid.

Is AI-assisted pharmacovigilance acceptable to the TGA and other regulators for SME sponsors?

Regulators can accept AI-assisted pharmacovigilance provided that qualified humans make the final decisions, controls are clearly defined, and outcomes are reliable. Inspectors look for documented procedures, oversight, audit trails, and evidence that the AI-supported process works as intended.

Which pharmacovigilance tasks are best suited to lean AI and human-in-the-loop automation?

The best candidates are high-volume, repetitive tasks such as adverse event intake triage, email structuring, literature screening, and first-draft narrative writing. In these workflows AI prepares structured outputs that safety staff and the QPPV then review and finalise.

How can small sponsors use tools like ChatGPT, Claude, or Microsoft Copilot in pharmacovigilance without breaching data privacy?

Use enterprise or business versions under clear data processing agreements, and never enter directly identifiable patient data or commercially confidential information. Work with de-identified or limited datasets inside your secure M365 tenant or similar controlled environment, and document data flows and staff training.

How much workload reduction can small sponsors realistically achieve with lean AI pharmacovigilance systems?

With well-designed workflows, small sponsors can typically expect around 20–40% time savings on intake processing, 40–60% on literature screening, and 20–40% on narrative drafting. Overall, this usually translates to a 20–30% reduction in total pharmacovigilance workload, freeing QPPV time for higher-value clinical assessment. These gains depend on deliberate planning and execution: immediate rewards are unlikely, and the savings have to be built into the system over time.
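Task-level savings shrink when expressed as a share of total workload, because many activities (medical review, signal assessment, periodic reports) are not AI-assisted. The workload shares below are hypothetical and chosen only to illustrate the weighting. Each saving is the midpoint of the range quoted above. Replace both with your own time-tracking data.

```python
# Hypothetical share of total PV workload for each AI-assisted task, paired with an
# assumed saving taken from the midpoint of each range quoted above.
TASKS = {
    "intake processing":    {"share": 0.30, "saving": 0.30},
    "literature screening": {"share": 0.20, "saving": 0.50},
    "narrative drafting":   {"share": 0.20, "saving": 0.30},
    # The remaining 30% (medical review, signal assessment, periodic reports)
    # is assumed to see no saving.
}


def overall_reduction(tasks: dict) -> float:
    """Weight each task-level saving by that task's share of total workload."""
    total_share = sum(t["share"] for t in tasks.values())
    if total_share > 1.0:
        raise ValueError("task shares add up to more than 100% of workload")
    return sum(t["share"] * t["saving"] for t in tasks.values())


print(f"Overall workload reduction: {overall_reduction(TASKS):.0%}")  # Overall workload reduction: 25%
```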

Do small sponsors need a data scientist or large vendor platform to implement AI pharmacovigilance automation?

No. Lean AI pharmacovigilance for SMEs typically uses configurable general-purpose tools (such as ChatGPT, Claude, Copilot, or Power Automate) combined with strong pharmacovigilance expertise, not custom machine learning models. Data scientists are only required if you move into bespoke model development, which is usually unnecessary for small sponsors.

What documentation proves that AI-assisted pharmacovigilance workflows are inspection-ready for the TGA?

You should maintain SOPs defining where AI is used, validation or qualification evidence, audit trails showing AI outputs and human decisions, training records, and change control documentation. Together, these records demonstrate that your human-in-the-loop AI pharmacovigilance system is controlled, reliable, and compliant with TGA expectations.


Disclaimer

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

Regulatory requirements, inspection expectations, and system obligations may vary based on jurisdiction, study design, technology, and organisational context. As such, the information presented here should not be relied upon as a substitute for project-specific assessment, validation, or regulatory decision-making.

For guidance tailored to your organisation, systems, or clinical programme, we recommend speaking directly with us or engaging another suitably qualified subject matter expert (SME) to assess your specific needs and risk profile.