Introduction

When did your monitoring team last anticipate and prevent a protocol deviation?

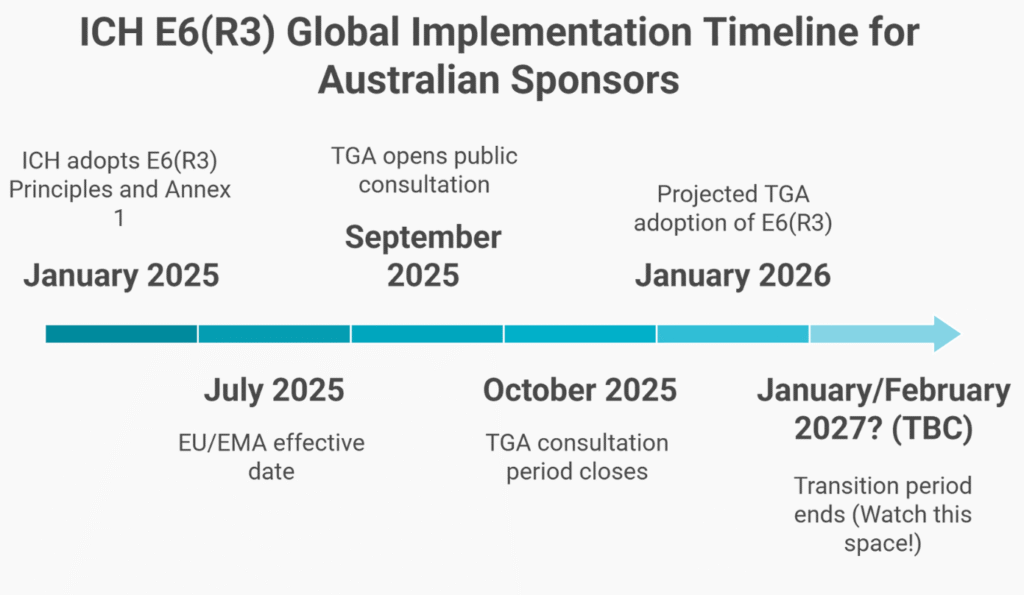

This question goes to the core of ICH E6(R3), which brings the biggest update to Good Clinical Practice (GCP) standards in almost ten years. On January 6, 2025, the International Council for Harmonisation (ICH) adopted E6(R3) Principles and Annex 1. This marks a major change from checking compliance after the fact to managing quality from the beginning.

The timeline is tight. The TGA is publishing Australian-specific annotations through Q3 2025, with full implementation projected for Q1 2026. More urgently, the European Medicines Agency has announced an effective date of July 23, 2025. If you’re running trials with EU sites, your compliance deadline is not 2026. It has already passed.

This guide explains the core changes in E6(R3), what they mean for Australian sponsors in practical terms, and provides implementation frameworks you can apply immediately.

The Regulatory Context

ICH E6 evolved from foundational GCP (E6[R1]), to risk-based but procedural guidance (E6[R2]), to the principle- and annex-driven E6(R3) restructuring.

E6(R3) now includes a Principles document and annexes: Annex 1 covers conventional trials; Annex 2 (in progress) will address decentralized approaches.

Decentralized trials, wearables, and remote monitoring became mainstream after COVID-19, and their rise demanded specific guidance in E6(R3).

Timeline

- January 6, 2025: ICH adoption (Step 4)

- July 23, 2025: EMA/EU implementation (effective immediately)

- Q3 2025: TGA annotations due; implementation projected for early 2026

This staggered timeline means EU sites must comply by July 2025, while Australia has until early 2026—raising inspection risks if you use dual frameworks.
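To see where a multi-region trial ends up on two frameworks at once, the timeline can be expressed as a small date check. This is a rough Python sketch under stated assumptions: `applicable_gcp` and `needs_dual_frameworks` are hypothetical helper names, the EU date is the one given in the timeline above, and the Australian date is left as a parameter because the TGA date is only projected.

```python
from datetime import date
from typing import Iterable, Optional

# EU effective date as stated in the timeline above.
EU_EFFECTIVE = date(2025, 7, 23)


def applicable_gcp(region: str, on_date: date,
                   au_effective: Optional[date] = None) -> str:
    """Return the GCP revision a site is expected to follow on a given date.

    au_effective stays a parameter (default None = not yet in force)
    because the TGA implementation date is projected, not fixed.
    """
    effective_dates = {"EU": EU_EFFECTIVE, "AU": au_effective}
    if region not in effective_dates:
        raise ValueError(f"unknown region: {region}")
    effective = effective_dates[region]
    if effective is not None and on_date >= effective:
        return "E6(R3)"
    return "E6(R2)"


def needs_dual_frameworks(regions: Iterable[str], on_date: date,
                          au_effective: Optional[date] = None) -> bool:
    """True when sites in the same trial fall under different revisions."""
    versions = {applicable_gcp(r, on_date, au_effective) for r in regions}
    return len(versions) > 1
```

For example, `needs_dual_frameworks(["AU", "EU"], date(2025, 12, 1))` returns `True`, which is the situation the paragraph above warns about. Adopting E6(R3) across all sites removes the split regardless of the TGA date.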

The Three Fundamental Shifts

1. Quality by Design Replaces Retrospective Checking

E6(R2) operated on detection: conduct the trial, monitor extensively, find errors, and correct them.

E6(R3) introduces prevention: identify what quality means for this trial before it starts, design controls that prevent errors in critical areas, and monitor proportionately based on risk.

The requirement: Sponsors must incorporate Quality-by-Design by identifying critical-to-quality (CtQ) factors and implementing proactive risk management.

What This Means

Before protocol finalization, conduct a structured CtQ assessment:

- Primary endpoint data: Critical—drives regulatory decisions

- Safety reporting: Critical—patient protection non-negotiable

- Eligibility criteria affecting safety: Critical

- Secondary endpoints supporting labeling: Moderate criticality

- Exploratory endpoints: Low criticality

- Administrative data: Minimal criticality

The monitoring plan flows from this assessment:

- Primary endpoints: Enhanced verification, potentially 100% remote monitoring

- Safety data: Immediate central review, risk-based site visits

- Eligibility: Prospective review before randomization

- Secondary endpoints: Statistical sampling based on risk signals

- Exploratory data: Centralized monitoring only
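The step from CtQ assessment to monitoring plan can be sketched as a simple lookup. The Python below is illustrative only, not a validated tool: `Criticality`, `CTQ_ASSESSMENT`, `MONITORING_BY_CATEGORY`, and `monitoring_plan` are hypothetical names, and the entries simply restate the two lists above. Administrative data has no monitoring approach in the list, so the sketch flags it rather than inventing one.

```python
from enum import Enum


class Criticality(Enum):
    CRITICAL = "critical"
    MODERATE = "moderate"
    LOW = "low"
    MINIMAL = "minimal"


# Most critical first; used only for sorting.
CRITICALITY_ORDER = [
    Criticality.CRITICAL,
    Criticality.MODERATE,
    Criticality.LOW,
    Criticality.MINIMAL,
]

# Hypothetical CtQ assessment for one trial, restating the list above.
CTQ_ASSESSMENT = {
    "primary_endpoint": Criticality.CRITICAL,
    "safety_reporting": Criticality.CRITICAL,
    "eligibility": Criticality.CRITICAL,
    "secondary_endpoint": Criticality.MODERATE,
    "exploratory_endpoint": Criticality.LOW,
    "administrative": Criticality.MINIMAL,
}

# Monitoring approach per data category, as described above.
MONITORING_BY_CATEGORY = {
    "primary_endpoint": "enhanced verification, potentially 100% remote monitoring",
    "safety_reporting": "immediate central review, risk-based site visits",
    "eligibility": "prospective review before randomization",
    "secondary_endpoint": "statistical sampling based on risk signals",
    "exploratory_endpoint": "centralized monitoring only",
}


def monitoring_plan(assessment):
    """Return (category, criticality, approach) rows, most critical first."""
    rows = []
    for category, level in assessment.items():
        # Categories without a defined approach are flagged, not guessed.
        approach = MONITORING_BY_CATEGORY.get(category, "not specified")
        rows.append((category, level, approach))
    rows.sort(key=lambda row: CRITICALITY_ORDER.index(row[1]))
    return rows


for category, level, approach in monitoring_plan(CTQ_ASSESSMENT):
    print(f"{category:22} {level.value:9} {approach}")
```

Keeping the assessment and the monitoring approach in separate tables mirrors the point of the section: the plan is derived from the assessment, so changing a criticality rating should be a deliberate, documented decision rather than an edit to the plan itself.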

Why This Matters: Under E6(R2), sponsors considered 100% source data verification (SDV) to be ‘good practice.’ Under E6(R3), performing 100% SDV on low-risk data demonstrates poor quality management because you have not prioritized resources toward what matters.

2. Data Governance Becomes Mandatory

Section 4—Data Governance—didn’t exist in E6(R2). Now it’s mandatory and explicitly holds sponsors accountable for the entire data lifecycle, regardless of who operates the systems.

What This Includes

Data lifecycle:

- Capture methods defined before trial start

- Source data identification and controls

- Transfer protocols and validation

- Corrections documented with audit trails

- Database lock procedures

- Retention and destruction protocols

Computerized systems:

- Validation status verified by the sponsor

- Audit trail completeness assessed

- User access controls documented

- Metadata management tracked

- System defects reported to the sponsor
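One way to make the computerized-systems checklist auditable is to record, per system, which pieces of sponsor-held evidence exist and list what is missing. The following is a minimal Python sketch: `SystemRecord`, `REQUIRED_EVIDENCE`, and `governance_gaps` are hypothetical names, and the evidence keys simply restate the checklist above.

```python
from dataclasses import dataclass, field

# Evidence the sponsor should hold for each system (from the list above).
REQUIRED_EVIDENCE = (
    "validation_status_verified",
    "audit_trail_assessed",
    "access_controls_documented",
    "metadata_management_tracked",
    "defect_reporting_in_place",
)


@dataclass
class SystemRecord:
    name: str
    operated_by: str  # e.g. "sponsor", "CRO", "vendor"
    evidence: set = field(default_factory=set)


def governance_gaps(system: SystemRecord) -> list:
    """Return the required evidence items the sponsor cannot yet show."""
    return [item for item in REQUIRED_EVIDENCE if item not in system.evidence]


# Hypothetical example: a CRO-operated ePRO platform where the sponsor
# has only confirmed the validation status.
epro = SystemRecord(
    name="ePRO platform",
    operated_by="CRO",
    evidence={"validation_status_verified"},
)
print(governance_gaps(epro))
```

The check is the same no matter who operates the system, which reflects the accountability point above: the evidence has to sit with the sponsor even when a CRO or vendor runs the platform.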

The Failing Scenario

Your CRO deploys a new patient-reported outcome platform. The vendor provides validation documentation. Your team signs off based on the CRO recommendation. During TGA inspection:

- “Show me your fitness-for-purpose assessment.”

- “Where’s your data governance plan?”

- “How did you verify metadata completeness?”

You don’t have answers. The CRO has documents, but the sponsor never established oversight.

Finding: Critical. The sponsor did not maintain ultimate accountability for data integrity.

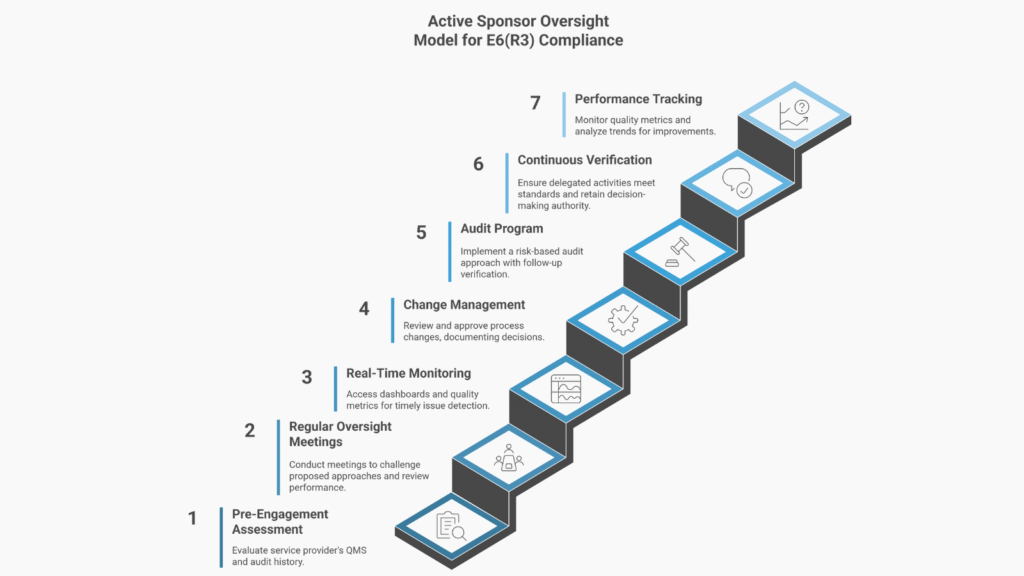

3. Sponsor Oversight Becomes Active

E6(R3) introduces “service providers,” replacing the CRO focus, and makes sponsors responsible for all parties performing trial activities: CROs, labs, imaging centers, mobile health vendors, and site management organizations.

The guideline's position is clear: sponsors may delegate activities to service providers, but they retain ultimate accountability for them.

What Active Oversight Requires

Before engagement:

- Service provider QMS assessment

- Certification and audit history verification

- Review of approaches against trial risk

- Selection rationale documentation

During engagement:

- Regular oversight meetings with documented challenges

- Real-time performance metrics access

- Review and approval of process changes

- Quality signal investigation before issues become systemic

Throughout the lifecycle:

- Continuous verification that activities meet standards

- Documentation proving sponsor knowledge

- Evidence of sponsor decision-making

The old model (signing contracts, receiving reports, and escalating only when issues arise) is passive oversight. E6(R3) expects documented evidence of active governance.

Practical Implications for Australian Sponsors

QMS Transformation Required

Revising your monitoring SOP is not enough: E6(R3) requires a shift from procedure-based compliance to principle-based quality management.

E6(R2) thinking:

- The monitoring SOP defines the visit frequency and the SDV percentage.

- Quality metrics track procedure compliance.

- Training emphasizes following SOPs.

E6(R3) thinking:

- The Risk-Based Quality Management Framework defines CtQ methodology.

- Quality tolerance limits define acceptable variation.

- Monitoring plans are trial-specific, derived from risk.

- Training emphasizes risk-based decision-making.
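A quality tolerance limit is easiest to understand as a threshold on an observed rate. The Python sketch below is an illustration only: `qtl_status` is a hypothetical function, the 5% limit and the counts in the example are invented numbers, and the early-warning band (`warn_fraction`) is an assumption of this sketch rather than something the guideline prescribes.

```python
def qtl_status(events: int, participants: int, limit: float,
               warn_fraction: float = 0.8) -> str:
    """Classify an observed rate against a quality tolerance limit.

    events / participants is the observed rate (for example, eligibility
    deviations per enrolled participant). limit is the predefined QTL.
    warn_fraction sets an earlier warning threshold (an assumption here).
    """
    if participants <= 0:
        raise ValueError("participants must be positive")
    rate = events / participants
    if rate > limit:
        return "breach"
    if rate >= warn_fraction * limit:
        return "warning"
    return "within_limit"


# Hypothetical QTL: no more than 5% of participants with an
# eligibility deviation, checked at 120 enrolled participants.
for deviations in (3, 5, 7):
    print(deviations, qtl_status(deviations, 120, limit=0.05))
```

With these invented numbers, 3 deviations stay within the limit, 5 trigger the warning band, and 7 breach the limit. The warning band matters because it prompts investigation before the limit is crossed, which fits the prevention-first approach described earlier.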

You need to rewrite your QMS, not just update your SOPs. Allow 3-6 months for framework development, process redesign, training, and system updates.

Vendor Agreements Need Overhaul

Every Master Service Agreement requires revision for:

Data governance:

- Who validates systems

- Who verifies fitness-for-purpose

- Audit trail management

- System defect reporting

Active oversight:

- Meeting cadence and documentation

- Performance metrics and reporting frequency

- Sponsor system access

- Escalation triggers and timeframes

Quality alignment:

- The service provider approach must align with the sponsor’s risk assessment.

- CtQ factors drive service provider activities.

- Quality tolerance limits are contractually defined.

The Global Compliance Challenge

Australian sponsors running global trials face an urgent choice: harmonize frameworks or create critical inspection risk now. Waiting is not an option; dual systems invite immediate regulatory scrutiny.

A Phase III trial starting January 2026 with Melbourne and Munich sites faces:

- Australian sites: Could operate under E6(R2) during transition

- EU sites: Must comply with E6(R3) from July 2025

If you postpone implementing E6(R3) until required by the TGA, your EU sites are instantly non-compliant. EMA inspections will uncover inadequate quality management, which can immediately affect submission timelines. Delay directly threatens your global trial success.

Strategic response: Implement E6(R3)-compliant systems by Q2 2025 if operating globally.

What Auditors Will Look For

E6(R2) Inspector Questions (Old)

- “Show me monitoring visit reports.”

- “What percentage of source data was verified?”

- “Are procedures being followed?”

E6(R3) Inspector Questions (New)

- “How did you identify critical-to-quality factors?”

- “Show me the risk assessment justifying your monitoring approach.”

- “How did you verify your CRO’s plan aligned with your CtQ factors?”

- “Where’s your data governance plan for the mobile app?”

- “How do you demonstrate that your oversight was effective, not just procedural?”

The evidence shift:

Before: Compliance = documentation that procedures were followed

After: Compliance = documentation that quality was designed, controlled, and achieved

Conclusion: The 2026 Quality Cliff

ICH E6(R3) represents the most significant shift in clinical trial quality management since GCP was first harmonized in 1996. For Australian sponsors:

- EU sites must comply by July 2025 (already effective)

- TGA implementation projected for Q1 2026 (budget decisions now)

- Global trials require a unified approach.

- Implementation takes a minimum of 6-12 months.

December 2025 marks your budget decision point. If you do not allocate resources for QMS transformation, vendor agreement overhaul, data governance infrastructure, and competency development, you will enter 2026 unprepared.

Organizations that succeed recognize this is not just another guideline update—it is a fundamental reset. They invest in capability development, not just documentation updates, and they build genuine risk-based thinking into their culture.

The question is not whether to implement E6(R3), but whether you implement it strategically now or reactively after inspection findings.

Frequently Asked Questions

Does E6(R3) apply to all Australian clinical trials?

E6(R3) applies to interventional trials of investigational products intended for regulatory submission. Once TGA adopts E6(R3), compliance becomes a condition of approval under CTN and CTA schemes. New trials starting in 2026 will require E6(R3) compliance from the initiation stage.

What’s the biggest implementation challenge?

The biggest challenge is the cultural shift from procedure-following to risk-based thinking. Staff have operated under a “follow the SOP” mindset for years. Now, E6(R3) requires you to conduct risk assessments, design proportionate controls, and defend decisions during inspection. You cannot achieve this through “read and understand” training alone—it requires competency development and practical application.

How do we handle service providers who aren’t E6(R3) ready?

You should work with providers to bring them up to standard before engagement, provide enhanced sponsor oversight to compensate for gaps, or select alternative providers. What you cannot do is delegate and ignore the issue. You retain ultimate accountability regardless of service provider gaps.

What happens if we’re not compliant by the implementation date?

Trials starting after implementation must demonstrate E6(R3) compliance from the outset. If you are not compliant, you risk approval delays, inspection findings that require mid-study remediation, data facing regulatory scrutiny, and a competitive disadvantage compared to sponsors who moved earlier.