Documentation as a Core GMP Control

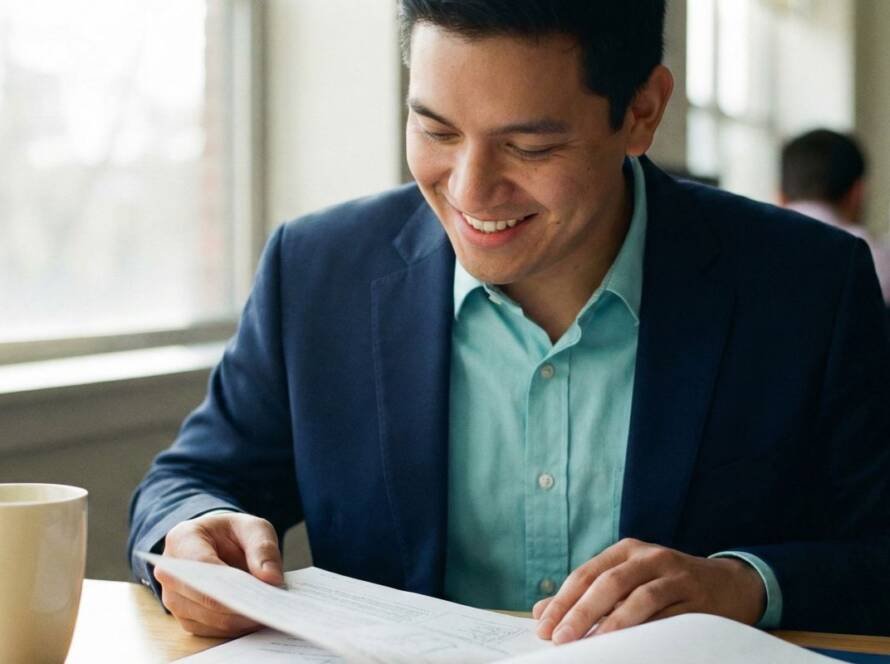

Pharmaceutical documentation defines how products are made, tested, and released. Standard operating procedures, validation protocols, and quality manuals establish accountability across the product lifecycle. They are not administrative overhead—they are regulatory requirements that protect patient safety.

Yet the documentation workload has grown beyond many teams’ capacity. Global regulatory requirements, frequent guidance updates, expanding product portfolios, and the need for consistency across multiple sites have made maintaining controlled documents an operational burden that diverts resources from higher-value quality activities.

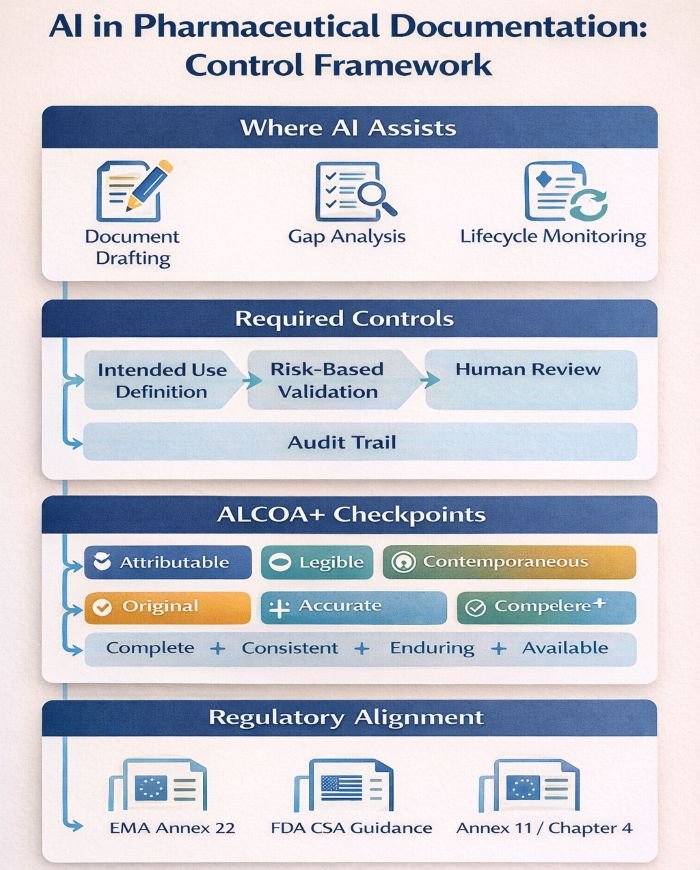

AI in pharmaceutical documentation offers a practical response to this pressure. Generative models can assist with drafting, gap analysis, and lifecycle monitoring, provided they operate within defined controls. The draft EU GMP Annex 22 and FDA's 2025 draft guidance on AI now set out regulators' expectations for this integration, making AI a legitimate tool within the Pharmaceutical Quality System when properly governed.

This article explains how to embed AI in documentation practices while maintaining GMP compliance, human accountability, and audit readiness.

What Does “Embedding AI” Mean in a GxP Environment?

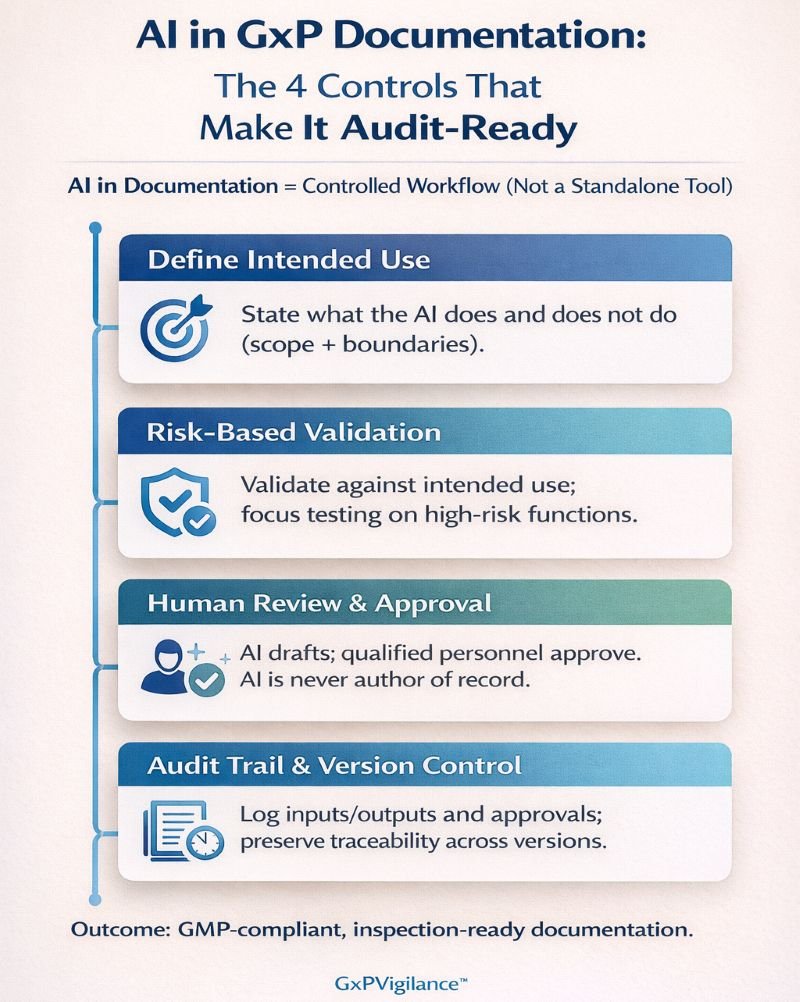

Embedding AI means integrating AI capabilities into existing documentation workflows as a controlled, validated component—not as a standalone tool operating outside quality systems.

AI functions as a supportive assistant. It drafts content, identifies gaps, and flags documents requiring an update. It does not author final procedures, make release decisions, or replace human judgment on regulatory matters.

This distinction matters because regulators assess AI through the same lens as any GxP process: defined intended use, documented controls, human accountability, and traceable records. AI embedded within your quality system inherits the controls that already apply to your documentation practices.

Good Documentation Practice (GDP) principles apply directly. AI outputs require the same review, approval, and version control as manually authored content. The Pharmaceutical Quality System (PQS) provides the governance framework; AI is simply another element within it.

Infographic showing an AI control framework for pharmaceutical documentation with required controls, ALCOA+ checkpoints, and regulatory alignment (EMA Annex 22, FDA CSA, Annex 11/Chapter 4).

What Do Regulators Expect for AI in Documentation?

Regulators are converging on a consistent position: AI may support GxP activities, but responsibility and decision-making remain with qualified personnel, and the system must be governed like any other computerised process—with defined intended use, risk-based assurance, change control, and auditability.

EU Context: Annex 22, Annex 11, and Chapter 4

The European Commission’s draft EU GMP Annex 22: Artificial Intelligence is positioned as additional guidance to Annex 11 for computerised systems where AI models are used in critical manufacturing applications (i.e., with direct impact on patient safety, product quality, or data integrity).

The draft places strong emphasis on control of the model lifecycle (selection, training, validation, monitoring, change control) and on ensuring human review where needed. Importantly, the draft Annex 22 focuses on static AI models (those that do not change behaviour after training), and it sets a conservative boundary for high-criticality use cases that must remain deterministic and tightly controlled.

For documentation specifically, the more directly applicable EU anchors remain:

- Chapter 4 (Documentation)

- Annex 11 (Computerised Systems)

These govern document controls, audit trails, data integrity, and traceable processes. Where AI is used to draft or maintain controlled documentation, AI outputs are treated as draft inputs that only become controlled records through established GDP and PQS controls: review, approval, versioning, and traceable accountability.

US Context: FDA AI Guidance and CSA

FDA’s draft guidance, “Considerations for the Use of Artificial Intelligence To Support Regulatory Decision-Making for Drug and Biological Products,” sets expectations for when AI is used to produce information intended to support regulatory decision-making (safety, effectiveness, or quality). The through-line is transparency of purpose, fit-for-purpose performance, and documentation of the approach and limitations appropriate to risk.

Separately, FDA’s Computer Software Assurance (CSA) guidance reflects a broader reality in inspections: assurance activities should be risk-based and focused on intended use and critical functions, rather than exhaustive testing of every feature. Note: CSA is issued in a medical device context, but its risk-based principles are frequently adopted as good practice when justifying assurance efforts for regulated software. Also note that the FDA has continued to update CSA publications, so teams should cite the specific version used in their rationale.

Practical Regulatory Bottom Line for Documentation AI

- Define intended use (what the AI does—and explicitly does not do).

- Classify risk/criticality by impact (could an AI error influence GMP decisions or data integrity?).

- Maintain human accountability (AI is never the author/approver of record).

- Ensure traceability (inputs, outputs, reviewers, approvals, versions, and model changes are auditable).

- Control change (model/version updates trigger impact assessment and documented assurance activities).
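Most of these controls are organisational. The change-control trigger, however, can be made mechanical. Below is a minimal sketch that assumes a hypothetical `AIToolConfig` record capturing a tool's qualified state: intended use, model version, and criticality. It compares that snapshot with what is actually deployed and lists every difference that would warrant a documented impact assessment. The names and fields are illustrative and do not come from Annex 22 or FDA guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIToolConfig:
    """Hypothetical snapshot of a documentation AI tool's controlled configuration."""
    tool_id: str
    intended_use: str   # approved intended-use statement
    model_version: str  # vendor-supplied or internal model identifier
    gmp_critical: bool  # True if an AI error could influence GMP decisions or data integrity


def change_control_triggers(qualified: AIToolConfig, deployed: AIToolConfig) -> list[str]:
    """List the reasons the deployed tool differs from its qualified state.

    An empty list means the deployment still matches what was qualified;
    any entry should open a documented impact assessment.
    """
    reasons = []
    if deployed.tool_id != qualified.tool_id:
        reasons.append("tool identity differs from qualified record")
    if deployed.model_version != qualified.model_version:
        reasons.append(f"model version changed: {qualified.model_version} -> {deployed.model_version}")
    if deployed.intended_use != qualified.intended_use:
        reasons.append("intended use statement changed")
    if deployed.gmp_critical != qualified.gmp_critical:
        reasons.append("risk classification changed")
    return reasons


if __name__ == "__main__":
    qualified = AIToolConfig("doc-assist", "Drafts non-critical SOPs for SME review", "v1.2", False)
    deployed = AIToolConfig("doc-assist", "Drafts non-critical SOPs for SME review", "v1.3", False)
    print(change_control_triggers(qualified, deployed))
    # prints ['model version changed: v1.2 -> v1.3']
```

The function reports differences rather than blocking deployment. That keeps the decision with the people who own change control.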

How Can AI Support the Documentation Lifecycle?

AI supports the documentation lifecycle in five high-value ways:

- Drafting & authoring: Generates structured first drafts from approved templates and standard terminology, flags vague phrases, and lets SMEs focus on technical accuracy.

- Regulatory alignment (retrieval-augmented generation, RAG): Cross-references drafts against authoritative sources (FDA/EMA/ICH guidance and internal policies) to identify gaps and produce traceable gap reports for compliance review.

- Standardisation & structural control: Enforces mandatory sections, consistent terminology, and approved metadata to reduce document inconsistency across sites—an audit pain point.

- Review, training & usability: Creates summaries, checklists, and job aids to improve understanding without changing approved procedures.

- Lifecycle management & change control: Monitors regulatory updates and flags impacted SOPs, pinpointing sections requiring revision to ensure proactive compliance.
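Of these five uses, structural control is the easiest to check deterministically, with or without AI in the loop. Below is a minimal sketch that makes two assumptions:

- A hypothetical site template's mandatory section titles are listed in `REQUIRED_SECTIONS`.
- Drafts are plain text with one heading per line.

A real check would read headings from whatever document format your document management system uses.

```python
import re

# Hypothetical site template: section titles every SOP draft must contain.
REQUIRED_SECTIONS = ("Purpose", "Scope", "Responsibilities", "Procedure", "References", "Revision History")

# Leading numbering such as "1.", "4.2" or "4.2." is ignored when matching titles.
_NUMBERING = re.compile(r"^\s*\d+(\.\d+)*\.?\s*")


def missing_sections(draft_text: str, required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """Return the required section titles not found as a stand-alone line in the draft.

    Matching is case-insensitive and ignores leading section numbers.
    """
    lines = {
        _NUMBERING.sub("", line).strip().lower()
        for line in draft_text.splitlines()
        if line.strip()
    }
    return [title for title in required if title.lower() not in lines]


if __name__ == "__main__":
    draft = "1. Purpose\nDescribes cleaning of Line 3.\n2. Scope\n3. Procedure\n4. References\n"
    print(missing_sections(draft))
    # prints ['Responsibilities', 'Revision History']
```

A rule-based check like this gives the same answer every time. That makes it simpler to validate than a generative model performing the same task.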

What Validation Approach Do AI Systems Require?

- Define intended use. Document precisely what the AI will do within your documentation process. Intended use statements should be specific: “AI tool X generates initial SOP drafts for non-critical procedures, subject to SME review per SOP-123.”

- Demonstrate fit-for-purpose performance. Draft Annex 22 expects evidence that AI performance is at least as good as the manual process it supports. In practice, this means measuring current baseline performance (error rates, cycle times, consistency) and then demonstrating that AI-assisted outputs achieve equivalent or better results.

- Apply risk-based testing. FDA CSA guidance supports a focused validation effort on high-risk functions. For documentation AI, critical test scenarios include handling complex regulatory requirements, maintaining content accuracy, and ensuring failures are detected during human review.

- Establish change control. AI model updates—whether vendor-supplied or internally developed—require a documented impact assessment. Treat AI providers as critical suppliers, with qualification dossiers that include model version, training data, known limitations, and internal qualification evidence.
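The fit-for-purpose comparison can be expressed as a simple acceptance check. This sketch assumes hypothetical `ReviewSample` tallies of confirmed content errors from documented SME reviews, one for manually authored drafts and one for AI-assisted drafts. It compares point estimates only. A real validation protocol would pre-define its acceptance criteria, sample sizes, and any statistical justification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewSample:
    """Hypothetical tally from documented SME review of a batch of drafts."""
    documents_reviewed: int
    errors_found: int  # content errors confirmed by the qualified reviewer

    @property
    def error_rate(self) -> float:
        if self.documents_reviewed <= 0:
            raise ValueError("documents_reviewed must be positive")
        return self.errors_found / self.documents_reviewed


def meets_baseline(manual: ReviewSample, ai_assisted: ReviewSample) -> bool:
    """True if the AI-assisted error rate is no worse than the manual baseline."""
    return ai_assisted.error_rate <= manual.error_rate


if __name__ == "__main__":
    # Illustrative numbers only, not measured data.
    manual = ReviewSample(documents_reviewed=40, errors_found=6)       # 15% baseline
    ai_assisted = ReviewSample(documents_reviewed=40, errors_found=4)  # 10% with AI drafting
    print(meets_baseline(manual, ai_assisted))  # prints True
```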

AI Documentation Validation Checklist

- Intended use defined and documented

- Baseline manual process performance measured

- Test scenarios covering critical content requirements

- Human review effectiveness verified

- Vendor qualification completed (if applicable)

- Change control procedure established for model updates

- Performance monitoring metrics defined

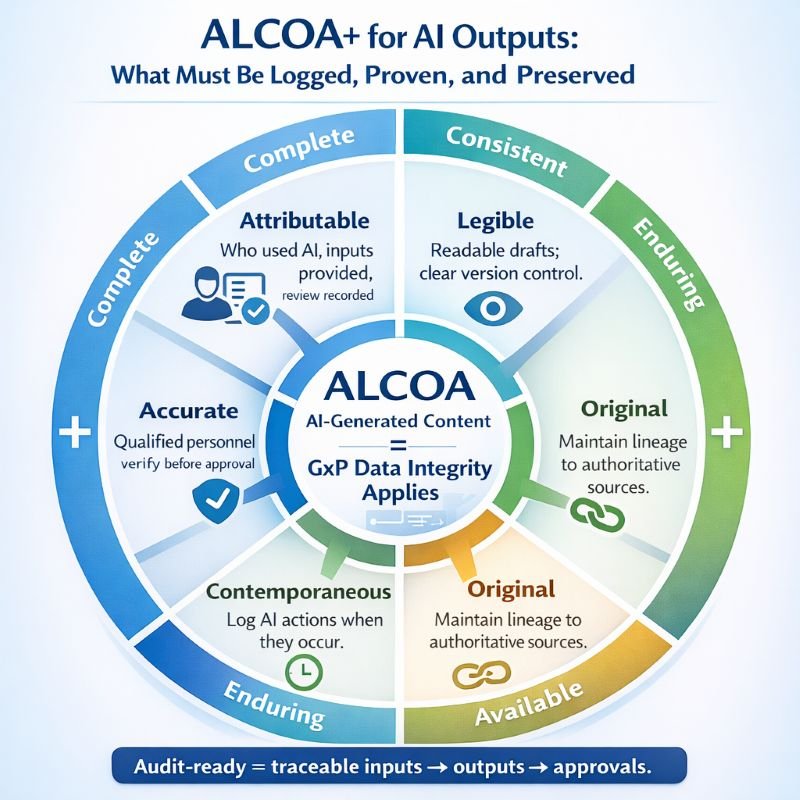

How Do ALCOA+ Principles Apply to AI-Generated Content?

- Attributable requires audit trails that record who initiated AI use, the inputs provided, and how outputs were reviewed. The system must log AI interventions with user identification and timestamps.

- Legible requires AI-generated content to be human-readable and supported by clear version control. Draft outputs must remain distinguishable from approved content during the review process.

- Contemporaneous requires real-time logging of AI interactions. Records must reflect when AI assistance occurred, not when it was later documented.

- Original requires maintaining source data integrity and documenting lineage from authoritative references. RAG systems must identify which sources informed each output.

- Accurate requires verification by qualified personnel before implementation. Human reviewers must confirm content correctness before AI-generated material enters the controlled document system.

- The extended ALCOA+ attributes—Complete, Consistent, Enduring, and Available—ensure comprehensive documentation, standardized processes, long-term archival, and controlled access throughout the AI-assisted documentation lifecycle.
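To make these attributes concrete, the sketch below logs each AI interaction as a structured, time-stamped record. It is a minimal illustration, not a validated audit trail. The following elements are all assumptions chosen for this example:

- the `AIInteractionRecord` fields;
- the SHA-256 fingerprints of the exact input and output;
- the hash chain that links each record to its predecessor, so that edits to earlier entries become detectable.

The sketch also assumes that full draft text is retained in the controlled document system, and that human review and approval events are logged in the same trail.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def sha256_text(text: str) -> str:
    """Fingerprint exact content so later changes to it are detectable."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AIInteractionRecord:
    user_id: str                 # Attributable: who initiated the AI use
    model_version: str           # which model produced the output
    input_sha256: str            # fingerprint of the exact input provided
    output_sha256: str           # fingerprint of the exact draft returned
    source_ids: tuple[str, ...]  # Original: authoritative sources that informed the output (RAG)
    status: str = "DRAFT"        # Legible: keeps AI output distinguishable from approved content
    timestamp_utc: str = field(default_factory=utc_now)  # Contemporaneous: set when the record is created
    prev_hash: str = ""          # hash of the previous record (tamper-evidence chain)

    def record_hash(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True)
        return sha256_text(payload)


class AuditTrail:
    """Append-only, hash-chained log of AI interactions (illustrative only)."""

    def __init__(self) -> None:
        self._records: list[AIInteractionRecord] = []

    def log(self, user_id: str, model_version: str, prompt: str, output: str,
            source_ids: list[str]) -> AIInteractionRecord:
        prev = self._records[-1].record_hash() if self._records else ""
        record = AIInteractionRecord(
            user_id=user_id,
            model_version=model_version,
            input_sha256=sha256_text(prompt),
            output_sha256=sha256_text(output),
            source_ids=tuple(source_ids),
            prev_hash=prev,
        )
        self._records.append(record)
        return record

    def verify_chain(self) -> bool:
        """True if every record still points at the unchanged hash of its predecessor."""
        return all(
            later.prev_hash == earlier.record_hash()
            for earlier, later in zip(self._records, self._records[1:])
        )


if __name__ == "__main__":
    trail = AuditTrail()
    trail.log("jsmith", "model-v1.2", "Draft SOP for line clearance", "<draft text>", ["EU-GMP-Ch4", "SOP-123"])
    trail.log("jsmith", "model-v1.2", "Summarise SOP-123", "<summary text>", ["SOP-123"])
    print(trail.verify_chain())  # prints True
```

Hash chaining only makes tampering detectable. Access control and secure retention (Enduring, Available) still depend on the underlying system.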

What Governance Framework Supports AI in Documentation?

- Establish AI usage policies and SOPs. Define which AI tools are approved, for what purposes, and under what controls. Policies should specify approval authority, prohibited uses, and documentation requirements for AI-assisted activities.

- Define roles and responsibilities. Clarify who may initiate AI use, who reviews outputs, and who approves final documents. Accountability must be unambiguous; AI should never be listed as the author of record.

- Implement training and competency requirements. Personnel must understand both AI capabilities and limitations. Training should emphasize critical review skills and the essential requirement for professional judgment.

- Complete vendor qualification. Assess AI providers against GAMP 5 principles, with particular attention to model transparency, change notification procedures, and data governance assurances.
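The role rules above can also be enforced at the point of sign-off. This sketch assumes hypothetical AI service-account names and a set of users with recorded AI-review training. The two rules it applies mirror the policy points in this section, not the wording of any specific regulation:

- No AI identity may be the author, reviewer, or approver of record.
- Reviewers must be trained.

```python
from dataclasses import dataclass

# Hypothetical identities under which AI tools run; they may create drafts but never sign off.
AI_SERVICE_ACCOUNTS = frozenset({"svc-docassist"})


@dataclass(frozen=True)
class SignOff:
    author_of_record: str
    reviewer: str
    approver: str


def signoff_violations(signoff: SignOff, trained_reviewers: set[str]) -> list[str]:
    """Return policy violations for a controlled-document sign-off (illustrative rules)."""
    problems = []
    roles = (
        ("author", signoff.author_of_record),
        ("reviewer", signoff.reviewer),
        ("approver", signoff.approver),
    )
    for role, person in roles:
        if person in AI_SERVICE_ACCOUNTS:
            problems.append(f"{role} of record is an AI identity: {person}")
    if signoff.reviewer not in trained_reviewers:
        problems.append(f"reviewer {signoff.reviewer} has no recorded AI-review training")
    return problems


if __name__ == "__main__":
    signoff = SignOff(author_of_record="jsmith", reviewer="svc-docassist", approver="qa.lead")
    for problem in signoff_violations(signoff, trained_reviewers={"akhan"}):
        print(problem)
    # prints:
    # reviewer of record is an AI identity: svc-docassist
    # reviewer svc-docassist has no recorded AI-review training
```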

What Are the Benefits and Limitations of Embedded AI?

- Benefits. When properly embedded, AI in pharmaceutical documentation can deliver measurable improvements, such as 30–40% reductions in document revision cycles and 25% fewer procedural errors, as well as improved inspection readiness. Actual gains depend on the baseline process and the quality of implementation. AI identifies inconsistencies that human authors may miss and supports timely updates when regulations change.

- Limitations. Over-reliance remains a risk: AI outputs require the same critical review as manually authored content. Data quality directly affects AI performance; outdated training data produces outdated outputs. Explainability gaps may also complicate responses during regulatory inspections.

- Mitigation. Governance controls such as human review requirements, audit trails, validation evidence, and vendor qualification address these limitations systematically. The goal is controlled augmentation, not autonomous operation.

Future Outlook

Draft Annex 22's conservative approach, which restricts generative AI to non-critical applications, reflects current regulatory caution. As the industry demonstrates effective control over AI systems, future guidance revisions may expand permissible applications.

The likely trajectory is that AI becomes a permanent, governed component of pharmaceutical quality systems. Organisations that establish robust governance frameworks now will be better positioned to adopt expanded capabilities as regulatory confidence matures.

Conclusion

Embedding AI into pharmaceutical documentation can strengthen compliance when properly controlled. AI assists with drafting, gap analysis, standardisation, and lifecycle monitoring, activities that often consume significant QA resources without adding proportionate value.

The regulatory framework is taking shape. Draft EU GMP Annex 22, FDA's draft AI guidance, and established principles from Annex 11 and Chapter 4 define the boundaries. Within those boundaries, AI in pharmaceutical documentation serves as a validated tool that supports human decision-making.

Common Questions and Answers

Can AI author final SOPs under GMP requirements?

No. AI may help draft, but final authorship and approval of controlled GMP documents must remain with qualified humans, with AI treated only as a supporting tool.

Does EU GMP Annex 22 apply to Australian pharmaceutical operations?

Once finalised, Annex 22 will apply directly to sites operating under EU GMP or supplying the EU market, including Australian sites that export to the EU. For other Australian operations it is not mandated. It is, however, closely aligned with TGA and PIC/S expectations and is a useful governance benchmark.

What validation evidence do inspectors expect for AI documentation tools?

Inspectors expect the following, in line with EU GMP Annex 11 and 21 CFR Part 11:

- a defined intended use;
- a risk assessment;
- fit-for-purpose validation or qualification;
- change-control records;
- audit trails showing human review;
- version control of both documents and models.

How do we maintain ALCOA+ compliance when AI generates content?

Log AI use (user, time, inputs, outputs), maintain version control to separate drafts from approved documents, clearly identify human reviewers/approvers, and, for RAG, document source repositories to preserve traceability.

What distinguishes critical from non-critical AI applications?

Critical AI directly affects patient safety, product quality, or data integrity (e.g. release or process-control decisions); non-critical AI only supports human work (e.g. drafting, summarising), and draft Annex 22 restricts generative AI to such non-critical, human-supervised uses.

Can AI replace human review of controlled documents?

No. AI may assist drafting and checks, but qualified personnel must still review and approve controlled documents, and current EU/FDA expectations do not allow delegating that accountability to AI.

What governance documents should we establish before implementing AI in documentation?

Have an AI usage policy, an SOP for AI-assisted documentation, a validation/qualification plan, vendor qualification records, and training records to show controlled, risk-based implementation during inspections.

References

- European Commission – EudraLex Volume 4: EU Guidelines for Good Manufacturing Practice for Medicinal Products for Human and Veterinary Use

- European Commission – EU Guidelines for Good Manufacturing Practice for Medicinal Products for Human and Veterinary Use, Part I, Chapter 4: Documentation

- GxP Vigilance – AI Policy: Responsible Local AI Use at GxPVigilance

- GxP Vigilance – AI Governance & Strategy (service framework aligning AI governance with QMS, CSV/GAMP 5, data integrity, and regulatory expectations for GMP/GCP/GVP operations)

- European Commission / EMA – Draft EU GMP Annex 22: Artificial Intelligence

- U.S. Food and Drug Administration (FDA) – Considerations for the Use of Artificial Intelligence To Support Regulatory Decision-Making for Drug and Biological Products (Draft Guidance for Industry, 2025)

- U.S. Food and Drug Administration (FDA) – Computer Software Assurance for Production and Quality System Software

- U.K. Medicines and Healthcare products Regulatory Agency (MHRA) – GXP Data Integrity Guidance and Definitions

- World Health Organization (WHO) – WHO Technical Report Series 996, Annex 5: Guidance on Good Data and Record Management Practices

Disclaimer

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

Regulatory requirements, inspection expectations, and system obligations may vary based on jurisdiction, study design, technology, and organisational context. As such, the information presented here should not be relied upon as a substitute for project-specific assessment, validation, or regulatory decision-making.

We have no commercial relationship with some of the entities, vendors, or software referenced. Any examples are illustrative only, and usage may vary by organisation and their needs.

For guidance tailored to your organisation, systems, or clinical programme, we recommend speaking directly with us or engaging another suitably qualified subject matter expert (SME) to assess your specific needs and risk profile.