Remember when your team spent three days manually processing a single complex adverse event report? That reality is rapidly changing. As of 2025, leading pharmaceutical companies process millions of adverse events annually through AI-augmented systems, achieving 30-70% efficiency improvements while navigating newly clarified regulatory frameworks from the FDA, EMA, TGA, and CIOMS.

The question for pharmacovigilance managers is no longer whether to implement AI in drug safety operations, but how to do so in a compliant, validated manner that maintains patient safety.

You’ll learn: Current AI maturity across PV workflows, 2025 regulatory validation requirements from FDA/EMA/CIOMS/TGA, production deployment results from Sanofi and Pfizer, human oversight models (HITL/HOTL/HIC), and a step-by-step implementation guide for pharmaceutical companies.

Regulatory Frameworks Converge on Risk-Based Validation

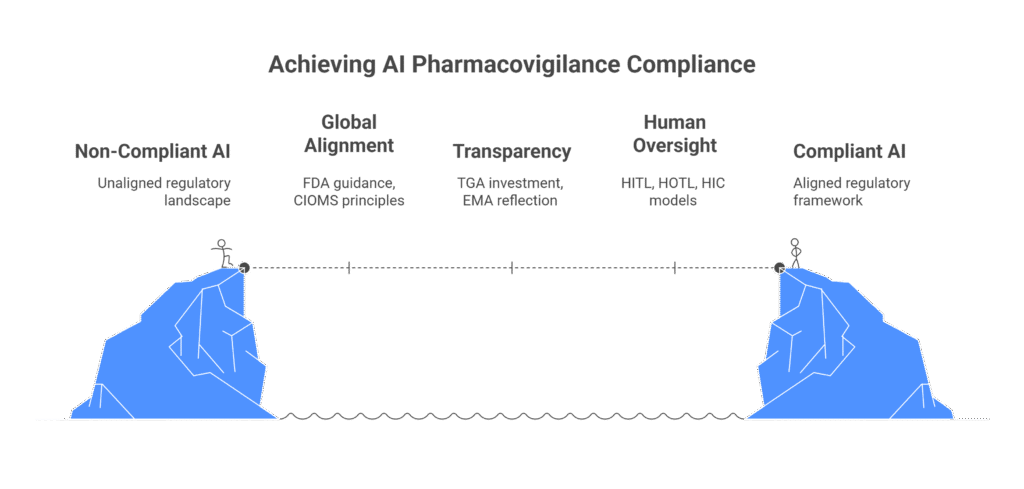

The 2025 regulatory environment reflects unprecedented international alignment on AI in pharmacovigilance. Four major regulatory bodies published comprehensive guidance within 18 months, creating clear implementation pathways.

NEW GUIDANCE: FDA January 2025 AI Framework

The FDA issued a draft guidance in January 2025, titled ‘Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products,’ which establishes a seven-step risk-based credibility assessment framework. This framework provides recommendations for AI applications in drug development and post-market surveillance, but it is not mandatory, as FDA guidance documents generally describe the Agency’s current thinking and are not legally enforceable.

The guidance, which cites a paper on over 300 AI submissions since 2016, recommends that all performance estimates include statistical confidence ranges. Additionally, the FDA introduced the Emerging Drug Safety Technology Program (EDSTP) as a voluntary dialogue pathway for sponsors implementing AI in pharmacovigilance.
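As an illustration of reporting a performance estimate with a confidence range, the sketch below computes a Wilson score interval for a sensitivity estimate. The function name and the validation counts are hypothetical, and the Wilson method is one common choice rather than something the guidance prescribes.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical validation result: 460 of 500 known serious cases flagged by the model
low, high = wilson_interval(460, 500)
print(f"Sensitivity {460 / 500:.3f}, 95% CI {low:.3f} to {high:.3f}")
```

Reporting the interval alongside the point estimate makes it visible when a validation set is too small to support the claimed performance.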

CIOMS Working Group XIV: Seven Core Principles

CIOMS published draft guidance in May 2025 articulating the global framework: risk-based approach, human oversight, validity and robustness, transparency, data privacy, fairness and equity, and governance and accountability. The document provides the most detailed practical guidance on human oversight models, distinguishing between human-in-the-loop (HITL), human-on-the-loop (HOTL), and human-in-command (HIC) approaches.

CIOMS explicitly warns that “misguided automation efforts focused exclusively on efficiency and financial targets risk eliminating the value added by humans in the loop and could ultimately compromise the unique strength of the pharmacovigilance system.”

TGA and EMA Alignment

Australia’s TGA published consultation outcomes in July 2025, identifying urgent regulatory gaps around adaptive AI and open datasets. The TGA allocated $39.9 million over five years for Safe and Responsible AI initiatives and signalled increased compliance enforcement for 2025-2026, particularly targeting companies whose AI tools may qualify as regulated medical devices.

The EMA’s September 2024 Reflection Paper aligns with the EU AI Act (in force since August 1, 2024). In March 2025 the agency also issued its first AI qualification opinion, for the AIM-NASH tool, demonstrating regulatory acceptance of AI-assisted data when properly validated.

Five Non-Negotiable Requirements

All four regulatory bodies converge on:

- Risk-based validation proportionate to model influence and decision consequence

- Mandatory human oversight with documented HITL/HOTL/HIC approaches

- Data quality and provenance as foundational requirements

- Life cycle maintenance beyond one-time validation

- Transparency with accountability throughout the AI system lifecycle

The FDA’s draft guidance recommends that evaluation methods “consider the performance of the human-AI team, rather than just the performance of the model in isolation,” which shifts validation from assessing AI alone to assessing combined human-AI performance.

Production Systems Deliver Measurable Outcomes

Sanofi’s Project ARTEMIS

Sanofi processes over 700,000 adverse event reports annually through AI-augmented systems deployed with IQVIA. Launched in 2019, ARTEMIS achieved “substantial cost savings” while enhancing consistency. Over 120,000 cases per year flow through automated systems, representing more than 15% of global intake as of 2021, with the proportion continuing to increase.

ArisGlobal’s Largest Volume Deployment

ArisGlobal’s production system deployed in April 2025 delivered 80% faster signal assessment by safety physicians and nearly 50% reduction in false positive signals during detection. The implementation enables earlier identification of genuine safety issues for proactive risk management.

Quantified Industry Benefits

Leading pharmaceutical implementations demonstrate:

- Time savings: 50-80% reduction in case processing

- Accuracy improvements: 90-95% detection rates versus 60-70% traditional methods

- Efficiency gains: 70% automation of report content assembly

- Cost reductions: Industry-wide savings potential

Pfizer’s 2019 vendor evaluation demonstrated that AI achieved F1 scores exceeding internal benchmarks within just two training cycles, with systems training solely on adverse event database content rather than requiring costly source document annotations.
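For readers less familiar with the metric, the F1 score is the harmonic mean of precision and recall. The sketch below shows the calculation. The counts are invented for illustration and are not Pfizer's results.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """F1 = 2 * precision * recall / (precision + recall); returns 0.0 when undefined."""
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical extraction results: 80 correct entities, 20 spurious, 20 missed
print(f"F1 = {f1_score(80, 20, 20):.2f}")
```

Because F1 penalises both missed and spurious extractions, it is a more demanding benchmark than raw accuracy when true adverse event mentions are sparse.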

Human Oversight Models Define Workflow Integration

CIOMS Working Group XIV articulates three distinct oversight models pharmaceutical companies are implementing:

Human-in-the-Loop (HITL)

Required for high-risk PV decisions including causality assessment, case validity determination, and seriousness evaluation. AI provides recommendations but humans make final decisions. Validation must measure combined human-AI system performance versus human-only baselines.
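A minimal sketch of comparing the human-AI team against a human-only baseline, assuming both sets of final decisions are scored against the same gold-standard benchmark. The eight cases and their labels are hypothetical.

```python
def accuracy(decisions: list[bool], gold: list[bool]) -> float:
    """Share of decisions that agree with the gold-standard label."""
    if len(decisions) != len(gold) or not gold:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(d == g for d, g in zip(decisions, gold)) / len(gold)


# Hypothetical seriousness calls on eight benchmark cases (True = serious)
gold_standard = [True, False, True, True, False, False, True, False]
human_only = [True, False, False, True, False, True, True, False]
human_with_ai = [True, False, True, True, False, True, True, False]

baseline = accuracy(human_only, gold_standard)
team = accuracy(human_with_ai, gold_standard)
print(f"human-only {baseline:.3f}, human-AI team {team:.3f}, difference {team - baseline:+.3f}")
```

A real validation would use a far larger benchmark and report the difference with a confidence interval. The point here is only that the unit being measured is the final human decision made with AI support, not the raw model output.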

Human-on-the-Loop (HOTL)

Suits medium-risk, high-volume tasks like duplicate detection, auto-coding, and data extraction. AI processes cases automatically with humans reviewing samples or exceptions. Organizations typically require human validation for any case where the AI confidence score falls below 80%.
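The confidence-threshold rule can be sketched as a small routing function. The class, function, and queue names are hypothetical. The 0.80 cut-off mirrors the 80% figure above, and each organisation would need to justify and validate its own value.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.80  # assumption: the 80% figure quoted in the text


@dataclass
class CodedCase:
    case_id: str
    confidence: float  # model confidence for its output, between 0.0 and 1.0


def route(case: CodedCase, threshold: float = REVIEW_THRESHOLD) -> str:
    """Send low-confidence cases to a reviewer; the rest are accepted and sampled later."""
    if not 0.0 <= case.confidence <= 1.0:
        raise ValueError(f"confidence out of range for {case.case_id}")
    if case.confidence < threshold:
        return "human_review"
    return "auto_accept_with_sampling"


for case in (CodedCase("CASE-0001", 0.62), CodedCase("CASE-0002", 0.93)):
    print(case.case_id, route(case))
```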

Human-in-Command (HIC)

Applies to low-risk, routine tasks like intake logging and report formatting. AI operates autonomously while humans monitor aggregate performance through periodic audits. This model remains rare in 2025 due to regulatory caution.

Practical Workflow Mapping

- Case intake/processing: HOTL with quality scoring

- Medical coding: HITL evolving to HOTL with high confidence thresholds

- Case validity assessment: Mandatory HITL (regulatory requirement)

- Causality assessment: Mandatory HITL (requires medical judgment)

- Signal detection: HIC with human validation and clinical evaluation

- Regulatory reporting: Mandatory HITL (legal requirement)
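One way to make the mapping above enforceable is to hold it as configuration that workflow code must consult. This is a sketch under assumptions: the task keys and helper name are hypothetical, and medical coding is set to HITL as the starting point of its "HITL evolving to HOTL" path.

```python
from enum import Enum


class Oversight(Enum):
    HITL = "human-in-the-loop"
    HOTL = "human-on-the-loop"
    HIC = "human-in-command"


# Mirrors the workflow mapping listed above
WORKFLOW_OVERSIGHT = {
    "case_intake": Oversight.HOTL,
    "medical_coding": Oversight.HITL,  # may move to HOTL once thresholds are validated
    "case_validity": Oversight.HITL,
    "causality_assessment": Oversight.HITL,
    "signal_detection": Oversight.HIC,
    "regulatory_reporting": Oversight.HITL,
}


def requires_human_decision(task: str) -> bool:
    """True when a human must make the final decision for this task."""
    return WORKFLOW_OVERSIGHT[task] is Oversight.HITL


print(requires_human_decision("causality_assessment"))
print(requires_human_decision("case_intake"))
```

Keeping the mapping in one place means a change of oversight model goes through change control rather than being scattered across code paths.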

Implementation Challenges Require Proactive Mitigation

Data Quality and Standardization

With a median underreporting rate of 94% in spontaneous reporting systems and data fragmented across sources (EHRs, social media, literature, claims data), data quality remains the top challenge. Solutions include implementing common data models like OMOP CDM, using MedDRA for adverse events and RxNorm for drug coding, deploying federated learning approaches, and establishing data governance frameworks.

Algorithmic Bias

AI models trained on limited populations perform poorly for diverse patient groups. Solutions include data augmentation, quantitative bias detection tools, federated learning, fairness-aware algorithms, and regular bias auditing across demographic subgroups.
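A subgroup bias audit can start as simply as comparing recall across demographic groups. In the sketch below, the record layout, the age bands, and the 10-point gap tolerance are all assumptions chosen for illustration.

```python
from collections import defaultdict


def recall_by_subgroup(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records hold (subgroup, is_true_event, model_flagged); recall uses true events only."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for subgroup, is_true_event, model_flagged in records:
        if is_true_event:
            totals[subgroup] += 1
            hits[subgroup] += int(model_flagged)
    return {group: hits[group] / totals[group] for group in totals}


def subgroups_below_best(recalls: dict[str, float], max_gap: float = 0.10) -> list[str]:
    """Subgroups whose recall trails the best-performing subgroup by more than max_gap."""
    best = max(recalls.values())
    return [group for group, value in recalls.items() if best - value > max_gap]


# Hypothetical audit sample: ten true events per age band
sample = (
    [("18-64", True, True)] * 9 + [("18-64", True, False)]
    + [("65+", True, True)] * 7 + [("65+", True, False)] * 3
)
recalls = recall_by_subgroup(sample)
print(recalls, subgroups_below_best(recalls))
```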

Validation and Monitoring

Best practices include pre-deployment validation through gold-standard benchmark datasets, cross-validation with sensitivity analysis, continuous monitoring with real-time performance dashboards, and periodic revalidation with formal documentation per GAMP 5 principles.
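Continuous monitoring can be sketched as a rolling comparison between recent agreement with human reviewers and the accuracy recorded at validation. The class name, window size, and tolerance are hypothetical. GAMP 5 does not prescribe this mechanism.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Tracks rolling agreement between AI output and the reviewer's final decision."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 200):
        self.baseline = baseline    # accuracy documented at validation
        self.tolerance = tolerance  # acceptable drop before escalation
        self.outcomes: deque = deque(maxlen=window)

    def record(self, matched_reviewer: bool) -> None:
        self.outcomes.append(matched_reviewer)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_revalidation(self) -> bool:
        """Only judge once the window is full, to avoid alarms on a handful of cases."""
        current = self.rolling_accuracy()
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and current is not None and current < self.baseline - self.tolerance


monitor = DriftMonitor(baseline=0.95, tolerance=0.05, window=10)
for matched in [True] * 8 + [False] * 2:
    monitor.record(matched)
print(monitor.rolling_accuracy(), monitor.needs_revalidation())
```

A triggered flag would normally open a documented investigation rather than retrain the model automatically, which keeps model updates inside change control.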

Workforce Transformation Creates Hybrid Roles

The PV workforce is evolving rather than shrinking. Case processors transition from manual data entry to validating AI outputs and training systems through feedback loops. Medical reviewers shift from manual causality assessment to interpreting AI-generated risk assessments and making final adjudication decisions.

New specialised roles include AI-driven safety specialists, PV innovation specialists bridging drug safety and data science teams, AI validators ensuring regulatory compliance, PV data scientists developing AI-enhanced methodologies, and AI compliance officers overseeing governance frameworks.

Core competencies required include understanding AI/ML/NLP fundamentals conceptually, knowledge of AI validation and performance monitoring, understanding of data quality and bias issues, and ability to interact with AI-enhanced safety databases. Traditional PV competencies remain essential—pharmacology, regulatory requirements, medical coding, and causality assessment.

Strategic Implementation Pathway for Australian Companies

Start with Clear Business Cases

Quantify expected benefits—processing time reduction, earlier signal detection, cost savings—while calculating ROI including technology costs, validation effort, and ongoing maintenance. Identify high-value use cases in case intake, coding, or literature screening with pilots running 3-6 months.
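The ROI calculation described above can be sketched as follows. Every figure is a placeholder to be replaced with an organisation's own estimates, and the simple undiscounted formula is an assumption. A finance team may prefer a discounted cash flow.

```python
def simple_roi(
    annual_benefit: float,
    technology_cost: float,
    validation_cost: float,
    annual_maintenance: float,
    years: int = 3,
) -> float:
    """Undiscounted ROI over the period: (total benefit - total cost) / total cost."""
    total_cost = technology_cost + validation_cost + annual_maintenance * years
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    total_benefit = annual_benefit * years
    return (total_benefit - total_cost) / total_cost


# Placeholder figures, not benchmarks
roi = simple_roi(
    annual_benefit=500_000,
    technology_cost=400_000,
    validation_cost=200_000,
    annual_maintenance=100_000,
    years=3,
)
print(f"Three-year ROI: {roi:.0%}")
```

Listing validation and maintenance as separate inputs keeps them from being left out of the business case, which is a common source of optimistic estimates.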

Establish Robust Data Governance

Create committees with cross-functional representation, define data ownership and access controls, break down data silos, implement data quality monitoring before AI deployment, and ensure compliance with GDPR, HIPAA, and other applicable privacy regulations.

Pilot Before Scaling

Begin with well-defined, limited-scope projects, define clear success metrics, use pilots to refine processes and build organizational confidence, document lessons learned, and expand incrementally to additional use cases.

Australia-Specific Considerations

Engage with TGA early given increased compliance enforcement for 2025-2026. Clarify whether AI systems qualify as regulated medical devices. Ensure inclusion in the Australian Register of Therapeutic Goods, if required. Comply with TGA’s urgent recommendations on adaptive AI and open datasets. Document data governance addressing TGA’s provenance concerns. Align with TGA’s $39.9 million Safe and Responsible AI initiative expectations.

Conclusion: The Path Forward

The regulatory landscape has matured, enabling confident AI deployment in pharmacovigilance. Production systems report 50-80% reductions in case processing time while maintaining safety. Clear frameworks exist for validation, documentation, and human oversight. Australian pharmaceutical companies that strategically implement AI with robust governance while preserving patient safety as their primary concern will realise substantial operational advantages through 2025 and beyond.

The opportunity is clear: organisations beginning implementation today can achieve measurable benefits within 6-12 months while building capabilities for increasingly sophisticated applications. The risk of inaction is equally clear: competitors implementing AI will operate with dramatically lower costs, faster signal detection, and stronger regulatory compliance positions.

What Auditors Look For: Inspection Readiness

Critical documentation expectations:

- Updated Pharmacovigilance System Master Files reflecting AI/ML operations

- Validation documentation with confidence intervals (recommended in the FDA draft guidance)

- Change control procedures for model updates

- Credibility assessment plans and reports available upon inspection request

- Risk assessments for each AI system

- Data provenance documentation proving quality and representativeness

- Validation reports demonstrating performance across diverse populations

- Human oversight procedures with training records

- Comprehensive audit trails showing AI contribution to safety decisions
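To illustrate what an audit trail entry for an AI-assisted decision might capture, the sketch below records the model version, the AI output, and the reviewer's final decision in one immutable record. The field names and example values are hypothetical and do not follow any regulator's schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    case_id: str
    task: str
    model_version: str
    ai_output: str
    ai_confidence: float
    reviewer_id: str
    final_decision: str
    recorded_at: str  # ISO 8601 timestamp in UTC

    @property
    def overridden(self) -> bool:
        """True when the reviewer's decision differs from the AI output."""
        return self.ai_output != self.final_decision


def to_json_line(entry: AuditEntry) -> str:
    """Serialise one entry as a single JSON line for an append-only log."""
    record = asdict(entry)
    record["overridden"] = entry.overridden
    return json.dumps(record, sort_keys=True)


entry = AuditEntry(
    case_id="CASE-0001",
    task="seriousness_evaluation",
    model_version="triage-model-1.4.2",
    ai_output="serious",
    ai_confidence=0.91,
    reviewer_id="reviewer-017",
    final_decision="non-serious",
    recorded_at=datetime.now(timezone.utc).isoformat(),
)
print(to_json_line(entry))
```

Recording overrides explicitly gives inspectors a direct view of how often, and where, humans disagree with the system.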

Top 10 inspection risk areas:

- Missing confidence intervals on performance metrics

- No documented risk assessment

- Unknown data provenance (TGA urgent flag)

- Inadequate bias assessment across demographic subgroups

- No demonstration of test data independence from training data

- SOPs not updated to reflect AI use

- PSMF not including AI systems

- Insufficient audit trails for AI decisions

- Inadequate documentation of human oversight qualifications

- Lack of life cycle maintenance plans addressing model drift
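One of the risk areas above, test data independence, lends itself to a simple automated check. The sketch below assumes each case carries a stable identifier. Real checks would also need to account for duplicates and follow-up reports of the same case, which share content under different identifiers.

```python
def leaked_case_ids(train_ids: list[str], test_ids: list[str]) -> set[str]:
    """Case identifiers that appear in both the training and the test set."""
    return set(train_ids) & set(test_ids)


# Hypothetical identifiers
train = ["CASE-0001", "CASE-0002", "CASE-0003"]
test = ["CASE-0003", "CASE-0004"]

overlap = leaked_case_ids(train, test)
if overlap:
    print(f"Test set is not independent: {sorted(overlap)}")
```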

References

- FDA. Draft Guidance: Considerations for the Use of Artificial Intelligence To Support Regulatory Decision-Making for Drug and Biological Products. January 2025.

- CIOMS Working Group XIV. Artificial Intelligence in Pharmacovigilance. Draft Report for Public Consultation. May 2025.

- TGA. AI Review: Outcomes Report. July 2025.

- EMA. Reflection Paper on the Use of Artificial Intelligence in Drug Development. September 2024.

- IQVIA. AI Trends in Pharma: Enhancing Drug Safety and Regulatory Compliance for 2025. January 2025.

- PubMed Central. Artificial Intelligence in Pharmacovigilance: Advancing Drug Safety Monitoring and Regulatory Integration. PMC12317250. 2025.

- Research and Markets. Pharmacovigilance & Drug Safety Software Market Size Analysis. 2025.

Frequently Asked Questions

Which pharmacovigilance activities are most mature for AI?

Case intake automation (40-60% automation achieved), medical coding (near-human accuracy for common terms), and duplicate detection are mature applications. Case processing shows 50-70% automation potential for structured data. Signal detection is mature for traditional methods and emerging for advanced approaches. Narrative writing remains at the pilot stage and requires significant human review.

What does the FDA expect for AI validation?

The FDA’s draft guidance recommends credibility assessment reports evaluating seven dimensions: model relevance, measurement accuracy, data quality, data representativeness, risk assessment, robustness, and uncertainty quantification. All performance estimates should include confidence intervals. Validation should assess human-AI team performance, not AI alone.

Which human oversight models apply?

CIOMS distinguishes three models: Human-in-the-loop (humans make final decisions), Human-on-the-loop (humans monitor and can intervene), and Human-in-command (humans set parameters and monitor aggregate performance). High-risk decisions like causality assessment require HITL. The model must match the risk level.

What are the most common implementation pitfalls?

The most common pitfalls are data quality issues due to inadequate governance, algorithmic bias from unrepresentative training data, validation gaps that fail to demonstrate performance across diverse populations, insufficient human oversight documentation, and inadequate change control for model updates. Successful implementations address these proactively through robust governance frameworks.