AI in GxP environments can deliver significant efficiency and compliance gains—but only when the foundations for governance, validation, and oversight are in place. Learn when to deploy confidently and when to wait.

AI in GxP compliance is moving from theory to implementation across the pharmaceutical sector.

Why now — the compliance clock is ticking

Artificial intelligence (AI) is no longer experimental in the pharmaceutical sector, in Australia or elsewhere. From pharmacovigilance to GMP manufacturing, AI-driven systems are cutting documentation time, predicting equipment failures, and improving data reliability.

Yet 2025 marks a turning point.

In July 2025, the TGA published its outcomes report, “Clarifying and Strengthening the Regulation of Medical Device Software, Including Artificial Intelligence (AI)”. The TGA references ISO/IEC 42001 as one of several relevant AI standards; like other ISO standards, it is voluntary rather than mandated. Meanwhile, in Europe, the European Medicines Agency (EMA) released draft Annex 22, the first dedicated GxP framework for AI systems, for public consultation from July to October 2025.

For quality, regulatory, and pharmacovigilance professionals, the question is no longer whether AI will transform GxP operations, but when your organisation is truly ready to deploy and use it responsibly. The challenge is not only timing: you must also define who will use the AI and which GxP-relevant use cases it will support. Deploy a system without clearly defined roles or appropriate applications, and you risk inspection findings, data integrity failures, and patient safety concerns. Wait too long to adopt it responsibly, and you forfeit the operational efficiencies and competitive advantages your peers are already realising.

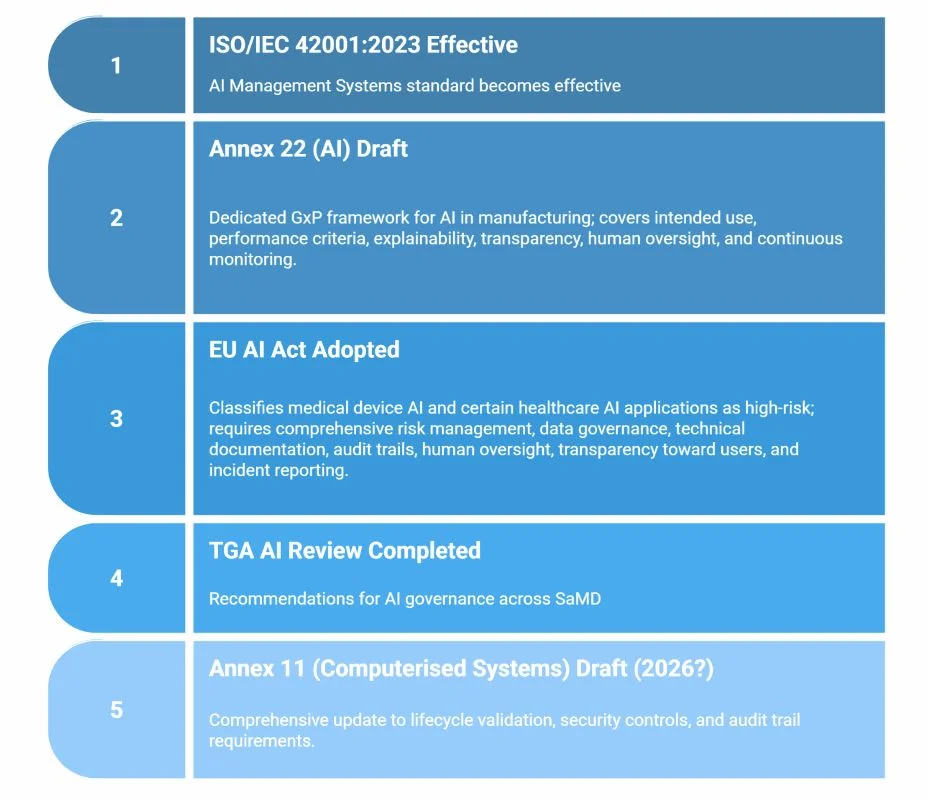

AI enters the GxP rulebook

Source: EMA, ISO, TGA, EC (accessed Nov 2025).

When to Deploy — Six criteria for confident adoption

A) Clear business case, measurable benefit

Definition: AI should solve a defined problem and outperform the current human-only baseline.

Change: Regulators now expect context-based performance evaluation, not generic accuracy claims.

Impact: Demonstrable improvement in speed, quality, or consistency supports inspection defence.

Evidence: Performance logs comparing human-plus-AI vs. human-only accuracy, deviation trends, and turnaround times.

Action:

- Document the baseline process metrics.

- Define “better” (e.g., ≥15 % faster, ≤5 % critical errors).

- Pilot limited-scope use cases (e.g., literature screening).

- Record validation results and decision rationale.

In short: Deploy when performance evidence exists—not optimism.
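To make “better” testable, the acceptance criteria can be written down as an explicit check against the documented baseline. The sketch below is a minimal illustration, assuming a hypothetical `ProcessMetrics` summary (mean turnaround time and critical-error count per review set) and reusing the example thresholds above (≥15 % faster, ≤5 % critical errors). Your own metrics and thresholds belong in the validation plan.

```python
from dataclasses import dataclass


@dataclass
class ProcessMetrics:
    """Hypothetical summary of one measured process (baseline or pilot)."""
    mean_turnaround_hours: float
    items_reviewed: int
    critical_errors: int

    @property
    def critical_error_rate(self) -> float:
        if self.items_reviewed <= 0:
            raise ValueError("items_reviewed must be positive")
        return self.critical_errors / self.items_reviewed


def evaluate_pilot(baseline: ProcessMetrics, pilot: ProcessMetrics,
                   min_speedup: float = 0.15,
                   max_critical_rate: float = 0.05) -> dict:
    """Compare a human-plus-AI pilot against the human-only baseline.

    Defaults mirror the illustrative criteria (>=15 % faster,
    <=5 % critical errors); set them from your own validation plan.
    """
    # Fractional reduction in turnaround time relative to the baseline.
    speedup = 1 - pilot.mean_turnaround_hours / baseline.mean_turnaround_hours
    error_rate = pilot.critical_error_rate
    faster_enough = speedup >= min_speedup
    errors_ok = error_rate <= max_critical_rate
    return {
        "speedup": round(speedup, 3),
        "critical_error_rate": round(error_rate, 3),
        "faster_enough": faster_enough,
        "errors_acceptable": errors_ok,
        "accept": faster_enough and errors_ok,
    }


# Illustrative numbers only, not real study data.
baseline = ProcessMetrics(mean_turnaround_hours=10.0, items_reviewed=200, critical_errors=12)
pilot = ProcessMetrics(mean_turnaround_hours=8.0, items_reviewed=200, critical_errors=6)
print(evaluate_pilot(baseline, pilot))
```

Filing the returned dictionary with the decision rationale gives a simple, reviewable record of why a pilot was or was not accepted.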

B) Acceptable risk profile with human oversight

AI belongs where failure is detectable and correctable.

Lower-risk use cases: literature screening, document drafting, data aggregation, predictive maintenance.

Higher-risk (needs human confirmation): batch release, safety signal evaluation, deviation criticality assessment.

The draft Annex 22 emphasises risk-based human oversight rather than mandating human review of every AI decision: it applies human-in-the-loop (HITL) concepts where the risk warrants them, but does not require HITL in every scenario.

Action:

- Define mandatory human-in-the-loop (HITL) steps.

- Log each override or human decision.

- Include HITL verification in validation and SOPs.
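The logging actions above can be illustrated with a small record of each AI suggestion and the human decision taken on it. This is a minimal sketch, not a validated system: the `HitlLog` class, its field names, and the rule that every override needs a rationale are assumptions for illustration. In practice the log would live in a system with a compliant audit trail.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class HitlRecord:
    """One AI suggestion and the human decision on it (hypothetical fields)."""
    item_id: str
    model_version: str
    ai_suggestion: str
    reviewer_id: str
    human_decision: str
    rationale: str
    timestamp_utc: str

    @property
    def is_override(self) -> bool:
        return self.human_decision != self.ai_suggestion


class HitlLog:
    """In-memory log that only exposes appending (illustration only)."""

    def __init__(self) -> None:
        self._records: list[HitlRecord] = []

    def record(self, item_id: str, model_version: str, ai_suggestion: str,
               reviewer_id: str, human_decision: str, rationale: str = "") -> HitlRecord:
        # No decision is logged without a named human reviewer.
        if not reviewer_id:
            raise ValueError("a named human reviewer is required")
        # Overriding the AI suggestion must be justified.
        if human_decision != ai_suggestion and not rationale:
            raise ValueError("an override needs a documented rationale")
        entry = HitlRecord(item_id, model_version, ai_suggestion, reviewer_id,
                           human_decision, rationale,
                           datetime.now(timezone.utc).isoformat())
        self._records.append(entry)
        return entry

    def overrides(self) -> list[HitlRecord]:
        return [r for r in self._records if r.is_override]

    def export_jsonl(self) -> str:
        """One JSON object per line, e.g. for periodic review of overrides."""
        return "\n".join(json.dumps({**asdict(r), "is_override": r.is_override})
                         for r in self._records)


log = HitlLog()
log.record("LIT-001", "1.2.0", "include", "qa.reviewer1", "include")
log.record("LIT-002", "1.2.0", "exclude", "qa.reviewer1", "include",
           "Adverse event mentioned in abstract")
```

Trending the override rate over time is one way to feed HITL evidence back into validation and monitoring.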

In short: for higher-risk decisions, “AI suggests, humans decide” is now the regulatory expectation.

C) Data quality and governance foundations

AI is only as reliable as its inputs. In GxP, poor data equals compliance risk.

TGA and EMA both align with ALCOA+ principles—Attributable, Legible, Contemporaneous, Original, Accurate, Complete, Consistent, Enduring, Available.

Action:

- Audit data provenance and traceability.

- Validate training datasets for bias and completeness.

- Establish data ownership and integrity controls.

- Conduct pre-deployment data integrity audits.

- Integrate AI data handling within your QMS.
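Part of a pre-deployment data integrity audit can be automated. The sketch below assumes hypothetical record fields (`record_id`, `created_by`, `created_at`, `source_system`, `value`) and flags records that lack the metadata needed to show they are attributable, contemporaneous, original, complete, and consistent. It checks presence and basic format only; it cannot prove accuracy or detect bias, which still need expert review.

```python
from datetime import datetime

# Hypothetical mapping of record fields to the ALCOA+ attribute each one evidences.
REQUIRED_FIELDS = {
    "created_by": "Attributable",
    "created_at": "Contemporaneous",
    "source_system": "Original",
    "value": "Complete",
}


def audit_records(records: list[dict]) -> list[tuple[str, str]]:
    """Return (record_id, finding) pairs for records failing basic checks."""
    findings: list[tuple[str, str]] = []
    seen_ids: set[str] = set()
    for rec in records:
        rid = str(rec.get("record_id", "<missing id>"))
        # The same identifier appearing twice suggests inconsistent data.
        if rid in seen_ids:
            findings.append((rid, "Duplicate record_id (Consistent)"))
        seen_ids.add(rid)
        # Missing or empty metadata fields.
        for field, principle in REQUIRED_FIELDS.items():
            if rec.get(field) in (None, ""):
                findings.append((rid, f"Missing {field} ({principle})"))
        # Timestamps must at least be parseable ISO 8601 strings.
        ts = rec.get("created_at")
        if ts:
            try:
                datetime.fromisoformat(ts)
            except (TypeError, ValueError):
                findings.append((rid, "Unparseable created_at timestamp (Contemporaneous)"))
    return findings


records = [
    {"record_id": "R1", "created_by": "analyst.a", "created_at": "2025-03-01T10:00:00",
     "source_system": "LIMS", "value": 7.1},
    {"record_id": "R2", "created_by": "", "created_at": "not-a-date",
     "source_system": "LIMS", "value": None},
    {"record_id": "R1", "created_by": "analyst.b", "created_at": "2025-03-02T09:30:00",
     "source_system": "LIMS", "value": 7.3},
]
for finding in audit_records(records):
    print(finding)
```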

Related reading: Quality and ALCOA+ Principles: Building Data Integrity You Can Defend Under Inspection.

D) Vendor credibility and GxP capability

General-purpose AI tools are not automatically compliant. A qualified vendor must show:

- Understanding of GxP and risk-based validation (GAMP 5 2e);

- Audit trails for AI-driven actions;

- Explainability of model outputs;

- Change control and performance monitoring.

Action:

- Perform vendor qualification (audit or documented questionnaire).

- Require validation documentation (URS, IQ/OQ/PQ summaries).

- Capture model version and retraining logs.

- Reject “black-box” vendors who can’t provide traceability.
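For the model version and retraining log, one simple approach is to record a cryptographic hash of each released model artefact, so the version in use can be checked against the version that was validated. The `ModelRegistry` below is a hypothetical sketch built on Python's standard `hashlib`; it is not any vendor's API, and a real implementation would sit under change control with its own audit trail.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ModelVersion:
    """One entry in a hypothetical model version / retraining log."""
    version: str
    artefact_sha256: str      # fingerprint of the released model file
    training_data_ref: str    # pointer to the controlled training dataset
    change_reason: str        # e.g. initial release, scheduled retraining
    released: date


@dataclass
class ModelRegistry:
    versions: list[ModelVersion] = field(default_factory=list)

    def register(self, version: str, artefact: bytes, training_data_ref: str,
                 change_reason: str, released: date) -> ModelVersion:
        """Add a new version; re-using an existing version label is refused."""
        if any(v.version == version for v in self.versions):
            raise ValueError(f"version {version} is already registered")
        entry = ModelVersion(version, hashlib.sha256(artefact).hexdigest(),
                             training_data_ref, change_reason, released)
        self.versions.append(entry)
        return entry

    def verify(self, version: str, artefact: bytes) -> bool:
        """True if the artefact in use matches the hash logged for that version."""
        for v in self.versions:
            if v.version == version:
                return v.artefact_sha256 == hashlib.sha256(artefact).hexdigest()
        raise KeyError(f"version {version} not registered")


registry = ModelRegistry()
registry.register("1.0", b"model-bytes-v1", "DS-001", "initial release", date(2025, 3, 1))
print(registry.verify("1.0", b"model-bytes-v1"))
```

A failed `verify` call is a signal that the deployed model is not the one that was validated, which is exactly the traceability gap a “black-box” vendor cannot close.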

E) Organisational AI literacy and capability

Technology succeeds only when people understand it.

Action:

- Train by role: awareness → user → governance → technical.

- Define escalation pathways for AI anomalies.

- Build AI competency into QA/RA job descriptions.

- Encourage pilot learning and sandbox testing.

In short: Skills before scale.

F) Regulatory alignment and validation strategy

2025 is a transition year, not a year of regulatory certainty:

- EMA Annex 22 (AI lifecycle and human oversight)

- ISO/IEC 42001:2023 (voluntary AI management system standard)

- FDA CSA (Computer Software Assurance)

- TGA AI Review 2025

Action:

- Define AI intended use and context of use.

- Apply risk-based validation—higher rigour for higher consequence.

- Maintain validation packages and continuous monitoring logs.

- Schedule periodic re-validation (e.g., annual + on model change).

In short: Validate once; monitor always.
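Risk-based validation and re-validation triggers can be written as explicit rules so they are applied consistently. In this sketch the impact tiers, the activity lists, and the 365-day interval are assumptions chosen to mirror the examples above (higher rigour for higher consequence; annual review plus every model change). They are not prescribed by GAMP 5 or Annex 22.

```python
from datetime import date, timedelta

# Hypothetical mapping from potential patient/product impact to validation activities.
VALIDATION_ACTIVITIES = {
    "low": ["intended use statement", "vendor assessment", "user acceptance testing"],
    "medium": ["intended use statement", "vendor assessment", "risk assessment",
               "functional testing", "user acceptance testing"],
    "high": ["intended use statement", "vendor assessment", "risk assessment",
             "functional testing", "testing on independent data",
             "HITL verification", "user acceptance testing"],
}


def required_activities(impact: str) -> list[str]:
    """Return validation activities for an impact tier (higher impact, more rigour)."""
    try:
        return VALIDATION_ACTIVITIES[impact]
    except KeyError:
        raise ValueError(f"unknown impact tier: {impact!r}") from None


def revalidation_due(last_validated: date, validated_version: str,
                     current_version: str, today: date,
                     max_interval_days: int = 365) -> tuple[bool, str]:
    """Check the two example triggers: a model change or the periodic interval."""
    if current_version != validated_version:
        return True, "model version changed since last validation"
    if today - last_validated >= timedelta(days=max_interval_days):
        return True, "periodic re-validation interval reached"
    return False, "no trigger"


print(required_activities("high"))
print(revalidation_due(date(2025, 1, 10), "2.1", "2.2", date(2025, 6, 1)))
```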

When to Wait — Eight red flags that mean “not yet”

| Red Flag | Why It Matters | What to Fix Before Deployment |
|---|---|---|
| No governance framework | Uncontrolled use conflicts with TGA/EMA expectations | Create an AI policy and an AI oversight committee |
| No human-in-the-loop control | Falls short of the human-oversight expectations in draft Annex 22 and the EU AI Act | Redesign the workflow to include human approval |
| Data provenance unknown | Breaches ALCOA+ data integrity principles | Conduct a data lineage audit |
| Staff untrained in AI | Leads to misuse and unreported errors | Provide role-based AI literacy training |
| Vendor lacks explainability | Inspection risk ("black box") | Require explainable model outputs |
| No validation plan | Departs from GAMP 5 risk-based principles | Draft a risk-based validation strategy |
| Regulatory ambiguity | Unclear authority expectations | Seek pre-submission advice from TGA/EMA |
| Insufficient resources | Incomplete controls create inspection gaps | Secure budget for validation and monitoring |

Comparison — Deploy vs Wait Decision Grid

Alt text: Table comparing “Deploy vs Wait” readiness signals for AI in GxP systems.

| Readiness Signal | Deploy Now | Wait If |
|---|---|---|
| AI use covered by SOPs and change control | Yes | No governance |
| Human oversight roles defined | Yes | No reviewer accountability |
| Data integrity (ALCOA+) met | Yes | Data siloed / incomplete |
| Vendor provides validation pack | Yes | Opaque or no documentation |
| Risk assessment completed | Yes | Undefined context or controls |
| Staff trained & competent | Yes | Limited AI understanding |

In short: If you can’t audit it, don’t deploy it.
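The grid can be applied mechanically: any signal that is not met is a reason to wait. A minimal sketch, assuming the six signals from the table and hypothetical dictionary keys; unanswered signals are treated as not met, which errs on the side of waiting.

```python
# The six readiness signals from the grid; dictionary keys are hypothetical.
READINESS_SIGNALS = {
    "sops_change_control": "AI use covered by SOPs and change control",
    "oversight_roles": "Human oversight roles defined",
    "alcoa_data": "Data integrity (ALCOA+) met",
    "vendor_validation_pack": "Vendor provides validation pack",
    "risk_assessment": "Risk assessment completed",
    "staff_trained": "Staff trained & competent",
}


def deploy_or_wait(answers: dict[str, bool]) -> tuple[str, list[str]]:
    """Return ("Deploy", []) only if every signal is met; otherwise ("Wait", gaps)."""
    # A missing answer counts as "not met", so gaps are never silently skipped.
    gaps = [label for key, label in READINESS_SIGNALS.items()
            if not answers.get(key, False)]
    return ("Wait", gaps) if gaps else ("Deploy", [])


assessment = {key: True for key in READINESS_SIGNALS}
assessment["staff_trained"] = False
print(deploy_or_wait(assessment))
```

Returning the list of gaps, not just a verdict, keeps the output usable as a remediation list for the “What to Fix” column.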

Local Context — Australia’s regulatory trajectory

Australia has not formally adopted EMA Annex 22 or ISO/IEC 42001 as regulation. Both frameworks are nonetheless referenced as useful models for robust governance, risk management, and accountability in AI-enabled medical devices and manufacturing software. AI used within manufacturing or pharmacovigilance is likely to need to demonstrate:

- Documented intended use and risk assessment;

- Human-in-the-loop control;

- Validation proportional to risk;

- Audit trail and data integrity compliance.

Although Annex 22 is EU-specific, its principles could guide future Australian regulatory updates. Sponsors deploying AI early should therefore take cues from both Annex 22 and ISO/IEC 42001 when structuring governance and validation, to stay aligned with emerging best practice and likely future expectations.

Readiness Checklist — Before you deploy AI in GxP

- Map AI use cases and classify by risk.

- Confirm data quality meets ALCOA+.

- Draft and approve AI governance SOPs.

- Validate vendor and obtain compliance documentation.

- Establish HITL checkpoints and escalation process.

- Document validation (URS → IQ/OQ/PQ → monitoring).

- Provide role-based AI training.

- Plan periodic re-validation and drift monitoring.

- Maintain inspection-ready documentation pack.

- Engage Quality early—don’t retrofit compliance later.

Checklist adapted from ISO/IEC 42001 (§5–8), GAMP 5 (2e), the EMA Annex 22 draft (2025), and TGA AI/software compliance guidance (2025).
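Drift monitoring needs a defined, repeatable metric. The checklist does not prescribe one; as an illustrative assumption, the sketch below uses the population stability index (PSI), a common way to compare the distribution of model scores in production with the distribution seen at validation. Scores are assumed to lie in [0, 1], and the 0.25 alert threshold is a commonly cited rule of thumb, not a regulatory value; set your own in the monitoring plan.

```python
import math


def _bin_proportions(values: list[float], bins: int, eps: float) -> list[float]:
    """Share of values falling in each equal-width bin over [0, 1]."""
    if not values:
        raise ValueError("need at least one score")
    counts = [0] * bins
    for v in values:
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"score {v} outside [0, 1]")
        counts[min(int(v * bins), bins - 1)] += 1  # a score of 1.0 goes in the top bin
    # Floor empty bins at eps so the log term below is defined.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: list[float], current: list[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    p = _bin_proportions(baseline, bins, eps)
    q = _bin_proportions(current, bins, eps)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_alert(baseline: list[float], current: list[float],
                threshold: float = 0.25) -> bool:
    """True when PSI meets or exceeds the (assumed) alert threshold."""
    return population_stability_index(baseline, current) >= threshold


validation_scores = [i / 100 for i in range(100)]  # illustrative, uniform scores
production_scores = [0.95] * 100                     # illustrative, heavily shifted
print(drift_alert(validation_scores, production_scores))
```

An alert would then feed the documented impact assessment or re-validation described in the checklist, rather than triggering automatic retraining.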

Frequently Asked Questions (FAQs)

Can AI be used in GxP settings in Australia today?

Yes, if it meets existing medical device and GMP requirements (intended use, classification, essential principles, data integrity, etc.). This aligns with the TGA's statement that the existing framework is “largely appropriate” for AI, with refinements to come.

Does EMA Annex 22 apply in Australia?

Not formally. Annex 22 is EU GMP guidance (the draft consultation closed on 7 October 2025; the final version is pending). However, its principles (intended use, explainability, continuous monitoring, independent test data) are expected to influence future PIC/S GMP standards. As Australia follows PIC/S guidelines, these principles may inform local inspection expectations once Annex 22 is finalised and adopted by PIC/S.

How much validation does an AI system need?

Apply proportional effort: the higher the potential patient or product impact, the deeper the validation and testing. Keep validation evidence inspection-ready.

What happens when an AI model is retrained or updated?

Retraining or updating a model is a change, so it triggers re-validation or a documented impact assessment. Use controlled retraining protocols and maintain model version logs.

Can AI replace human decision-makers in GxP processes?

No. The draft Annex 22 keeps accountability with qualified personnel, and the EU AI Act requires human oversight of high-risk AI systems. AI can assist, but it does not replace human accountability.

References

- EMA. Annex 22 – Artificial Intelligence Systems in GMP Environments (draft), public consultation July–October 2025.

- TGA. Clarifying and Strengthening the Regulation of Medical Device Software, Including Artificial Intelligence (AI) (outcomes report), July 2025.

- EU. Regulation (EU) 2024/1689 (Artificial Intelligence Act), 2024.

- ISO/IEC 42001:2023. Artificial Intelligence Management Systems – Requirements.

- ISPE. GAMP® 5: A Risk-Based Approach to Compliant GxP Computerized Systems (Second Edition), 2022.

- FDA. Computer Software Assurance for Production and Quality System Software (guidance), 2025.

- PIC/S. Annex 11 Computerised Systems (revision projected 2026).

Internal resources

- ALCOA+ in Practice — understanding data integrity in GxP (Carl Insight).

- AI Governance & Strategy — how Carl supports inspection-ready deployment.

Disclaimer

The information in this document is provided for general information and educational purposes only. It does not constitute legal, regulatory, or compliance advice, and should not be relied upon as a substitute for professional judgement.