Written by: Carl Bufe

Human-in-the-Loop (HITL) AI in pharma is not a convenience feature in regulated work; it is a control that anchors accountability, data integrity, and patient safety. In pharmaceutical regulatory affairs, HITL ensures that a qualified person reviews, validates, and approves AI-assisted outputs before they enter the regulatory record.

When an inspector asks who approved your AI-generated regulatory submission, your answer determines whether you can demonstrate compliance or must explain a finding. HITL is therefore more than AI supervision: it is the regulatory control that ties each approved output to a named, qualified person.

Regulatory teams face pressure to adopt AI tools promising faster submissions and smarter surveillance. Yet beneath the efficiency sits a compliance question many haven’t addressed: who takes responsibility when the AI gets it wrong?

What You’ll Learn – Human-in-the-Loop AI in Pharma

By the end of this article, you'll be able to identify HITL as a core regulatory control, recognise where authorities expect human validation, distinguish HITL from Human-on-the-Loop (HOTL) oversight, describe what HITL looks like operationally, and understand when reliance on HITL fails to protect against liability.

Recommended for: Regulatory affairs professionals, QPPVs, quality managers, and compliance leads evaluating AI tools for GxP environments

Why Human-in-the-Loop AI in Pharma Is Being Misunderstood

The Definition Gap

Most organisations treat HITL as a user experience feature—a review step before publishing AI outputs. This misses the regulatory point.

Human-in-the-Loop:

- A control mechanism requiring active human participation in each decision cycle. The AI generates output, a qualified person reviews it against regulatory standards, and that person formally accepts or rejects it before it becomes part of the regulatory record.

Under 21 CFR Part 11 and EU GMP Annex 11, electronic systems must be validated, auditable, and operate under documented controls that ensure accountability.

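While these regulations do not explicitly mandate HITL, they require that actions on electronic records be attributable to identified, appropriately trained individuals through unique user accounts, secure time-stamped audit trails and, where records are signed, electronic signatures unique to one person.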

For AI-generated regulatory content, this accountability framework effectively requires human review and approval before records are finalised—a workflow consistent with HITL principles, even if not mandated by that specific terminology.

The Accountability Anchor

AI systems don’t hold regulatory accountability—qualified persons do.

CIOMS Working Group XIV's draft report on AI in pharmacovigilance offers some of the most detailed guidance to date on human oversight models. It defines Human-in-the-Loop (HITL) as a control mechanism in which 'the decision is the result of a human-machine interaction', ensuring that humans remain legally responsible for decisions affecting patient safety.

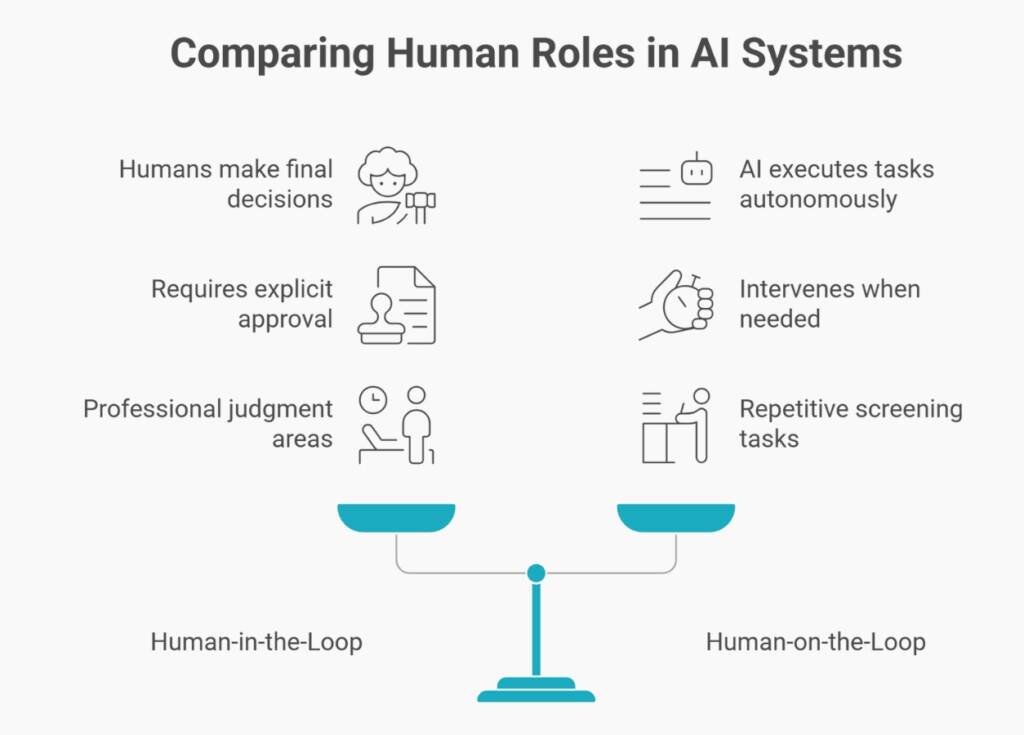

The report distinguishes HITL from Human-on-the-Loop (HOTL), in which AI operates autonomously under human monitoring, and from Human-in-Command, in which humans exercise strategic oversight. It then matches each model to the risk level of the use case.

Pharmaceutical regulatory submissions carry legal weight. AI-generated Clinical Study Reports still require the signatures of medically qualified individuals, and AI-drafted Risk Management Plans still require approval from a safety expert. AI accelerates drafting; humans own the decisions.

Why This Matters: Inspection findings on AI oversight tend to point to governance failures rather than technical ones. Organisations cannot show who approves AI outputs, which standards reviewers apply, or how approvals are documented.

This accountability structure is why Human-in-the-Loop AI in Pharma functions as a regulatory control rather than a technical safeguard.

Regulatory Principle

“For high-risk outputs, Human-in-the-Loop AI is the minimum defensible control.”

Where Regulators Actually Expect Humans to Intervene

High-Risk Applications Requiring Human-in-the-Loop AI in Pharma

| Risk level | AI application examples | Required oversight |
|---|---|---|
| High-Risk | Pharmacovigilance causality assessments, final product labelling, clinical trial safety reports | Mandatory HITL: a qualified person reviews every output, validates source data, applies medical judgment, and provides documented approval |
| Medium-Risk | Literature surveillance screening, regulatory intelligence aggregation, document translation | Human-on-the-Loop acceptable: AI executes tasks autonomously with performance monitoring and defined intervention thresholds |
| Low-Risk | Internal meeting summaries, training material formatting, retrospective data mining | Human-in-Command sufficient: strategic oversight with periodic review of aggregated outputs |
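For teams that need to encode this tiering in a workflow tool or validation plan, the table can be captured as a small lookup that a routing step consults. The sketch below is illustrative only: `RiskTier`, `Oversight`, `ACTIVITY_TIER`, `oversight_for_activity`, and `requires_sign_off` are hypothetical names, and the activity keys are assumed labels drawn from the table's examples, not terms from any regulatory standard.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


class Oversight(Enum):
    HITL = "Human-in-the-Loop"        # qualified person approves every output
    HOTL = "Human-on-the-Loop"        # autonomous execution, monitored against intervention thresholds
    IN_COMMAND = "Human-in-Command"   # strategic oversight, periodic review of aggregated outputs


# Hypothetical policy mirroring the risk table; each organisation would
# define and justify its own tiers in an SOP.
OVERSIGHT_BY_TIER = {
    RiskTier.HIGH: Oversight.HITL,
    RiskTier.MEDIUM: Oversight.HOTL,
    RiskTier.LOW: Oversight.IN_COMMAND,
}

# Hypothetical activity catalogue using examples from the table.
ACTIVITY_TIER = {
    "pv_causality_assessment": RiskTier.HIGH,
    "final_product_labelling": RiskTier.HIGH,
    "literature_screening": RiskTier.MEDIUM,
    "document_translation": RiskTier.MEDIUM,
    "meeting_summary": RiskTier.LOW,
}


def oversight_for_activity(activity: str) -> Oversight:
    """Return the minimum oversight model; unlisted activities default to HITL."""
    tier = ACTIVITY_TIER.get(activity, RiskTier.HIGH)
    return OVERSIGHT_BY_TIER[tier]


def requires_sign_off(activity: str) -> bool:
    """True when every individual output needs documented human approval."""
    return oversight_for_activity(activity) is Oversight.HITL
```

Defaulting unlisted activities to the high-risk tier is a deliberate fail-safe choice in this sketch: a new AI use case gets full HITL review until someone formally assesses and classifies it.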

ICH GCP E6(R3) expects sponsors’ oversight of all delegated activities (Principle 10.3) and requires that computerised systems be validated based on their impact on participant safety and data reliability (Principle 9.3).

While the guideline does not explicitly address AI or mandate HITL by name, its accountability framework establishes that sponsors cannot delegate away responsibility for critical decisions—a principle that supports HITL implementation for high-risk AI applications.

When consultants use AI for regulatory drafting, sponsors must verify that appropriate human validation occurred before submission.

For high-impact regulatory activities, HITL is the only defensible oversight model under GxP principles.

The TGA Context

Australian organisations face specific TGA expectations around data integrity and accountability. As they adopt the accountability principles of ICH E6(R3), they should expect auditors to ask about oversight mechanisms for AI-assisted work, even though the guideline does not explicitly require HITL.

Auditors verify that documented SOPs define AI boundaries, that approval authorities are clear, that audit trails distinguish AI from human actions, and that competency requirements are in place for reviewers.

What Auditors Generally Look For:

- Evidence of qualified review: Documentation showing the reviewer’s credentials, what they validated, and what changes they made

- Version control integrity: Traceable draft-to-final transitions with AI content clearly labelled before approval

- Performance monitoring: Records tracking AI accuracy, flagging anomalies, adjusting workflows when quality drops

Human-in-the-Loop AI in Pharma vs Human-on-the-Loop Oversight

Operational Risk Boundaries

- Human-in-the-Loop (HITL) puts humans in the decision pathway: AI proposes, humans dispose. Every output requires explicit approval before it is entered into regulatory records. This applies where professional judgment is non-delegable, such as medical assessments, safety determinations, and regulatory strategy.

- Human-on-the-Loop (HOTL) positions humans as monitors. AI executes tasks within defined parameters. Humans watch performance and intervene when systems operate outside acceptable bounds. This suits repetitive screening—literature searches, formatting checks, completeness validations.
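The operational difference between the two models can be sketched in a few lines. In the HITL path, nothing reaches the record without an explicit acceptance from a qualified reviewer. In the HOTL path, outputs flow automatically and a monitor raises an intervention flag when the spot-check error rate leaves its bounds. All names here (`hitl_commit`, `HotlMonitor`, `ReviewDecision`) are hypothetical. The `window` and `max_error_rate` values are placeholders, not regulatory figures, and reviewer qualification is assumed to be verified elsewhere.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class AIOutput:
    output_id: str
    content: str


@dataclass
class ReviewDecision:
    reviewer_id: str
    qualified: bool   # reviewer meets the SOP competency requirements (checked elsewhere)
    accepted: bool


def hitl_commit(output: AIOutput, decision: Optional[ReviewDecision],
                record: List[Tuple[str, str]]) -> bool:
    """HITL: AI proposes; only an explicit, qualified acceptance admits it to the record."""
    if decision is None or not decision.qualified or not decision.accepted:
        return False
    record.append((output.output_id, decision.reviewer_id))
    return True


class HotlMonitor:
    """HOTL: outputs proceed automatically; humans intervene when quality drifts."""

    def __init__(self, window: int = 50, max_error_rate: float = 0.05):
        self.results = deque(maxlen=window)   # rolling window of spot-check outcomes
        self.max_error_rate = max_error_rate

    def log_spot_check(self, correct: bool) -> None:
        self.results.append(correct)

    def error_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def needs_intervention(self) -> bool:
        return self.error_rate() > self.max_error_rate
```

The asymmetry is the point. A HITL output with no decision stays out of the record by default, whereas a HOTL output is in unless the monitor trips and a human steps in.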

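The difference between operational and performative HITL is the difference between quality and mere compliance: an operational reviewer actively validates each output, while a performative one rubber-stamps it.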

The Professional Liability Line

What HITL Looks Like in Day-to-Day Regulatory Work

Document Drafting and Approval

- System Action: AI generates a draft, logged with model version and timestamp

- Human Review: Writer validates factual claims against sources, checks citations, and adjusts language

- Documented Changes: Track-changes preserves AI output and human refinement separately

- Formal Approval: Qualified person applies an electronic signature, creating the required audit trail
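The four steps above can be sketched as an append-only audit trail that keeps AI and human actions distinguishable and ties each approval to one exact version of the text. This is a minimal illustration, not a validated Part 11 or Annex 11 system. `RegulatoryDocument`, `AuditEvent`, and their methods are hypothetical. The SHA-256 fingerprint is one common way to link an approval to a specific version, and `approve` only stands in for a real electronic signature.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


def content_hash(text: str) -> str:
    """SHA-256 fingerprint that ties an audit event to one exact version of the text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AuditEvent:
    actor_type: str                      # "ai" or "human": keeps AI and human actions distinguishable
    actor_id: str                        # model name or user ID
    action: str                          # "draft", "edit" or "approve"
    snapshot: str                        # text as it stood after this event (preserves AI output)
    content_sha256: str
    timestamp: str                       # UTC, ISO 8601
    model_version: Optional[str] = None  # recorded for AI actions only


@dataclass
class RegulatoryDocument:
    doc_id: str
    text: str = ""
    trail: List[AuditEvent] = field(default_factory=list)

    def _log(self, actor_type: str, actor_id: str, action: str,
             model_version: Optional[str] = None) -> None:
        # Append-only: existing events are never modified or deleted.
        self.trail.append(AuditEvent(
            actor_type, actor_id, action, self.text, content_hash(self.text),
            datetime.now(timezone.utc).isoformat(), model_version))

    def ai_draft(self, text: str, model_id: str, model_version: str) -> None:
        self.text = text
        self._log("ai", model_id, "draft", model_version)

    def human_edit(self, text: str, user_id: str) -> None:
        self.text = text
        self._log("human", user_id, "edit")

    def approve(self, user_id: str, is_authorised_approver: bool) -> None:
        # Stand-in for an electronic signature; a real system would also
        # re-authenticate the user and record the meaning of the signature.
        if not is_authorised_approver:
            raise PermissionError(f"{user_id} is not an authorised approver")
        self._log("human", user_id, "approve")

    def is_final(self) -> bool:
        """Final only if a human approval covers the exact current text."""
        current = content_hash(self.text)
        return any(e.action == "approve" and e.actor_type == "human"
                   and e.content_sha256 == current for e in self.trail)
```

Because approval is bound to a content hash, any edit made after sign-off silently invalidates finalisation until a qualified person approves again. That is the draft-to-final traceability auditors look for.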

Pharmacovigilance Case Processing

Regulatory Intelligence

When HITL Controls Break Down

Conclusion: The Regulatory Architect Role

Common Questions and Answers

What does Human-in-the-Loop (HITL) mean in pharmaceutical regulatory affairs?

Human-in-the-Loop is a regulatory control where a qualified human must actively review, validate, and approve AI-generated outputs before they become part of the regulatory record.

Why is Human-in-the-Loop considered a regulatory control rather than a quality feature?

Because it anchors legal accountability, data integrity, and patient safety to a named, qualified individual rather than to the AI system.

Where do regulators require Human-in-the-Loop for AI use in GxP environments?

No regulation mandates HITL by name, but GxP accountability requirements make it the expected control for high-risk activities such as pharmacovigilance causality assessments, clinical trial safety reporting, and final regulatory submissions.

What is the difference between Human-in-the-Loop and Human-on-the-Loop in regulatory AI oversight?

Human-in-the-Loop requires explicit human approval of every AI output, while Human-on-the-Loop allows autonomous AI operation with human monitoring and intervention only when performance drifts.

How does ICH E6(R3) influence Human-in-the-Loop requirements for AI?

ICH E6(R3) reinforces sponsor accountability for delegated activities, meaning AI-assisted work must still be overseen, validated, and approved by qualified humans.

What evidence do inspectors expect to see for effective Human-in-the-Loop implementation?

Inspectors look for documented reviewer qualifications, traceable audit trails separating AI and human actions, and records showing active validation—not rubber-stamp approvals.

When does Human-in-the-Loop fail to protect organisations from regulatory or legal liability?

HITL fails when reviews are superficial, reviewers lack competency, audit trails are generic, or approval volume overwhelms human capacity—turning oversight into compliance theatre.

Disclaimer

This article is provided for educational and informational purposes only. It is intended to support general understanding of regulatory concepts and good practice and does not constitute legal, regulatory, or professional advice.

Regulatory requirements, inspection expectations, and system obligations may vary based on jurisdiction, study design, technology, and organisational context. As such, the information presented here should not be relied upon as a substitute for project-specific assessment, validation, or regulatory decision-making.

For guidance tailored to your organisation, systems, or clinical programme, we recommend speaking directly with us or engaging another suitably qualified subject matter expert (SME) to assess your specific needs and risk profile.