Important Note: This article discusses draft guidance released for public consultation. PIC/S Annex 22 has not been finalised or implemented as of December 2025.

AI in pharmaceutical manufacturing is moving from experimental to regulated. In July 2025, the Pharmaceutical Inspection Co-operation Scheme (PIC/S), in partnership with the European Medicines Agency (EMA), released Annex 22 for stakeholder consultation—the first dedicated GMP framework proposal for artificial intelligence and machine learning in medicine production.

The draft Annex 22 sets proposed boundaries: which AI systems could be used in critical manufacturing applications, how they should be validated, and what controls would be expected once the guidance is finalised.

If you manufacture medicines under GMP and use AI in any area that affects product quality, patient safety, or data integrity, this proposed framework will likely apply to you once adopted.

Why Annex 22 Matters

Previous guidance tried forcing AI into frameworks built for traditional software. The current Annex 11 (Computerised Systems), in force since 2011, predates the practical use of modern machine learning in GMP manufacturing. It assumes deterministic systems: identical inputs always produce identical outputs.

AI doesn’t work that way.

Machine learning models find patterns in data and make predictions based on probabilities. Two training runs can yield models that give different results from identical inputs, depending on training data, hyperparameters, or random initialisation, and some models (such as LLMs) sample their outputs, so even one trained model can answer differently each time. Some systems learn continuously from new data, adapting behaviour during operation.

That unpredictability challenges GMP principles. When your automated system classifies a batch as acceptable or flags a critical deviation, regulators and patients need confidence that the decision is reproducible, explainable, and traceable.

The draft Annex 22 proposes to resolve this by drawing clear lines: some AI architectures could work in critical applications if properly controlled, while others would be prohibited.

Note: Concurrently with Annex 22, PIC/S and EMA released a draft revision of Annex 11 that updates the broader computerised systems framework to complement the AI-specific guidance.

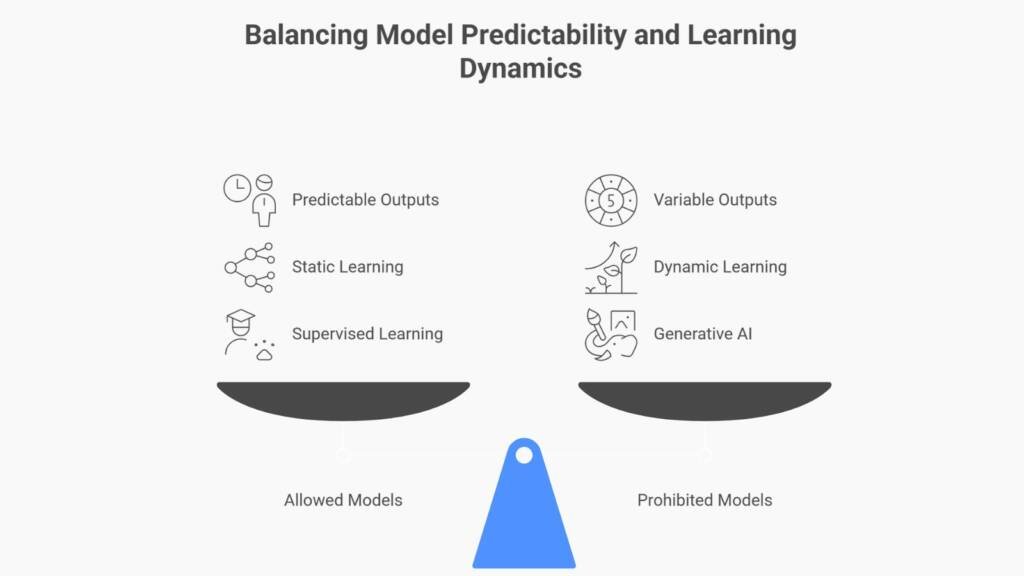

The Core Proposed Requirement: Static, Deterministic Models Only

Here’s the fundamental constraint proposed in the draft: only static AI models with deterministic outputs may be used in critical GMP applications.

What this means in practice:

Potentially Allowed:

Models where parameters freeze after training

Systems producing identical outputs from identical inputs every time

Supervised learning models that classify or predict based on validated data patterns

Proposed as Prohibited:

Dynamic models that continue learning during routine operation

Non-deterministic systems that produce variable outputs from identical inputs

Generative AI and Large Language Models (LLMs) in critical contexts

Any system where behaviour changes without explicit validation

If you’ve deployed adaptive AI that evolves with new production data, be aware that this architecture is likely non-compliant for critical applications under the draft guidance. You may need a fundamental redesign before this guidance becomes mandatory.

Clarification: The prohibition targets non-deterministic outputs, not necessarily the underlying mathematical nature of the algorithm. The key is that identical inputs must produce identical, reproducible results.
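To make “identical inputs, identical outputs” concrete, here is a minimal Python sketch that assumes a toy model whose parameters are a plain list of floats and a hypothetical `frozen_predict` function. It fingerprints the frozen parameters with SHA-256, so an unauthorised change to them is detectable, and checks that repeated inference on the same inputs returns exactly the same result. The draft does not prescribe this test; it is one illustrative way to evidence static, deterministic behaviour.

```python
import hashlib
import json
from typing import Callable, Sequence


def fingerprint_parameters(params: Sequence[float]) -> str:
    """SHA-256 digest of the model parameters.

    Any change to a frozen model's parameters changes the digest, so a
    digest stored at release can be compared before each use.
    """
    # repr() of a float round-trips exactly, so the digest is stable.
    payload = json.dumps([repr(p) for p in params]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def is_reproducible(predict: Callable[[Sequence[float]], float],
                    inputs: Sequence[Sequence[float]],
                    runs: int = 5) -> bool:
    """True only if every input gives exactly the same output on every run."""
    for x in inputs:
        first = predict(x)
        if any(predict(x) != first for _ in range(runs - 1)):
            return False
    return True


# Hypothetical frozen linear model: the weights never change after training.
WEIGHTS = [0.4, -1.2, 0.75]
EXPECTED_DIGEST = fingerprint_parameters(WEIGHTS)  # recorded at release


def frozen_predict(x: Sequence[float]) -> float:
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


samples = [[1.0, 0.5, 2.0], [0.0, 3.0, -1.0]]
print(is_reproducible(frozen_predict, samples))  # True
print(fingerprint_parameters(WEIGHTS) == EXPECTED_DIGEST)  # True
```

A model that updates itself or samples its output would fail one of the two checks.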

What Counts as Critical Applications

The draft Annex 22 applies to computerised systems in medicinal product and active substance manufacturing, where AI models predict or classify data affecting:

Product quality: batch disposition, QC results, deviation classification

Patient safety: release decisions, stability predictions, contamination detection

Data integrity: automated review decisions, record classification, audit trail analysis

Non-critical applications—drafting SOPs, training materials, project planning—could use broader AI capabilities under the draft, but only with qualified Human-in-the-Loop (HITL) oversight.

The critical distinction: if the AI output directly affects product quality, patient safety, or data integrity (for example, whether a batch reaches patients), it falls under the strictest proposed controls.
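Applying this distinction consistently across an AI inventory can start with a simple rule. The sketch below assumes a hypothetical `AISystem` record with one flag per impact area; the field names, example systems, and rule are illustrative rather than taken from the draft, and a real classification would rest on a documented risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystem:
    """One entry in a hypothetical AI system inventory."""
    name: str
    affects_product_quality: bool
    affects_patient_safety: bool
    affects_data_integrity: bool


def classify(system: AISystem) -> str:
    """'critical' if the system's output touches any of the three areas."""
    if (system.affects_product_quality
            or system.affects_patient_safety
            or system.affects_data_integrity):
        return "critical"
    # Non-critical uses would still need qualified human-in-the-loop oversight.
    return "non-critical (HITL required)"


inventory = [
    AISystem("visual inspection classifier", True, True, False),
    AISystem("audit trail review assistant", False, False, True),
    AISystem("SOP drafting assistant", False, False, False),
]

for s in inventory:
    print(f"{s.name}: {classify(s)}")
```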

Five Key Requirement Areas in the Draft Guidance

Note: The five areas below are an interpretive summary to help organisations understand the draft requirements. This grouping is not official PIC/S terminology.

1. Intended Use Definition—Before Anything Else

Every AI model would require comprehensive intended-use documentation approved by process Subject Matter Experts (SMEs) before acceptance testing begins.

This document must specify:

Complete description of what the model predicts or classifies

Full characterisation of input data, including expected variations

Identification of limitations, boundary conditions, and potential biases

Defined subgroups based on relevant characteristics (geography, equipment types, defect categories)

Clear operator responsibilities when used in HITL configurations

This isn’t checkbox documentation. It serves as the proposed foundation for the validation scope, test data requirements, and acceptance criteria. Without SME approval, the draft guidance indicates you cannot proceed to testing.
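The intended-use record and its SME approval gate can be modelled as a simple data structure. In this minimal sketch the `IntendedUse` fields mirror the list above, while the class itself, the `blocking_issues` check, and the example content are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class IntendedUse:
    """Hypothetical intended-use record mirroring the required items."""
    prediction_task: str
    input_description: str
    limitations: List[str] = field(default_factory=list)
    subgroups: List[str] = field(default_factory=list)
    hitl: bool = False
    operator_responsibilities: str = ""
    sme_approver: Optional[str] = None  # set when a process SME signs off


def blocking_issues(doc: IntendedUse) -> List[str]:
    """Reasons acceptance testing cannot start; an empty list means it can."""
    issues = []
    if not doc.prediction_task.strip():
        issues.append("prediction/classification task not described")
    if not doc.input_description.strip():
        issues.append("input data not characterised")
    if not doc.limitations:
        issues.append("limitations and biases not identified")
    if not doc.subgroups:
        issues.append("subgroups not defined")
    if doc.hitl and not doc.operator_responsibilities.strip():
        issues.append("operator responsibilities missing for HITL use")
    if doc.sme_approver is None:
        issues.append("no SME approval")
    return issues


draft = IntendedUse(
    prediction_task="Classify vial images as defect / no defect",
    input_description="8-bit greyscale images from line 2 cameras",
    limitations=["not validated for amber glass"],
    subgroups=["clear glass", "crimp defects", "particulates"],
)
print(blocking_issues(draft))  # ['no SME approval']
```

Once an SME is recorded in `sme_approver`, the list empties and testing may proceed.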

2. Test Data Independence—The Non-Negotiable Control

Here’s where many AI validation efforts could fail under the draft: test data must be completely independent from training and validation datasets.

If test data is split from a shared pool, you must prevent anyone with access to training data from viewing test data. The draft proposes:

Access restrictions with audit trails

Four-eyes principle enforcement

Version control with traceability

Documentation proving independence

This addresses the fundamental risk: if developers see test data, they may optimise models to pass tests rather than demonstrate real-world performance.
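Part of the independence evidence can be automated. The sketch below assumes each record is available as raw bytes (for example, an image file) and flags any test record whose SHA-256 digest also appears in the training or validation sets. It only catches exact duplicates; near-duplicates such as re-cropped images would need additional checks, and the function names are hypothetical.

```python
import hashlib
from typing import Iterable, Set


def digest_set(records: Iterable[bytes]) -> Set[str]:
    """SHA-256 digests of raw record contents (e.g. image file bytes)."""
    return {hashlib.sha256(r).hexdigest() for r in records}


def leaked_into_test(test_set: Iterable[bytes],
                     *development_sets: Iterable[bytes]) -> Set[str]:
    """Digests of test records that also occur in any training/validation set."""
    dev: Set[str] = set()
    for records in development_sets:
        dev |= digest_set(records)
    return digest_set(test_set) & dev


training = [b"vial image 0001", b"vial image 0002"]
validation = [b"vial image 0003"]
test_set = [b"vial image 0004", b"vial image 0002"]  # 0002 also in training
print(len(leaked_into_test(test_set, training, validation)))  # 1
```

Storing the digest sets with each dataset version gives an auditor something concrete to re-check.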

3. Representative Sampling and Verified Labelling

Test data must represent the complete input sample space—not just common scenarios.

Required sampling approach:

Stratified sampling covering all subgroups

Inclusion of rare variations and boundary conditions

Documented rationale for selection criteria

Coverage of potential error modes
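A stratified draw that guarantees a minimum count per subgroup keeps rare variations from being sampled away. In this sketch, `fraction`, `min_per_stratum`, and the subgroup names are hypothetical parameters that an SME team would set and justify; recording the fixed `seed` alongside them makes the selection reproducible as part of the documented rationale.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def stratified_sample(items: Sequence[T], stratum_of: Callable[[T], Hashable],
                      fraction: float, min_per_stratum: int,
                      seed: int) -> Dict[Hashable, List[T]]:
    """Sample `fraction` of every stratum, but at least `min_per_stratum` items
    (or the whole stratum if smaller), so rare subgroups stay represented."""
    groups: Dict[Hashable, List[T]] = defaultdict(list)
    for item in items:
        groups[stratum_of(item)].append(item)
    rng = random.Random(seed)  # fixed seed: the selection can be reproduced
    sample: Dict[Hashable, List[T]] = {}
    for key, members in groups.items():
        k = min(len(members), max(min_per_stratum, round(fraction * len(members))))
        sample[key] = rng.sample(members, k)
    return sample


# Illustrative population: many clear-glass units, few with a rare crack defect.
units = [("clear", i) for i in range(200)] + [("crack", i) for i in range(6)]
picked = stratified_sample(units, lambda u: u[0], fraction=0.1,
                           min_per_stratum=5, seed=42)
print({k: len(v) for k, v in picked.items()})  # {'clear': 20, 'crack': 5}
```

A plain 10% random draw would expect less than one crack unit; the per-stratum minimum guarantees five.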

Label verification requirements:

Test data labels must be verified as correct through methods such as:

Independent expert verification

Validated equipment measurements

Laboratory testing results

Cross-validation by multiple SMEs

The draft emphasises: you cannot validate an AI model with incorrectly labelled data. Garbage in, garbage out applies fully in GMP environments.
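Agreement between independent reviewers is one way to evidence label correctness. The sketch below assumes two SMEs label the same items: it accepts a label only when the reviewers agree (escalating disagreements for adjudication) and computes Cohen’s kappa, a standard chance-corrected agreement statistic. The draft does not name kappa or set a threshold; the rule and the example labels are illustrative.

```python
from collections import Counter
from typing import Optional, Sequence


def consensus_label(votes: Sequence[str]) -> Optional[str]:
    """Return the label if all reviewers agree; None means escalate."""
    return votes[0] if votes and len(set(votes)) == 1 else None


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Chance-corrected agreement between two reviewers' labels."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:  # both reviewers used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


sme_a = ["defect", "ok", "ok", "defect", "ok", "ok"]
sme_b = ["defect", "ok", "defect", "defect", "ok", "ok"]
print([consensus_label(v) for v in zip(sme_a, sme_b)])
# ['defect', 'ok', None, 'defect', 'ok', 'ok']
print(round(cohens_kappa(sme_a, sme_b), 3))  # 0.667
```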

4. Explainability and Confidence Scoring

For critical applications, the draft proposes that AI must explain its decisions in ways humans can verify.

Feature attribution requirements:

Systems must capture and record which input features contributed to each classification. Techniques such as SHAP values, LIME, or visual heat maps can be used to highlight the key factors driving outcomes.

This would allow auditors and SMEs to verify the model bases decisions on relevant, appropriate features—not spurious correlations.
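For a linear model, feature attribution has a closed form: each feature contributes its weight times its deviation from a baseline, and with independent features and the training mean as baseline these contributions equal the model’s exact SHAP values. The sketch below assumes a hypothetical three-feature linear score; the feature names, weights, and baseline are invented for illustration, and nonlinear models would need a library such as SHAP or LIME instead.

```python
from typing import Dict

# Hypothetical linear scoring model: score = BIAS + sum(w_i * x_i).
COEFFICIENTS = {"fill_volume_ml": 2.0, "seal_force_n": -0.5, "camera_contrast": 0.1}
BIAS = 0.3
# Baseline = mean of each feature over the training data (assumed values).
BASELINE = {"fill_volume_ml": 10.0, "seal_force_n": 40.0, "camera_contrast": 0.6}


def score(x: Dict[str, float]) -> float:
    return BIAS + sum(w * x[f] for f, w in COEFFICIENTS.items())


def attributions(x: Dict[str, float]) -> Dict[str, float]:
    """Contribution of each feature: w_i * (x_i - baseline_i).

    These sum to score(x) - score(BASELINE), so the explanation accounts
    for the whole deviation from a typical unit.
    """
    return {f: w * (x[f] - BASELINE[f]) for f, w in COEFFICIENTS.items()}


unit = {"fill_volume_ml": 10.5, "seal_force_n": 52.0, "camera_contrast": 0.6}
ranked = sorted(attributions(unit).items(), key=lambda kv: abs(kv[1]), reverse=True)
print(ranked[0])  # ('seal_force_n', -6.0)
```

An SME reviewing this record can judge whether seal force is a plausible driver for the decision, which is the kind of check against spurious correlations described above.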

Confidence scoring:

Models must log confidence scores for each prediction. When confidence falls below thresholds, the system should flag outcomes as “undecided” instead of making unreliable predictions. This creates clear escalation paths for human review when situations fall outside validated experience.
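The “undecided” fallback can be sketched as a threshold on the model’s top-class probability. This assumes the model outputs logits converted with softmax and a hypothetical threshold of 0.95; in practice the threshold would be set during validation, and softmax probabilities may need calibration before they can be trusted as confidence scores.

```python
import math
from typing import List, Tuple

CLASSES = ["accept", "reject"]  # hypothetical class labels


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(logits: List[float], classes: List[str],
           threshold: float) -> Tuple[str, float]:
    """Return (label, confidence); the label is 'undecided' below threshold.

    The confidence is returned either way so it can be logged.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    confidence = probs[best]
    label = classes[best] if confidence >= threshold else "undecided"
    return label, confidence


print(decide([4.0, 0.0], CLASSES, 0.95)[0])  # accept
print(decide([0.3, 0.0], CLASSES, 0.95)[0])  # undecided
```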

5. Performance Metrics and Acceptance Criteria

Before testing, SMEs must define performance metrics and acceptance criteria at least as stringent as the process being replaced.

Required metrics:

Confusion matrix (true positives, false positives, true negatives, false negatives)

Sensitivity (true positive rate)

Specificity (true negative rate)

Accuracy, precision, F1 score

Metrics specific to intended use

If you cannot quantify your current manual process performance, the draft guidance indicates you cannot validate the AI replacement. This forces an honest assessment of baseline capability before automation.
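The required metrics all derive from the confusion matrix, which makes the “at least as stringent” comparison mechanical once the baseline is quantified. A minimal sketch, assuming binary labels with True meaning “defective”, illustrative test results, and hypothetical baseline figures for the manual process:

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class Confusion:
    tp: int
    fp: int
    tn: int
    fn: int


def confusion(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Confusion:
    """Count outcomes; True is the positive class (e.g. 'defective unit')."""
    pairs = list(zip(y_true, y_pred))
    return Confusion(
        tp=sum(t and p for t, p in pairs),
        fp=sum((not t) and p for t, p in pairs),
        tn=sum((not t) and (not p) for t, p in pairs),
        fn=sum(t and (not p) for t, p in pairs),
    )


def ratio(num: float, den: float) -> float:
    # Returns 0.0 for an empty denominator; a real protocol would flag this.
    return num / den if den else 0.0


def metrics(c: Confusion) -> Dict[str, float]:
    sensitivity = ratio(c.tp, c.tp + c.fn)
    precision = ratio(c.tp, c.tp + c.fp)
    return {
        "sensitivity": sensitivity,
        "specificity": ratio(c.tn, c.tn + c.fp),
        "precision": precision,
        "accuracy": ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }


def meets_criteria(model: Dict[str, float],
                   baseline: Dict[str, float]) -> Dict[str, bool]:
    """Each criterion passes if the model is at least as good as the baseline."""
    return {name: model[name] >= floor for name, floor in baseline.items()}


# Illustrative data only: 4 defective and 6 good units.
y_true = [True, True, True, True, False, False, False, False, False, False]
y_pred = [True, True, True, False, False, False, False, False, True, False]
m = metrics(confusion(y_true, y_pred))
manual_baseline = {"sensitivity": 0.70, "specificity": 0.80}  # hypothetical
print(meets_criteria(m, manual_baseline))  # {'sensitivity': True, 'specificity': True}
```

With only ten illustrative units the estimates are very uncertain; a real protocol would size the test set so the acceptance criteria hold with adequate statistical confidence.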

Proposed Operational Controls After Deployment

Validation wouldn’t end at go-live. The draft Annex 22 proposes continuous controls:

- Change Control: Any change to the model, system, or manufacturing process would require evaluation for retesting. Decisions not to retest must be documented and justified.

- Configuration Management: Models must be under configuration control with measures that detect unauthorised changes. Version control, access restrictions, and audit trails are mandatory.

- Performance Monitoring: Continuous monitoring must detect degradation against the defined performance metrics (model drift) or changes in input data distribution. Automated alerts should trigger an investigation when performance degrades.

- Input Space Monitoring: Regular checks ensure new operational data remains within the validated input sample space. Statistical measures must detect data drift before it affects model reliability.
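Input space monitoring needs a statistic that compares live inputs with the validated reference data. The sketch below uses the Population Stability Index (PSI) on one numeric feature, with bins taken from reference quantiles. PSI is a swapped-in, commonly used drift measure rather than a method named in the draft, and the often-quoted rule of thumb that values above 0.25 indicate significant shift is an industry heuristic, not a regulatory limit.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def quantile_edges(reference: Sequence[float], bins: int) -> List[float]:
    """Interior bin edges at the reference data's quantiles."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * i // bins] for i in range(1, bins)]


def bin_shares(values: Sequence[float], edges: List[float],
               floor: float = 1e-6) -> List[float]:
    """Share of values per bin; `floor` avoids log(0) for empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), floor) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    edges = quantile_edges(reference, bins)
    expected = bin_shares(reference, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Illustrative data: a validated reference feature and a shifted live feed.
reference = [float(i % 100) for i in range(1000)]
shifted = [v + 30.0 for v in reference]
print(psi(reference, reference))  # 0.0
print(psi(reference, shifted) > 0.25)  # True
```

In operation the same check would run per monitored feature on a schedule, with breaches feeding the investigation route described for performance monitoring.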

What This Could Mean for Different Teams

Quality Assurance

Your audit scope may expand significantly. Inspectors could examine:

AI governance frameworks and policies

Multidisciplinary team composition and qualifications

Documentation traceability from intended use through operation

Training records for AI-specific risks and controls

Audit readiness would demand evidence that AI systems are “controllable, testable, and verifiable”—not just functional.

Data Science Teams

Technical architecture may need to change:

Dynamic learning capabilities must be disabled or removed for critical applications

Data governance requires technical controls ensuring test data independence

MLOps pipelines must incorporate GMP change control and configuration management

Explainability tools must be integrated into the model serving infrastructure

Manufacturing Operations

Process validation must address AI-specific risks:

Supplier qualification would include AI development documentation requirements

Sensors feeding AI models must be qualified and calibrated

Deviation management expands to cover model performance issues

Batch records must include AI decision documentation
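A batch-record entry for an AI decision might capture what was decided, by which exact model, on which exact input, and with what confidence. The `AIDecisionRecord` fields, the batch ID, and the model name below are hypothetical; the SHA-256 digests tie the entry to the released model artefact and the evaluated input.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict


@dataclass(frozen=True)
class AIDecisionRecord:
    """Hypothetical batch-record entry for one AI decision."""
    batch_id: str
    model_name: str
    model_version: str
    model_digest: str   # SHA-256 of the released model artefact
    input_digest: str   # SHA-256 of the exact input evaluated
    output: str         # e.g. "accept", "reject" or "undecided"
    confidence: float
    top_features: Dict[str, float]
    timestamp_utc: str
    reviewer: str = ""  # filled in when a human reviews an "undecided" result


def make_record(batch_id: str, model_name: str, model_version: str,
                model_bytes: bytes, input_bytes: bytes, output: str,
                confidence: float,
                top_features: Dict[str, float]) -> AIDecisionRecord:
    """Build a record, hashing the model and input so each entry is traceable."""
    return AIDecisionRecord(
        batch_id=batch_id,
        model_name=model_name,
        model_version=model_version,
        model_digest=hashlib.sha256(model_bytes).hexdigest(),
        input_digest=hashlib.sha256(input_bytes).hexdigest(),
        output=output,
        confidence=confidence,
        top_features=top_features,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
    )


rec = make_record("B-0001", "vial-inspection", "1.3.0", b"<model artefact>",
                  b"<image bytes>", "accept", 0.982, {"seal_force_n": -6.0})
print(json.dumps(asdict(rec), indent=2))
```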

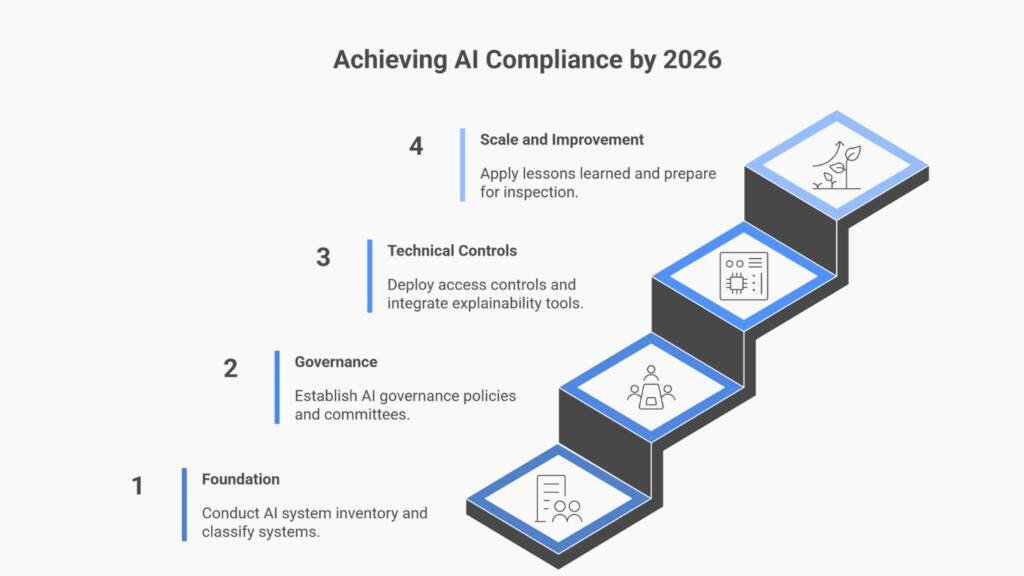

Australian Implementation Context

Important for Australian manufacturers: TGA has not formally adopted Annex 22 yet, but Australia is an active PIC/S member. The TGA participated in the consultation process and invited Australian stakeholders to provide feedback on the draft guidance.

Practical steps for Australian manufacturers:

Engage TGA proactively about AI systems in regulatory submissions

Map Annex 22 draft requirements to existing TGA guidance and your current QMS

Consider aligning with ISO/IEC 42001 (AI management systems) for comprehensive governance

Document rationale for any variations from the draft Annex 22 in your quality system

Final Status Update

As of December 2025, Annex 22 remains a draft. The consultation period closed on October 7, 2025. Organisations should prepare for eventual implementation while recognising that requirements may evolve based on stakeholder feedback. The guidance represents a thoughtful approach to AI governance in GMP environments, but it is not yet enforceable.

Monitor the PIC/S publications page and EMA updates for the final version and official implementation timeline.

PIC/S Annex 22 & Pharma AI: FAQs

Q: What is PIC/S Annex 22 and its current status?

A: PIC/S Annex 22 is draft guidance released July 7, 2025, for stakeholder consultation, developed jointly by PIC/S and EMA. It proposes the first dedicated GMP framework for AI/ML in pharmaceutical manufacturing, focusing on patient safety, product quality, and data integrity. It is not yet finalised or enforceable.

Q: When will PIC/S Annex 22 become mandatory?

A: No implementation date has been set. Following the consultation period (July–October 2025), PIC/S and EMA will review stakeholder feedback before finalising the guidance. Organisations should monitor official PIC/S publications for the final version and implementation timeline.

Q: How does Annex 22 relate to the revised Annex 11?

A: Annex 22 complements the concurrently revised Annex 11 (Computerised Systems). While Annex 22 addresses AI-specific requirements, the revised Annex 11 provides updated foundational requirements for all computerised systems. Both were released together and should be implemented as a comprehensive framework.

Q: Does Annex 22 apply to Australia?

A: Australia’s TGA is a PIC/S participating authority, so Australian manufacturers will likely need to comply once the guidance is finalised and adopted by the TGA.

Q: What should we do during the draft phase?

A: Conduct gap assessments against draft requirements, inventory AI systems, classify critical vs. non-critical applications, and prepare cross-functional governance structures. Use this period to proactively prepare while awaiting final guidance.

Q: Are there any transitional provisions expected?

A: The draft does not specify transitional provisions. Organisations should prepare for implementation once finalised, but may need to seek clarification from PIC/S or national inspectorates about transition timelines for existing AI systems.