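The Foundation: What Is ALCOA+?

ALCOA+ is a set of data integrity principles for GxP records. It extends the original five ALCOA principles (Attributable, Legible, Contemporaneous, Original, Accurate) with four more: Complete, Consistent, Enduring, and Available.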

Why ALCOA+ Matters More Than Ever

Paper made ALCOA+ intuitive: ink signatures stick, documents don’t vanish, filing cabinets enforce version control.

Electronic systems shattered that simplicity.

Today’s auditors expect auditable automation, validated systems, and traceability across spreadsheets, cloud platforms, and AI-assisted workflows. A single shadow database, unapproved document copy, or undocumented AI summary can become an FDA Form 483 observation, or worse.

For pharmacovigilance teams, GCP auditors, and quality systems managers, ALCOA+ is your assurance framework. It’s non-negotiable when you:

Adopt AI tools for case summaries, literature screening, or document drafting. Every AI-assisted output needs documentation: which tool, who used it, how the output was reviewed for accuracy. AI-generated case narratives or flagged literature articles must be traceable and attributable—or inspectors won’t accept them as validated evidence.

Scale across regions. TGA, EMA, and FDA all expect the same ALCOA+ standards. There is no “light-touch” documentation in any geography.

Reduce manual workload without increasing risk. Stretched teams are tempted to shortcut documentation. ALCOA+ discipline closes exactly those gaps.

Prepare for inspections. The inspector’s first question: “Show me how you know that’s true.”

ALCOA+ is your answer.

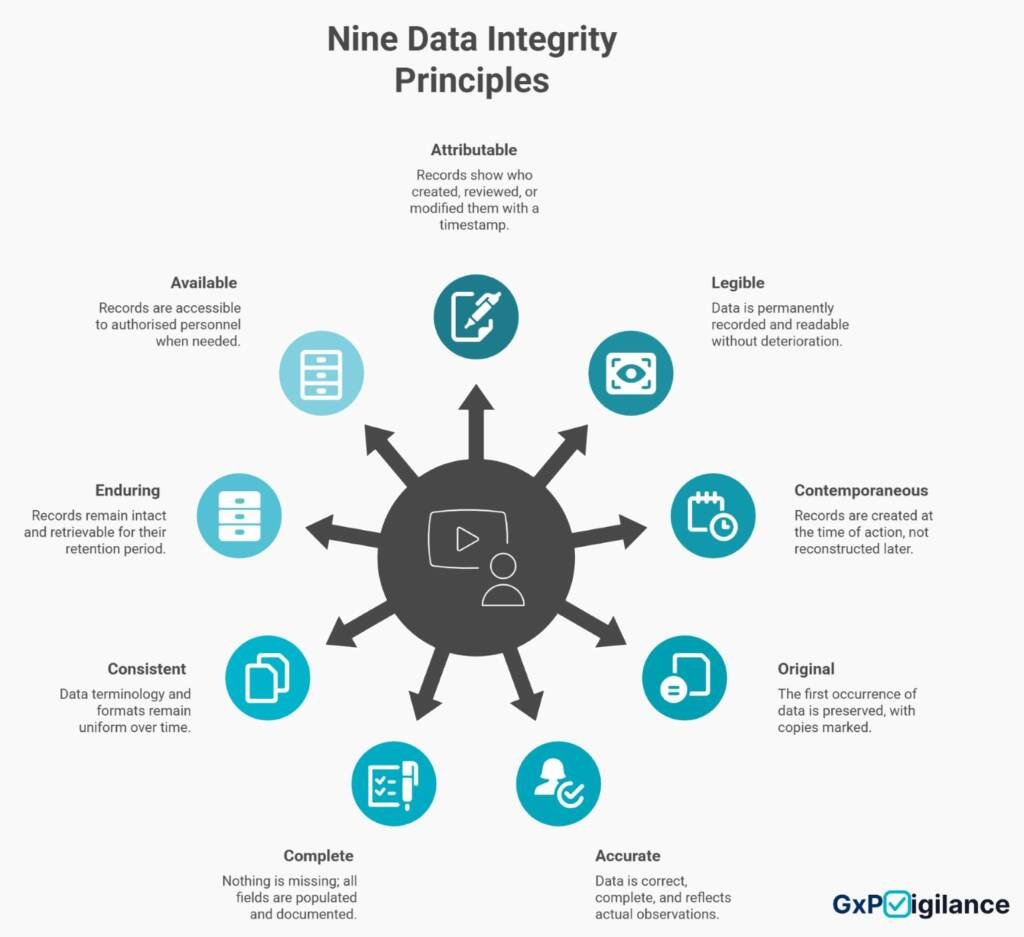

The Nine Principles in Practice: A GxP Lens

Each ALCOA+ principle maps directly to scenarios you face daily. Here’s what they mean when the inspector arrives.

Attributable: Who Did What, When

A pharmacovigilance case lands in your system. Someone must review it—and that someone must be identifiable, permanently.

Electronic signatures (21 CFR Part 11) or role-based access with timestamped logs. When Sarah marks a case “non-serious,” that attribution stays. Corrections add new entries; they never overwrite.

Red flag: Excel spreadsheets with no audit trail. Shared mailboxes with no individual accountability.
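The append-only rule (“corrections add new entries; they never overwrite”) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. It uses an in-memory store, and the names `AuditTrail`, `AuditEntry`, and `reason` are hypothetical. A validated Part 11 system would also need secure persistent storage, authenticated users, and signature manifestation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class AuditEntry:
    """One immutable audit-trail entry: who did what, when, and why."""
    case_id: str
    field: str
    value: str
    user_id: str
    timestamp: datetime
    reason: Optional[str] = None  # documented justification, required for corrections


class AuditTrail:
    """Append-only log: a correction is a new entry, never an edit to an old one."""

    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def record(self, case_id: str, field: str, value: str,
               user_id: str, reason: Optional[str] = None) -> AuditEntry:
        # If the field already has a value, this entry is a correction
        # and must say why the earlier value was changed.
        if self.current_value(case_id, field) is not None and not reason:
            raise ValueError("A correction requires a documented reason")
        entry = AuditEntry(case_id, field, value, user_id,
                           datetime.now(timezone.utc), reason)
        self._entries.append(entry)
        return entry

    def history(self, case_id: str, field: str) -> List[AuditEntry]:
        """Every entry for one field, oldest first, including superseded values."""
        return [e for e in self._entries
                if e.case_id == case_id and e.field == field]

    def current_value(self, case_id: str, field: str) -> Optional[str]:
        entries = self.history(case_id, field)
        return entries[-1].value if entries else None
```

Suppose Sarah marks a case “non-serious” and a reviewer later corrects it. Both entries stay in `history()`, and each keeps its own user ID and UTC timestamp. The current value is simply the latest entry.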

Legible: Readable Now, Readable in 2045

Data must survive format changes, system migrations, and regulatory scrutiny decades from now.

Paper records in permanent ink, acid-free storage. Electronic records in stable formats (PDF/A, validated databases) with documented recovery procedures. When migrating QMS platforms, preserve original attributes intact.

Red flag: Critical records on a departing employee’s laptop. Proprietary formats that only legacy software can open.

Contemporaneous: Real-Time, Not Reconstructed

Records created when the action happens—not transcribed from memory later.

Audit notes dated 15 November must be created on 15 November. CRF pages completed on the day of the visit. Safety reviews timestamped in real time. Corrections added as amendments, not replacements.

Red flag: Backdated entries. Narrative reviews written weeks after the event, with no documented reason for the delay.
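Contemporaneity is easiest to defend when the system stamps the creation time, not the user. The Python sketch below makes two assumptions. First, each record stores both the user-stated event time and a system-generated creation timestamp. Second, late entries are checked against a tolerance. The 24-hour tolerance and all names are hypothetical; the real threshold would come from your own SOPs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical tolerance: anything entered more than 24 hours after the
# event must carry a documented reason for the delay.
MAX_LAG = timedelta(hours=24)


@dataclass
class Entry:
    record_id: str
    event_time: datetime   # when the activity happened (stated by the user)
    entered_at: datetime   # when the system created the record (system clock)
    delay_reason: Optional[str] = None


def contemporaneity_issues(entries: List[Entry]) -> List[str]:
    """Describe each entry that was entered late without explanation,
    or whose stated event time is later than its creation time."""
    issues = []
    for e in entries:
        lag = e.entered_at - e.event_time
        if lag < timedelta(0):
            issues.append(f"{e.record_id}: event time is later than entry time")
        elif lag > MAX_LAG and not e.delay_reason:
            issues.append(f"{e.record_id}: entered {lag} after the event, no reason given")
    return issues
```

The check does not forbid late entries. It flags only the ones that carry no documented reason, which matches the red flag above.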

Original: The First Record Is Truth

The original is your source of truth. Copies marked as copies. Versions tracked, not overwritten.

Safety report initially misclassified as “serious”, then corrected? The audit trail holds both: the original classification and each subsequent amendment, with its justification.

Documents progress from v1.0 to v2.0 and v3.0, each with a change log. Working drafts are archived separately.

Red flag: Overwritten files with no version history. “Master copies” updated in place with no baseline.
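A versioned store that keeps the baseline can be sketched in Python. It is a simplified illustration under stated assumptions: the class names are hypothetical, and every revision is treated as a whole-number version. A real QMS would add minor versions, approval workflows, and electronic signatures.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Version:
    number: str       # "1.0", "2.0", ...
    content: str
    change_log: str


class VersionedDocument:
    """Each revision is appended as a new version; earlier versions stay untouched."""

    def __init__(self, doc_id: str, content: str) -> None:
        self.doc_id = doc_id
        self._versions: List[Version] = [Version("1.0", content, "Initial baseline")]

    def revise(self, content: str, change_log: str) -> Version:
        if not change_log.strip():
            raise ValueError("Every revision needs a change-log entry")
        version = Version(f"{len(self._versions) + 1}.0", content, change_log)
        self._versions.append(version)
        return version

    @property
    def original(self) -> Version:
        return self._versions[0]

    @property
    def current(self) -> Version:
        return self._versions[-1]

    def history(self) -> List[Version]:
        # Return a copy so callers cannot delete or reorder stored versions
        return list(self._versions)
```

There is no method that edits a stored version. A revision therefore cannot replace the “master copy” in place, and `original` always returns the baseline.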

Accurate: Alignment with Reality

Data reflects what was actually observed or done—not what should have happened.

Cases reviewed for completeness before closure. Audit findings verified against source documents before sign-off. Data transcription validated.

Red flag: Cases closed without full narrative review. Audit reports issued without the auditee’s comment. Data entry without verification checks.
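Double data entry is one common way to validate transcription, offered here as an illustration rather than something this article prescribes. Two people key the same source independently, and every mismatch is resolved against the source document before sign-off. The function name and the whitespace-only normalisation in this Python sketch are assumptions.

```python
from typing import Dict, Optional, Tuple


def _normalise(value: Optional[str]) -> Optional[str]:
    # Ignore leading/trailing whitespace; keep None distinct from an empty string
    return value.strip() if value is not None else None


def double_entry_discrepancies(
    first: Dict[str, str], second: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Compare two independent transcriptions of the same source record.

    Returns {field: (first_value, second_value)} for every field where the
    two entries disagree, including fields present in only one of them.
    """
    discrepancies = {}
    for name in sorted(first.keys() | second.keys()):
        a, b = first.get(name), second.get(name)
        if _normalise(a) != _normalise(b):
            discrepancies[name] = (a, b)
    return discrepancies
```

The function only reports mismatches. It does not guess which value is correct, because deciding that is the job of a human check against the source.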

Complete: Nothing Missing

All required fields, supporting documents, and full context. Incomplete records create gaps that regulators question.

QMS flags incomplete records. Mandatory fields enforced. Completeness checklists before external submission. All supporting documents are retained for the full retention period.

Red flag: Cases marked “pending information” for months. Audit reports without backup data. Archived records with missing attachments.
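A completeness check that covers all three red flags can be sketched as a single Python function. The following are all hypothetical: the field names, the 30-day pending limit, and the attachment keys. The required fields are loosely modelled on the four minimum criteria for a valid individual case safety report (identifiable patient, identifiable reporter, suspect product, adverse event), plus a narrative.

```python
from datetime import date, timedelta
from typing import List

# Hypothetical mandatory fields for a case record.
REQUIRED_FIELDS = ("patient_id", "reporter", "suspect_product", "adverse_event", "narrative")
# Hypothetical limit on how long a case may stay "pending information".
MAX_PENDING = timedelta(days=30)


def completeness_issues(case: dict, today: date) -> List[str]:
    """List every completeness gap in one case record (empty list = complete)."""
    issues = [f"missing {name}" for name in REQUIRED_FIELDS if not case.get(name)]

    pending_since = case.get("pending_since")
    if pending_since is not None and today - pending_since > MAX_PENDING:
        issues.append(f"pending information for {(today - pending_since).days} days")

    attached = set(case.get("attachments", []))
    for name in case.get("attachments_expected", []):
        if name not in attached:
            issues.append(f"attachment not found: {name}")
    return issues
```

An empty string counts as missing here, so a mandatory field cannot be satisfied by a blank entry.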

Consistent: Uniform Standards

Same terminology, formats, processes across teams and over time.

One team’s “SAE” is everyone’s “SAE.” Style guides and terminology standards maintained. Standardised workflows: same review steps, same order. When standards change, revise the policy with a defined effective date rather than allowing ad hoc variations.

Red flag: Different teams using different SOP versions. Terminology changing between records. Process variations based on individual preference.
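One way to enforce “one team’s SAE is everyone’s SAE” at data entry is a controlled-vocabulary lookup, sketched here in Python. The vocabulary is a tiny hypothetical example. A real implementation would draw on your terminology standard, which for adverse event terms is typically MedDRA.

```python
from typing import Dict, Set

# Hypothetical controlled vocabulary: each approved term with the variants
# seen in records that must be mapped to it.
CONTROLLED_TERMS: Dict[str, Set[str]] = {
    "SAE": {"sae", "serious adverse event", "serious ae"},
    "AE": {"ae", "adverse event"},
}

# Reverse lookup built once: variant -> approved term
_VARIANT_TO_TERM = {
    variant: term for term, variants in CONTROLLED_TERMS.items() for variant in variants
}


def normalise_term(raw: str) -> str:
    """Map a free-text label to its approved term, or raise if it is not controlled."""
    key = " ".join(raw.lower().split())  # case- and whitespace-insensitive
    try:
        return _VARIANT_TO_TERM[key]
    except KeyError:
        raise ValueError(f"Uncontrolled term: {raw!r}") from None
```

Unknown terms raise an error instead of passing through unchanged. This design choice makes new variants go through a controlled vocabulary update rather than slip into records.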

Enduring: Built for Decades

Records intact, retrievable, and unaltered for the entire retention period, often years or decades.

Validated servers with redundancy. Quarterly backup testing. Archive records in a separate, secure location. Access controls prevent accidental overwriting. Documented retention schedules enforced.

Red flag: Single-point backups. Records on personal devices. No disaster recovery plan.
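Backup testing means more than checking that a backup job ran. One approach is to compare checksums of every primary file against its backup copy. The Python sketch below assumes the backup mirrors the primary directory layout; the function names are hypothetical. A full restore test into a separate environment would still be needed to prove recoverability.

```python
import hashlib
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large archives do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_backup(primary: Path, backup: Path) -> List[str]:
    """Compare every file under `primary` with its counterpart under `backup`."""
    problems = []
    for src in sorted(p for p in primary.rglob("*") if p.is_file()):
        rel = src.relative_to(primary)
        copy = backup / rel
        if not copy.is_file():
            problems.append(f"missing in backup: {rel.as_posix()}")
        elif sha256_of(src) != sha256_of(copy):
            problems.append(f"checksum mismatch: {rel.as_posix()}")
    return problems
```

An empty result is evidence for the quarterly test record. Any entry in the result is a deviation to investigate.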

Available: Retrievable When Needed

Authorised personnel retrieve records in usable format without unreasonable delay.

A regulator requests pharmacovigilance cases from a specific date range? You produce them within hours. Records indexed, tagged, and stored in standard formats (PDF, Excel, databases).

SOPs centralised, version-controlled, searchable—not scattered across shared drives.

Red flag: Records locked in legacy systems requiring special software. No retrieval procedure. Critical SOPs in one person’s email archive.
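Fast retrieval depends on records carrying the metadata a request will filter on. The Python sketch below shows date-range and tag retrieval, assuming each case stores a received date and a set of tags. The names are hypothetical, and in practice this would be an indexed database query rather than a list scan.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List, Optional


@dataclass
class CaseRecord:
    case_id: str
    received: date
    tags: FrozenSet[str] = frozenset()


def cases_in_range(cases: List[CaseRecord], start: date, end: date,
                   tag: Optional[str] = None) -> List[CaseRecord]:
    """Return cases received between start and end (inclusive),
    optionally filtered by tag, oldest first."""
    if start > end:
        raise ValueError("start must not be after end")
    hits = [c for c in cases
            if start <= c.received <= end and (tag is None or tag in c.tags)]
    return sorted(hits, key=lambda c: (c.received, c.case_id))
```

Sorting by received date, then case ID, makes the output deterministic. The same request should produce the same ordered set every time it is run.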

ALCOA+ and Emerging Technology: Staying Defensible as You Automate

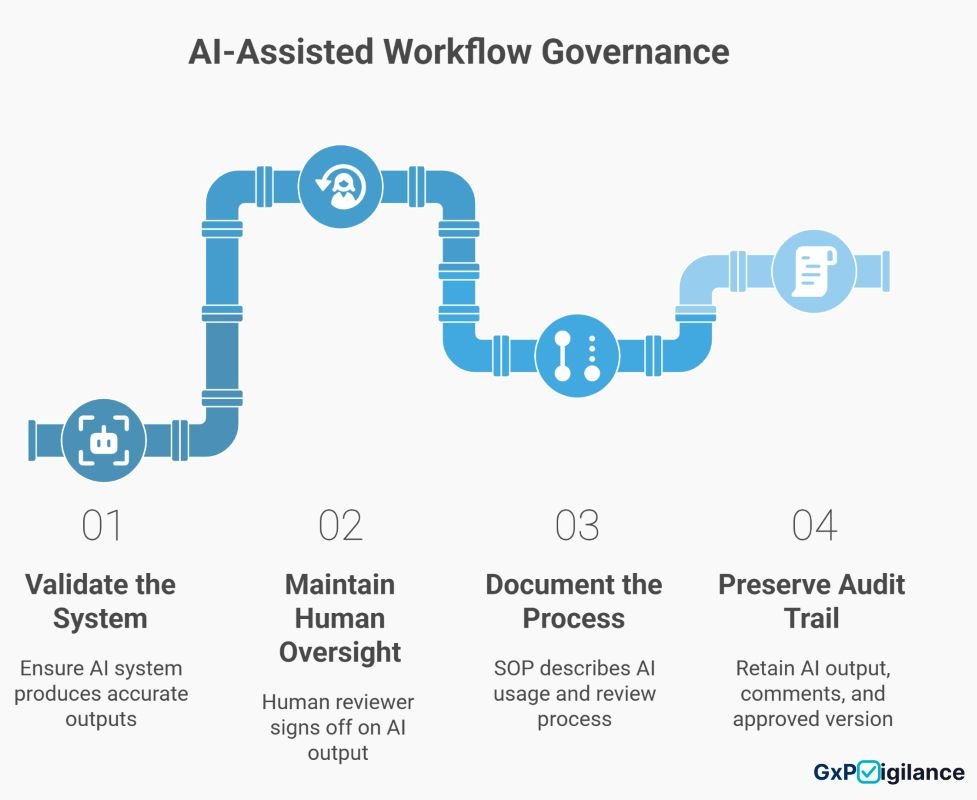

Here’s the challenge: as you adopt AI tools, workflow automation, and cloud systems, ALCOA+ compliance gets harder, not easier. A generative AI tool that drafts a case summary must produce traceable, auditable output, or it fails ALCOA+.

Consider a common scenario: Your team uses an AI tool to draft pharmacovigilance narratives, saving significant time. But the AI’s reasoning is opaque. How is that attributable? Who is responsible for accuracy if the AI makes an error? Is the output original, or is it a derivative that must be marked as such?

The solution is governance first, technology second:

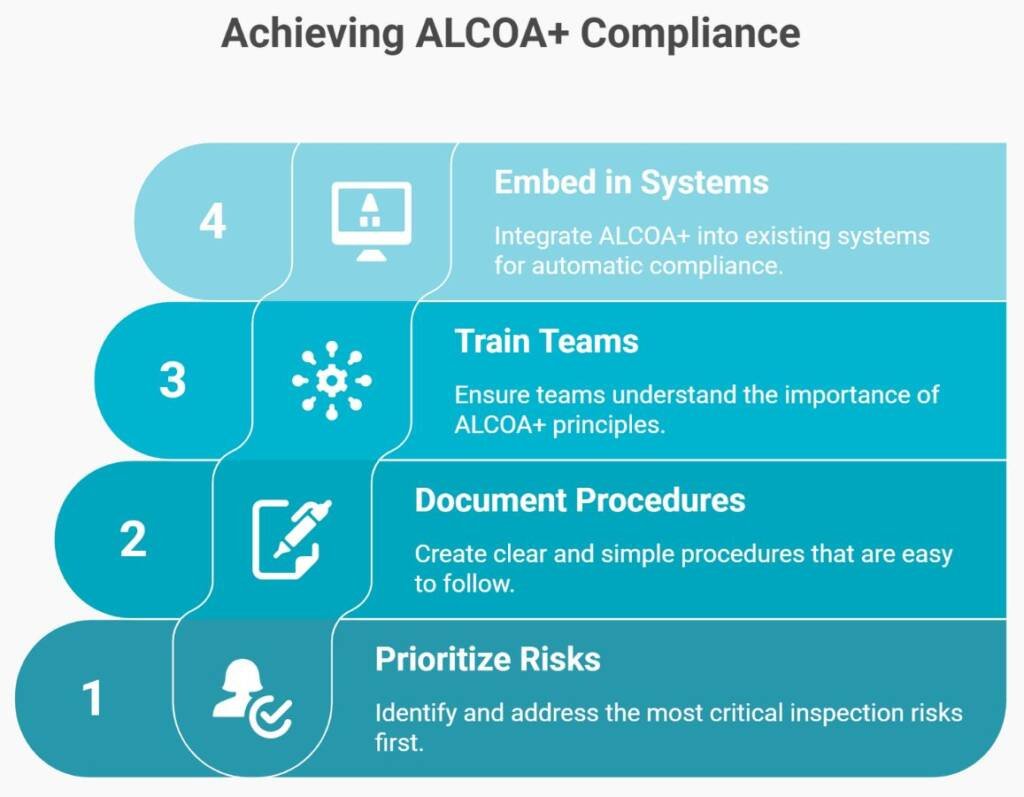
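To make the governance questions concrete (which tool, who used it, how the output was reviewed), one option is to store a provenance record alongside every AI-drafted narrative. This Python sketch is hypothetical throughout: the field names, the three review outcomes, and the tool name in the usage note are illustrative assumptions, not features of any specific product. It keeps the AI draft unchanged and stores the reviewer’s approved text separately, so the draft remains the original and the approved text remains attributable to a named human.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

ALLOWED_OUTCOMES = {"accepted", "edited", "rejected"}


@dataclass
class AIAssistedOutput:
    """Provenance for one AI-drafted artefact (all field names hypothetical)."""
    case_id: str
    tool_name: str
    tool_version: str
    input_sha256: str        # fingerprint of the exact input given to the tool
    draft_text: str          # AI output exactly as generated; never edited
    requested_by: str
    generated_at: datetime
    reviewed_by: Optional[str] = None
    reviewed_at: Optional[datetime] = None
    review_outcome: Optional[str] = None
    final_text: Optional[str] = None   # what the human reviewer approved


def record_draft(case_id: str, tool_name: str, tool_version: str,
                 tool_input: str, draft_text: str, user: str) -> AIAssistedOutput:
    digest = hashlib.sha256(tool_input.encode("utf-8")).hexdigest()
    return AIAssistedOutput(case_id, tool_name, tool_version, digest,
                            draft_text, user, datetime.now(timezone.utc))


def sign_off(record: AIAssistedOutput, reviewer: str, outcome: str,
             final_text: Optional[str] = None) -> AIAssistedOutput:
    if record.reviewed_by is not None:
        raise ValueError("Already reviewed; record a new draft instead of overwriting")
    if outcome not in ALLOWED_OUTCOMES:
        raise ValueError(f"Unknown outcome: {outcome!r}")
    if outcome == "edited" and not final_text:
        raise ValueError("An edited outcome must store the reviewer's final text")
    record.reviewed_by = reviewer
    record.reviewed_at = datetime.now(timezone.utc)
    record.review_outcome = outcome
    # Accepted drafts are used verbatim; rejected drafts produce no final text
    if outcome == "accepted":
        record.final_text = record.draft_text
    elif outcome == "edited":
        record.final_text = final_text
    return record


def is_release_ready(record: AIAssistedOutput) -> bool:
    return record.review_outcome in {"accepted", "edited"}
```

For example, a narrative drafted with a hypothetical `narrative-drafter` tool stays blocked from release until a named reviewer signs it off. After that, both the AI draft and the reviewer’s corrected text remain on the record.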

The Practical Path Forward
