Why AI Governance Matters

When AI Enters Regulated Healthcare Operations

AI tools promise speed and consistency. But in pharmaceutical manufacturing, pharmacovigilance, clinical trials, and pharmacy operations, every AI output becomes a record. Every decision needs an audit trail. Every recommendation requires human verification.

Your regulators want answers: Who approved this AI system? How do you know it works correctly? What happens when it makes a mistake? Can you explain its recommendations? Who takes responsibility?

We help you answer these questions before inspectors ask them.

I’m Carl Bufe—The AI-Native GxP Practitioner. I don’t sell AI governance frameworks and disappear. I build working governance systems alongside your team, thoroughly document the controls, train your personnel to maintain them, and ensure you’re inspection-ready.

What AI Governance Actually Means

AI governance defines who makes decisions, who approves them, who monitors the process, and who is responsible for addressing issues that arise.

Without governance:

- Teams deploy ChatGPT for case summaries without validation

- Vendors promise “AI-powered” tools nobody can explain

- Data scientists build models that quality teams can’t audit

- Inspection findings cite inadequate oversight and missing documentation

With governance:

- You know which AI tools run where, and what each one does

- Each system has documented validation, risk assessment, and performance monitoring

- Human oversight sits exactly where medical or regulatory judgment matters

- Audit trails prove you controlled the technology—not the other way around

This isn’t about stopping AI. It’s about deploying it safely within your existing quality systems and regulatory obligations.

Request an AI Governance Consultation

What You Gain

- Clear Ownership: Every AI application maps to a responsible person, approval authority, and escalation path. No more “the AI said so” defences.

- Risk-Based Controls: We classify each use case by impact and risk. Literature surveillance automation needs different controls than clinical decision support. You apply the right level of oversight without creating bureaucracy.

- Regulatory Alignment: Your governance framework references ISO 42001 (AI management systems), GAMP 5 (computerised system validation), and ICH E6 (R3) (clinical trial systems) so auditors recognise familiar territory.

- Data Integrity Protection: AI outputs link back to approved sources. Version control tracks model changes. Audit trails record who saw what recommendation and what decision they made.

- Human-in-the-Loop Assurance: Where judgment matters (safety assessments, protocol deviations, quality decisions), humans review, approve, and take accountability. The AI assists. Humans decide.

- Practical Implementation: Governance integrates into your QMS, CAPA system, change control, and vendor qualification processes. It becomes how work gets done, not extra paperwork.
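For teams that want to see how ownership, risk tiers, and audit trails could fit together in practice, here is a minimal sketch in Python. It is illustrative only: the tier names, the control names in `REQUIRED_CONTROLS`, the role titles, the source ID format, and the `record_decision` helper are all assumptions made for this example, not part of any standard or of a specific governance framework. Your own risk assessment procedure would define the real tiers and controls.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Optional, Sequence


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical mapping from risk tier to minimum controls (an assumption
# for illustration; a real mapping comes from your risk assessment).
REQUIRED_CONTROLS = {
    RiskTier.LOW: {"validation", "periodic_review"},
    RiskTier.MEDIUM: {"validation", "periodic_review", "performance_monitoring"},
    RiskTier.HIGH: {"validation", "periodic_review", "performance_monitoring",
                    "human_review"},
}


@dataclass(frozen=True)
class AIUseCase:
    """One AI application with its owner, approver, and escalation path."""
    name: str
    owner: str
    approver: str
    escalation_contact: str
    risk_tier: RiskTier
    model_version: str

    def requires_human_review(self) -> bool:
        return "human_review" in REQUIRED_CONTROLS[self.risk_tier]


@dataclass(frozen=True)
class AuditEntry:
    """Who saw which recommendation, from which sources, and what they decided."""
    use_case: str
    model_version: str
    source_ids: tuple
    recommendation: str
    reviewer: Optional[str]
    decision: str
    timestamp: str


def record_decision(use_case: AIUseCase, recommendation: str,
                    source_ids: Sequence[str], reviewer: Optional[str],
                    decision: str) -> AuditEntry:
    # An AI output must link back to at least one approved source.
    if not source_ids:
        raise ValueError("recommendation has no linked source")
    # Use cases whose tier demands human review cannot be recorded without
    # a named reviewer.
    if use_case.requires_human_review() and not reviewer:
        raise ValueError(f"{use_case.name} requires a named human reviewer")
    return AuditEntry(
        use_case=use_case.name,
        model_version=use_case.model_version,
        source_ids=tuple(source_ids),
        recommendation=recommendation,
        reviewer=reviewer,
        decision=decision,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


# Example entries; the tier assignments and role names are hypothetical.
literature = AIUseCase("literature surveillance", "PV Lead", "Head of QA",
                       "Safety Physician", RiskTier.MEDIUM, "1.2.0")
cds = AIUseCase("clinical decision support", "Clinical Lead", "Head of QA",
                "Medical Director", RiskTier.HIGH, "0.9.1")

entry = record_decision(cds, "Flag possible interaction", ["SRC-001"],
                        reviewer="j.smith", decision="accepted")
```

The design choice worth noting is that the human-review requirement is derived from the risk tier rather than set per use case, so reclassifying a use case changes its controls in one place. In a real system the entries would be written to a controlled, append-only store rather than held in memory.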

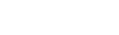

Our Process

We embed governance into your operations—not as an afterthought, but as a strategic enabler of safe adoption.

Regulatory Context

AI governance is not a checkbox—it’s a living framework that evolves with your operations and the rules around you. Our approach is informed by widely referenced guidance so your controls feel familiar to auditors and ethics boards, without implying certification:

- ISO 42001 (AI Management Systems) — used as a reference for lifecycle thinking and accountability.

- GAMP 5 (2nd Edition) — influences how we approach validation evidence, change control, and data integrity for AI-enabled systems.

- ICH E6 (R3) — informs expectations for computerised systems and oversight in clinical research.

- ALCOA++ — shapes data integrity practices for records and audit trails.

- EU GMP Annex 11 / Annex 22 (where applicable) — informs computerised system control and emerging AI considerations in GMP.

- FDA and other regulators’ AI/ML communications — inform lifecycle maintenance, transparency, and monitoring themes.

Integration Across the Organisation

We weave AI governance directly into your operational ecosystem, aligning:

- Quality Management (QMS): CAPA, deviation handling, change control

- Computerised System Validation (CSV): Lifecycle documentation and testing

- Data Integrity & IT Governance: Access controls, audit trails, retention

- Supplier Oversight: Qualification and assurance for third-party AI tools

- Risk Management: QRM integration and AI-specific risk assessment

This unified structure ensures AI strengthens—rather than disrupts—your compliance foundations.

Who This Service Is For

- Pharmaceutical Manufacturers introducing AI to production monitoring or quality control

- Pharmacovigilance & Safety Teams applying AI to case triage, literature surveillance, or signal detection

- Sponsors & CROs using AI for protocol writing, patient selection, or risk-based monitoring

- Regulatory Affairs Teams strengthening submission confidence and provenance documentation

- Hospital & Research Operations overseeing clinical decision support and research systems

- Pharmacy Services building safe, auditable AI assistance for compounding or medication review

- Data Science Teams needing GxP-aware guardrails and validation frameworks


Defining the Boundaries of Safe AI

What We Won’t Do

- Build software

- Deploy AI without validation or risk assessment

- Skip human oversight where medical or regulatory judgment is required

- Treat governance as compliance theatre—checkbox exercises that don’t protect anyone

- Implement controls so bureaucratic that they prevent practical work

What We Will Do

- Build governance frameworks that your team can actually operate

- Document everything to inspection-ready standards

- Train your people to assess risk, monitor performance, and maintain controls

- Set honest expectations about what AI can and can’t do safely

- Track outcomes transparently—measure governance effectiveness, not just policy existence